Add fusion (#3)

Browse files* vectorize and optimized block reduce

* add benchmark test (w/o readme update)

* implemented fused_mul_poly_norm

Signed-off-by: taehyun <[email protected]>

* add_rms_norm added

* deleted backward pass on fused add rms norm, split test and benchmarks

Signed-off-by: taehyun <[email protected]>

* refactored benchmarks

* add readme

* fix readme

* add build

* fix readme

* fix readme2

* add mi250 results

* highlight used our kernel for baseline in fused performance

* applied yapf

---------

Signed-off-by: taehyun <[email protected]>

Co-authored-by: taehyun <[email protected]>

This view is limited to 50 files because it contains too many changes.

See raw diff

- README.md +178 -7

- activation/block_reduce.h +0 -21

- activation/fused_add_rms_norm.cu +157 -0

- activation/fused_mul_poly_norm.cu +642 -0

- activation/poly_norm.cu +88 -61

- activation/rms_norm.cu +243 -51

- benchmarks/README.md +35 -0

- benchmarks/cases/__init__.py +1 -0

- benchmarks/cases/add_rms.py +55 -0

- benchmarks/cases/mul_poly.py +53 -0

- benchmarks/cases/poly.py +58 -0

- benchmarks/cases/rms.py +35 -0

- benchmarks/common/__init__.py +1 -0

- benchmarks/common/bench_framework.py +220 -0

- benchmarks/common/diff_engine.py +85 -0

- benchmarks/plots/h100/add_rms/plot_add_rms-bwd-perf.png +0 -0

- benchmarks/plots/h100/add_rms/plot_add_rms-fwd-perf.png +0 -0

- benchmarks/plots/h100/mul_poly/plot_mul_poly-bwd-perf.png +0 -0

- benchmarks/plots/h100/mul_poly/plot_mul_poly-fwd-perf.png +0 -0

- benchmarks/plots/h100/poly/plot_poly-bwd-perf.png +0 -0

- benchmarks/plots/h100/poly/plot_poly-fwd-perf.png +0 -0

- benchmarks/plots/h100/rms/plot_rms-bwd-perf.png +0 -0

- benchmarks/plots/h100/rms/plot_rms-fwd-perf.png +0 -0

- benchmarks/plots/mi250/add_rms/plot_add_rms-bwd-perf.png +0 -0

- benchmarks/plots/mi250/add_rms/plot_add_rms-fwd-perf.png +0 -0

- benchmarks/plots/mi250/mul_poly/plot_mul_poly-bwd-perf.png +0 -0

- benchmarks/plots/mi250/mul_poly/plot_mul_poly-fwd-perf.png +0 -0

- benchmarks/plots/mi250/poly/plot_poly-bwd-perf.png +0 -0

- benchmarks/plots/mi250/poly/plot_poly-fwd-perf.png +0 -0

- benchmarks/plots/mi250/rms/plot_rms-bwd-perf.png +0 -0

- benchmarks/plots/mi250/rms/plot_rms-fwd-perf.png +0 -0

- benchmarks/run_cases.py +143 -0

- build.toml +4 -2

- build/torch27-cxx11-cu118-x86_64-linux/activation/__init__.py +24 -2

- tests/perf.png → build/torch27-cxx11-cu118-x86_64-linux/activation/_activation_20250907180255.abi3.so +2 -2

- build/torch27-cxx11-cu118-x86_64-linux/activation/_ops.py +3 -3

- build/torch27-cxx11-cu118-x86_64-linux/activation/layers.py +48 -2

- build/torch27-cxx11-cu118-x86_64-linux/activation/poly_norm.py +37 -0

- build/torch27-cxx11-cu118-x86_64-linux/activation/rms_norm.py +47 -0

- build/torch27-cxx11-cu126-x86_64-linux/activation/__init__.py +24 -2

- build/torch27-cxx11-cu126-x86_64-linux/activation/_activation_20250907180255.abi3.so +3 -0

- build/torch27-cxx11-cu126-x86_64-linux/activation/_ops.py +3 -3

- build/torch27-cxx11-cu126-x86_64-linux/activation/layers.py +48 -2

- build/torch27-cxx11-cu126-x86_64-linux/activation/poly_norm.py +37 -0

- build/torch27-cxx11-cu126-x86_64-linux/activation/rms_norm.py +47 -0

- build/torch27-cxx11-cu128-x86_64-linux/activation/__init__.py +24 -2

- build/torch27-cxx11-cu128-x86_64-linux/activation/_activation_20250907180255.abi3.so +3 -0

- build/torch27-cxx11-cu128-x86_64-linux/activation/_ops.py +3 -3

- build/torch27-cxx11-cu128-x86_64-linux/activation/layers.py +48 -2

- build/torch27-cxx11-cu128-x86_64-linux/activation/poly_norm.py +37 -0

README.md

CHANGED

|

@@ -11,6 +11,37 @@ Activation is a python package that contains custom CUDA-based activation kernel

|

|

| 11 |

- Currently implemented

|

| 12 |

- [PolyNorm](https://arxiv.org/html/2411.03884v1)

|

| 13 |

- [RMSNorm](https://docs.pytorch.org/docs/stable/generated/torch.nn.RMSNorm.html)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 14 |

|

| 15 |

## Usage

|

| 16 |

|

|

@@ -28,18 +59,158 @@ print(poly_norm(x))

|

|

| 28 |

```

|

| 29 |

|

| 30 |

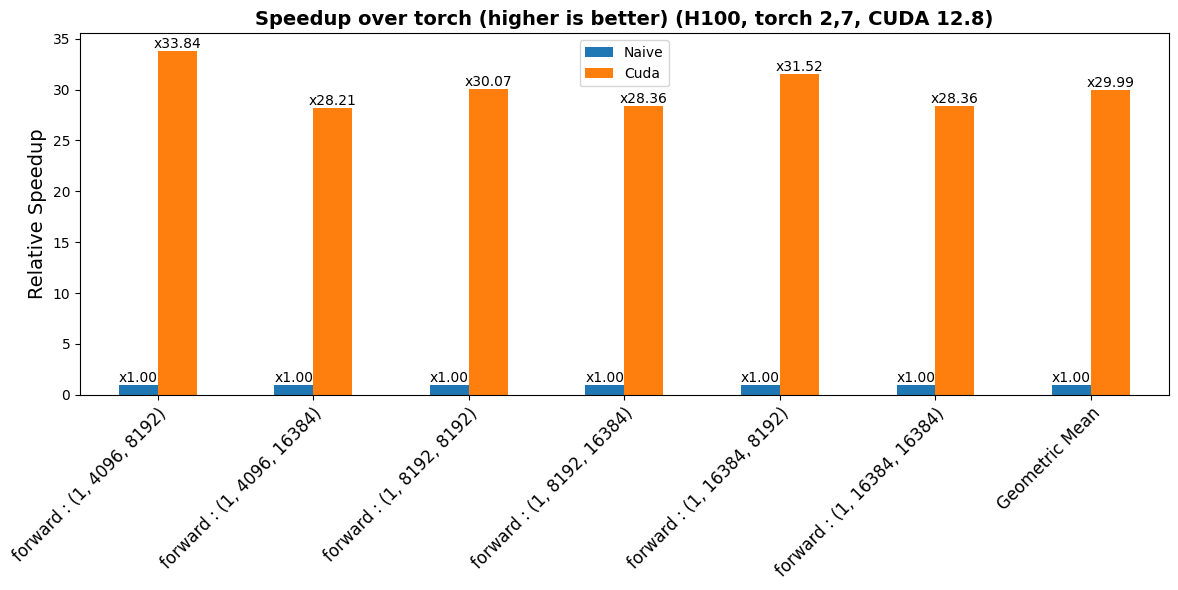

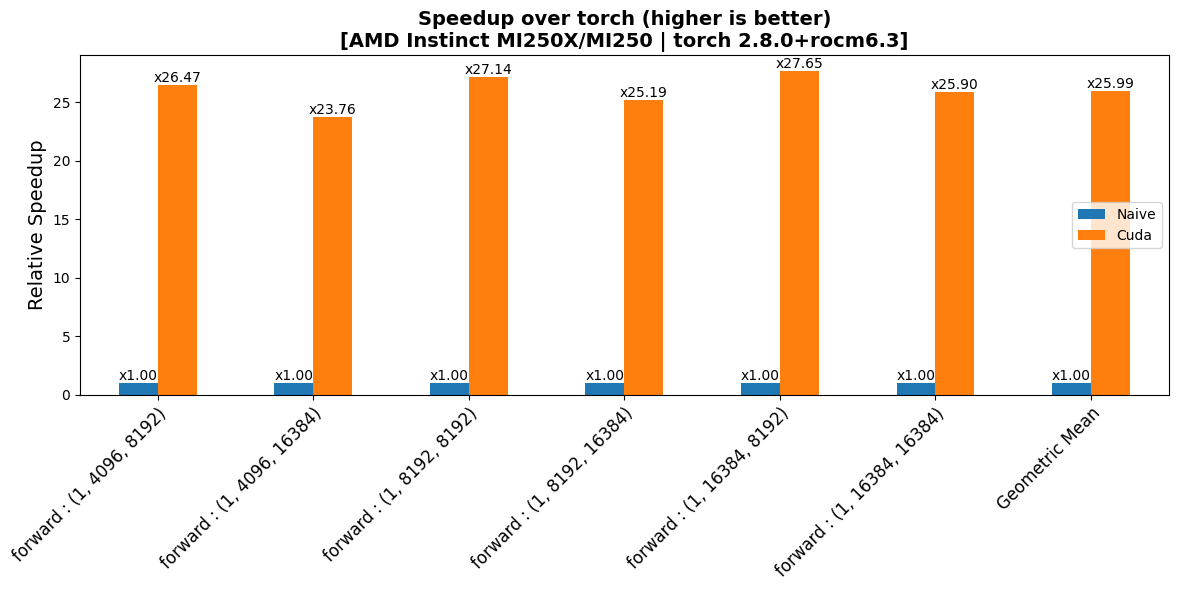

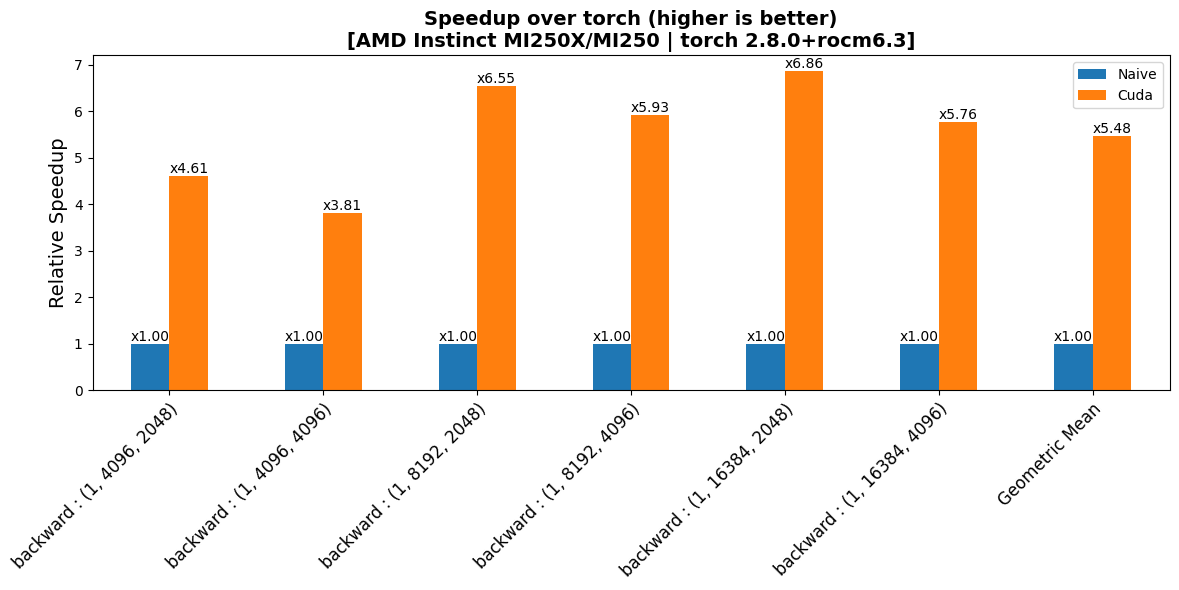

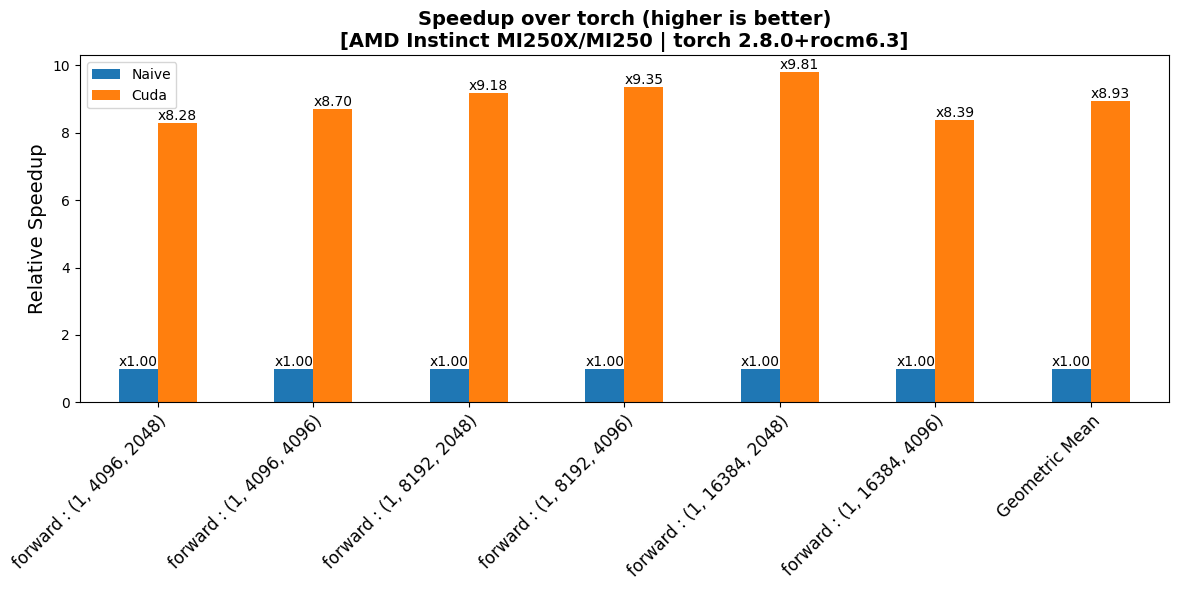

## Performance

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 31 |

|

| 32 |

### PolyNorm

|

| 33 |

|

| 34 |

-

|

| 35 |

-

- You can reproduce the results with:

|

| 36 |

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 41 |

|

| 42 |

-

|

| 43 |

|

| 44 |

## Pre-commit Hooks

|

| 45 |

|

|

|

|

| 11 |

- Currently implemented

|

| 12 |

- [PolyNorm](https://arxiv.org/html/2411.03884v1)

|

| 13 |

- [RMSNorm](https://docs.pytorch.org/docs/stable/generated/torch.nn.RMSNorm.html)

|

| 14 |

+

- **FusedAddRMSNorm**

|

| 15 |

+

|

| 16 |

+

A fused operator that combines **residual addition** (`x + residual`) with **RMSNorm** in a single kernel.

|

| 17 |

+

- Instead of:

|

| 18 |

+

|

| 19 |

+

```python

|

| 20 |

+

y = x + residual

|

| 21 |

+

out = rms_norm(y, weight, eps)

|

| 22 |

+

```

|

| 23 |

+

|

| 24 |

+

- Fused as:

|

| 25 |

+

|

| 26 |

+

```python

|

| 27 |

+

out = fused_add_rms_norm(x, residual, weight, eps)

|

| 28 |

+

```

|

| 29 |

+

|

| 30 |

+

- **FusedMulPolyNorm**

|

| 31 |

+

|

| 32 |

+

A fused operator that combines **PolyNorm** with an **element-wise multiplication** by a Tensor.

|

| 33 |

+

- Instead of:

|

| 34 |

+

|

| 35 |

+

```python

|

| 36 |

+

y = poly_norm(x, weight, bias, eps)

|

| 37 |

+

out = y * a

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

- Fused as:

|

| 41 |

+

|

| 42 |

+

```python

|

| 43 |

+

out = fused_mul_poly_norm(x, a, weight, bias, eps)

|

| 44 |

+

```

|

| 45 |

|

| 46 |

## Usage

|

| 47 |

|

|

|

|

| 59 |

```

|

| 60 |

|

| 61 |

## Performance

|

| 62 |

+

- Test cases are from the Motif LLM

|

| 63 |

+

- The results can be reproduced using the provided benchmarking tools.

|

| 64 |

+

- For details on how to use the benchmarking tools, please refer to the [benchmarks README](./benchmarks/README.md).

|

| 65 |

+

- The benchmark results may show fluctuations, especially in the backward pass and when the dimension size is small.

|

| 66 |

+

|

| 67 |

+

### RMSNorm

|

| 68 |

+

|

| 69 |

+

#### H100 Results

|

| 70 |

+

|

| 71 |

+

<details>

|

| 72 |

+

<summary>Forward Performance</summary>

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

</details>

|

| 77 |

+

|

| 78 |

+

<details>

|

| 79 |

+

<summary>Backward Performance</summary>

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

</details>

|

| 84 |

+

|

| 85 |

+

#### MI250 Results

|

| 86 |

+

|

| 87 |

+

<details>

|

| 88 |

+

<summary>Forward Performance</summary>

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

</details>

|

| 93 |

+

|

| 94 |

+

<details>

|

| 95 |

+

<summary>Backward Performance</summary>

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

</details>

|

| 100 |

+

|

| 101 |

+

---

|

| 102 |

+

|

| 103 |

+

### FusedAddRMSNorm

|

| 104 |

+

|

| 105 |

+

> [!NOTE]

|

| 106 |

+

> For fusion case performance, the **non-fused baseline** was implemented with our **custom kernels**.

|

| 107 |

+

|

| 108 |

+

#### H100 Results

|

| 109 |

+

|

| 110 |

+

<details>

|

| 111 |

+

<summary>Forward Performance</summary>

|

| 112 |

+

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

</details>

|

| 116 |

+

|

| 117 |

+

<details>

|

| 118 |

+

<summary>Backward Performance</summary>

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

</details>

|

| 123 |

+

|

| 124 |

+

#### MI250 Results

|

| 125 |

+

|

| 126 |

+

<details>

|

| 127 |

+

<summary>Forward Performance</summary>

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

</details>

|

| 132 |

+

|

| 133 |

+

<details>

|

| 134 |

+

<summary>Backward Performance</summary>

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

</details>

|

| 139 |

+

|

| 140 |

+

---

|

| 141 |

|

| 142 |

### PolyNorm

|

| 143 |

|

| 144 |

+

#### H100 Results

|

|

|

|

| 145 |

|

| 146 |

+

<details>

|

| 147 |

+

<summary>Forward Performance</summary>

|

| 148 |

+

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

</details>

|

| 152 |

+

|

| 153 |

+

<details>

|

| 154 |

+

<summary>Backward Performance</summary>

|

| 155 |

+

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

</details>

|

| 159 |

+

|

| 160 |

+

#### MI250 Results

|

| 161 |

+

|

| 162 |

+

<details>

|

| 163 |

+

<summary>Forward Performance</summary>

|

| 164 |

+

|

| 165 |

+

|

| 166 |

+

|

| 167 |

+

</details>

|

| 168 |

+

|

| 169 |

+

<details>

|

| 170 |

+

<summary>Backward Performance</summary>

|

| 171 |

+

|

| 172 |

+

|

| 173 |

+

|

| 174 |

+

</details>

|

| 175 |

+

|

| 176 |

+

---

|

| 177 |

+

|

| 178 |

+

### FusedMulPolyNorm

|

| 179 |

+

|

| 180 |

+

> [!NOTE]

|

| 181 |

+

> For fusion case performance, the **non-fused baseline** was implemented with our **custom kernels**.

|

| 182 |

+

|

| 183 |

+

#### H100 Results

|

| 184 |

+

|

| 185 |

+

<details>

|

| 186 |

+

<summary>Forward Performance</summary>

|

| 187 |

+

|

| 188 |

+

|

| 189 |

+

|

| 190 |

+

</details>

|

| 191 |

+

|

| 192 |

+

<details>

|

| 193 |

+

<summary>Backward Performance</summary>

|

| 194 |

+

|

| 195 |

+

|

| 196 |

+

|

| 197 |

+

</details>

|

| 198 |

+

|

| 199 |

+

#### MI250 Results

|

| 200 |

+

|

| 201 |

+

<details>

|

| 202 |

+

<summary>Forward Performance</summary>

|

| 203 |

+

|

| 204 |

+

|

| 205 |

+

|

| 206 |

+

</details>

|

| 207 |

+

|

| 208 |

+

<details>

|

| 209 |

+

<summary>Backward Performance</summary>

|

| 210 |

+

|

| 211 |

+

|

| 212 |

|

| 213 |

+

</details>

|

| 214 |

|

| 215 |

## Pre-commit Hooks

|

| 216 |

|

activation/block_reduce.h

DELETED

|

@@ -1,21 +0,0 @@

|

|

| 1 |

-

namespace motif {

|

| 2 |

-

|

| 3 |

-

template <typename acc_t, int BLOCK_SIZE>

|

| 4 |

-

__device__ acc_t _block_reduce_sum(acc_t *shared, const float val,

|

| 5 |

-

const int d) {

|

| 6 |

-

// TODO: Optimize with warp-level primitives

|

| 7 |

-

__syncthreads();

|

| 8 |

-

|

| 9 |

-

shared[threadIdx.x] = threadIdx.x < d ? val : 0.0f;

|

| 10 |

-

__syncthreads();

|

| 11 |

-

for (int stride = BLOCK_SIZE / 2; stride > 0; stride /= 2) {

|

| 12 |

-

if (threadIdx.x < stride) {

|

| 13 |

-

shared[threadIdx.x] += shared[threadIdx.x + stride];

|

| 14 |

-

}

|

| 15 |

-

__syncthreads();

|

| 16 |

-

}

|

| 17 |

-

|

| 18 |

-

return shared[0];

|

| 19 |

-

}

|

| 20 |

-

|

| 21 |

-

} // namespace motif

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

activation/fused_add_rms_norm.cu

ADDED

|

@@ -0,0 +1,157 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#include <ATen/Functions.h>

|

| 2 |

+

#include <ATen/cuda/CUDAContext.h>

|

| 3 |

+

#include <c10/cuda/CUDAGuard.h>

|

| 4 |

+

#include <torch/all.h>

|

| 5 |

+

|

| 6 |

+

#include <cmath>

|

| 7 |

+

|

| 8 |

+

#include "assert_utils.h"

|

| 9 |

+

#include "atomic_utils.h"

|

| 10 |

+

#include "cuda_compat.h"

|

| 11 |

+

#include "dispatch_utils.h"

|

| 12 |

+

|

| 13 |

+

namespace motif {

|

| 14 |

+

|

| 15 |

+

template <typename type, int N> struct alignas(sizeof(type) * N) type_vec_t {

|

| 16 |

+

type data[N];

|

| 17 |

+

};

|

| 18 |

+

|

| 19 |

+

template <typename scalar_t, typename acc_t, int width>

|

| 20 |

+

__global__ std::enable_if_t<(width > 0)>

|

| 21 |

+

fused_add_rms_norm_kernel(scalar_t *__restrict__ out, // [..., d]

|

| 22 |

+

scalar_t *__restrict__ add_out, // [..., d]

|

| 23 |

+

const scalar_t *__restrict__ input, // [..., d]

|

| 24 |

+

const scalar_t *__restrict__ residual, // [..., d]

|

| 25 |

+

const scalar_t *__restrict__ weight, // [d]

|

| 26 |

+

const float eps, const int d) {

|

| 27 |

+

using vec_t = type_vec_t<scalar_t, width>;

|

| 28 |

+

|

| 29 |

+

const int vec_d = d / width;

|

| 30 |

+

const int64_t vec_offset = blockIdx.x * vec_d;

|

| 31 |

+

const vec_t *__restrict__ input_vec = reinterpret_cast<const vec_t *>(input);

|

| 32 |

+

const vec_t *__restrict__ residual_vec =

|

| 33 |

+

reinterpret_cast<const vec_t *>(residual);

|

| 34 |

+

vec_t *__restrict__ add_out_vec = reinterpret_cast<vec_t *>(add_out);

|

| 35 |

+

acc_t sum_square = 0.0f;

|

| 36 |

+

|

| 37 |

+

for (int64_t idx = threadIdx.x; idx < vec_d; idx += blockDim.x) {

|

| 38 |

+

vec_t x_vec = input_vec[vec_offset + idx];

|

| 39 |

+

vec_t res_vec = residual_vec[vec_offset + idx];

|

| 40 |

+

vec_t add_vec;

|

| 41 |

+

|

| 42 |

+

#pragma unroll

|

| 43 |

+

for (int i = 0; i < width; ++i) {

|

| 44 |

+

acc_t x = x_vec.data[i] + res_vec.data[i];

|

| 45 |

+

sum_square += x * x;

|

| 46 |

+

add_vec.data[i] = x;

|

| 47 |

+

}

|

| 48 |

+

add_out_vec[vec_offset + idx] = add_vec;

|

| 49 |

+

}

|

| 50 |

+

|

| 51 |

+

using BlockReduce = cub::BlockReduce<float, 1024>;

|

| 52 |

+

__shared__ typename BlockReduce::TempStorage reduceStore;

|

| 53 |

+

|

| 54 |

+

sum_square = BlockReduce(reduceStore).Sum(sum_square, blockDim.x);

|

| 55 |

+

|

| 56 |

+

__shared__ acc_t s_scale;

|

| 57 |

+

|

| 58 |

+

if (threadIdx.x == 0) {

|

| 59 |

+

s_scale = rsqrtf(sum_square / d + eps);

|

| 60 |

+

}

|

| 61 |

+

__syncthreads();

|

| 62 |

+

|

| 63 |

+

const vec_t *__restrict__ weight_vec =

|

| 64 |

+

reinterpret_cast<const vec_t *>(weight);

|

| 65 |

+

vec_t *__restrict__ output_vec = reinterpret_cast<vec_t *>(out);

|

| 66 |

+

|

| 67 |

+

for (int64_t idx = threadIdx.x; idx < vec_d; idx += blockDim.x) {

|

| 68 |

+

vec_t x_vec = add_out_vec[vec_offset + idx];

|

| 69 |

+

vec_t w_vec = weight_vec[idx];

|

| 70 |

+

vec_t y_vec;

|

| 71 |

+

|

| 72 |

+

#pragma unroll

|

| 73 |

+

for (int i = 0; i < width; ++i) {

|

| 74 |

+

acc_t x = x_vec.data[i];

|

| 75 |

+

acc_t w = w_vec.data[i];

|

| 76 |

+

|

| 77 |

+

y_vec.data[i] = w * x * s_scale;

|

| 78 |

+

}

|

| 79 |

+

output_vec[vec_offset + idx] = y_vec;

|

| 80 |

+

}

|

| 81 |

+

}

|

| 82 |

+

|

| 83 |

+

template <typename scalar_t, typename acc_t, int width>

|

| 84 |

+

__global__ std::enable_if_t<(width == 0)>

|

| 85 |

+

fused_add_rms_norm_kernel(scalar_t *__restrict__ out, // [..., d]

|

| 86 |

+

scalar_t *__restrict__ add_out, // [..., d]

|

| 87 |

+

const scalar_t *__restrict__ input, // [..., d]

|

| 88 |

+

const scalar_t *__restrict__ residual, // [..., d]

|

| 89 |

+

const scalar_t *__restrict__ weight, // [d]

|

| 90 |

+

const float eps, const int d) {

|

| 91 |

+

const int64_t token_idx = blockIdx.x;

|

| 92 |

+

const int64_t vec_idx = threadIdx.x;

|

| 93 |

+

acc_t sum_square = 0.0f;

|

| 94 |

+

|

| 95 |

+

for (int64_t idx = vec_idx; idx < d; idx += blockDim.x) {

|

| 96 |

+

acc_t x = input[token_idx * d + idx] + residual[token_idx * d + idx];

|

| 97 |

+

sum_square += x * x;

|

| 98 |

+

add_out[token_idx * d + idx] = x;

|

| 99 |

+

}

|

| 100 |

+

|

| 101 |

+

using BlockReduce = cub::BlockReduce<float, 1024>;

|

| 102 |

+

__shared__ typename BlockReduce::TempStorage reduceStore;

|

| 103 |

+

|

| 104 |

+

sum_square = BlockReduce(reduceStore).Sum(sum_square, blockDim.x);

|

| 105 |

+

|

| 106 |

+

__shared__ acc_t s_scale;

|

| 107 |

+

|

| 108 |

+

if (vec_idx == 0) {

|

| 109 |

+

s_scale = rsqrtf(sum_square / d + eps);

|

| 110 |

+

}

|

| 111 |

+

__syncthreads();

|

| 112 |

+

|

| 113 |

+

for (int64_t idx = vec_idx; idx < d; idx += blockDim.x) {

|

| 114 |

+

acc_t x = add_out[token_idx * d + idx];

|

| 115 |

+

acc_t w = weight[idx];

|

| 116 |

+

out[token_idx * d + idx] = w * x * s_scale;

|

| 117 |

+

}

|

| 118 |

+

}

|

| 119 |

+

|

| 120 |

+

} // namespace motif

|

| 121 |

+

|

| 122 |

+

#define LAUNCH_RMS_NORM(width) \

|

| 123 |

+

MOTIF_DISPATCH_FLOATING_TYPES( \

|

| 124 |

+

input.scalar_type(), "fused_add_rms_norm_kernel", [&] { \

|

| 125 |

+

motif::fused_add_rms_norm_kernel<scalar_t, float, width> \

|

| 126 |

+

<<<grid, block, 0, stream>>>( \

|

| 127 |

+

out.data_ptr<scalar_t>(), add_out.data_ptr<scalar_t>(), \

|

| 128 |

+

input.data_ptr<scalar_t>(), residual.data_ptr<scalar_t>(), \

|

| 129 |

+

weight.data_ptr<scalar_t>(), eps, d); \

|

| 130 |

+

});

|

| 131 |

+

|

| 132 |

+

void fused_add_rms_norm(torch::Tensor &out, // [..., d]

|

| 133 |

+

torch::Tensor &add_out, // [..., d]

|

| 134 |

+

const torch::Tensor &input, // [..., d]

|

| 135 |

+

const torch::Tensor &residual, // [..., d]

|

| 136 |

+

const torch::Tensor &weight, // [d]

|

| 137 |

+

double eps) {

|

| 138 |

+

AssertTensorShapeEqual(input, residual, "input", "residual");

|

| 139 |

+

AssertTensorShapeEqual(input, out, "input", "out");

|

| 140 |

+

AssertTensorShapeEqual(input, add_out, "input", "result");

|

| 141 |

+

AssertTensorNotNull(weight, "weight");

|

| 142 |

+

// TODO shape check

|

| 143 |

+

|

| 144 |

+

int d = input.size(-1);

|

| 145 |

+

int64_t num_tokens = input.numel() / input.size(-1);

|

| 146 |

+

dim3 grid(num_tokens);

|

| 147 |

+

const int max_block_size = (num_tokens < 256) ? 1024 : 256;

|

| 148 |

+

dim3 block(std::min(d, max_block_size));

|

| 149 |

+

|

| 150 |

+

const at::cuda::OptionalCUDAGuard device_guard(device_of(input));

|

| 151 |

+

const cudaStream_t stream = at::cuda::getCurrentCUDAStream();

|

| 152 |

+

if (d % 8 == 0) {

|

| 153 |

+

LAUNCH_RMS_NORM(8);

|

| 154 |

+

} else {

|

| 155 |

+

LAUNCH_RMS_NORM(0);

|

| 156 |

+

}

|

| 157 |

+

}

|

activation/fused_mul_poly_norm.cu

ADDED

|

@@ -0,0 +1,642 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#include <ATen/Functions.h>

|

| 2 |

+

#include <ATen/cuda/CUDAContext.h>

|

| 3 |

+

#include <c10/cuda/CUDAGuard.h>

|

| 4 |

+

#include <torch/all.h>

|

| 5 |

+

|

| 6 |

+

#include <cmath>

|

| 7 |

+

|

| 8 |

+

#include "assert_utils.h"

|

| 9 |

+

#include "atomic_utils.h"

|

| 10 |

+

#include "cuda_compat.h"

|

| 11 |

+

#include "dispatch_utils.h"

|

| 12 |

+

|

| 13 |

+

namespace motif {

|

| 14 |

+

|

| 15 |

+

template <typename type, int N> struct alignas(sizeof(type) * N) type_vec_t {

|

| 16 |

+

type data[N];

|

| 17 |

+

};

|

| 18 |

+

|

| 19 |

+

struct SumOp {

|

| 20 |

+

__device__ float3 operator()(const float3 &a, const float3 &b) const {

|

| 21 |

+

return make_float3(a.x + b.x, a.y + b.y, a.z + b.z);

|

| 22 |

+

}

|

| 23 |

+

};

|

| 24 |

+

|

| 25 |

+

struct SumOp4 {

|

| 26 |

+

__device__ float4 operator()(const float4 &a, const float4 &b) const {

|

| 27 |

+

return make_float4(a.x + b.x, a.y + b.y, a.z + b.z, a.w + b.w);

|

| 28 |

+

}

|

| 29 |

+

};

|

| 30 |

+

|

| 31 |

+

template <typename scalar_t, typename acc_t, int width>

|

| 32 |

+

__global__ std::enable_if_t<(width > 0)>

|

| 33 |

+

fused_mul_poly_norm_kernel(scalar_t *__restrict__ out, // [..., d]

|

| 34 |

+

const scalar_t *__restrict__ input, // [..., d]

|

| 35 |

+

const scalar_t *__restrict__ mul, // [..., d]

|

| 36 |

+

const scalar_t *__restrict__ weight, // [3]

|

| 37 |

+

const scalar_t *__restrict__ bias, // [1]

|

| 38 |

+

const float eps, const int d) {

|

| 39 |

+

using vec_t = type_vec_t<scalar_t, width>;

|

| 40 |

+

|

| 41 |

+

const int vec_d = d / width;

|

| 42 |

+

const int64_t vec_offset = blockIdx.x * vec_d;

|

| 43 |

+

const vec_t *__restrict__ input_vec = reinterpret_cast<const vec_t *>(input);

|

| 44 |

+

|

| 45 |

+

acc_t sum2 = 0.0f;

|

| 46 |

+

acc_t sum4 = 0.0f;

|

| 47 |

+

acc_t sum6 = 0.0f;

|

| 48 |

+

|

| 49 |

+

for (int64_t idx = threadIdx.x; idx < vec_d; idx += blockDim.x) {

|

| 50 |

+

vec_t x_vec = input_vec[vec_offset + idx];

|

| 51 |

+

|

| 52 |

+

#pragma unroll

|

| 53 |

+

for (int i = 0; i < width; ++i) {

|

| 54 |

+

acc_t x1 = x_vec.data[i];

|

| 55 |

+

acc_t x2 = x1 * x1;

|

| 56 |

+

acc_t x4 = x2 * x2;

|

| 57 |

+

acc_t x6 = x4 * x2;

|

| 58 |

+

|

| 59 |

+

sum2 += x2;

|

| 60 |

+

sum4 += x4;

|

| 61 |

+

sum6 += x6;

|

| 62 |

+

}

|

| 63 |

+

}

|

| 64 |

+

|

| 65 |

+

using BlockReduce = cub::BlockReduce<float3, 1024>;

|

| 66 |

+

__shared__ typename BlockReduce::TempStorage reduceStore;

|

| 67 |

+

|

| 68 |

+

float3 thread_sums = make_float3(sum2, sum4, sum6);

|

| 69 |

+

float3 block_sums =

|

| 70 |

+

BlockReduce(reduceStore).Reduce(thread_sums, SumOp{}, blockDim.x);

|

| 71 |

+

|

| 72 |

+

sum2 = block_sums.x;

|

| 73 |

+

sum4 = block_sums.y;

|

| 74 |

+

sum6 = block_sums.z;

|

| 75 |

+

|

| 76 |

+

__shared__ acc_t s_bias;

|

| 77 |

+

|

| 78 |

+

__shared__ acc_t s_w2_inv_std1;

|

| 79 |

+

__shared__ acc_t s_w1_inv_std2;

|

| 80 |

+

__shared__ acc_t s_w0_inv_std3;

|

| 81 |

+

|

| 82 |

+

if (threadIdx.x == 0) {

|

| 83 |

+

acc_t w0 = weight[0];

|

| 84 |

+

acc_t w1 = weight[1];

|

| 85 |

+

acc_t w2 = weight[2];

|

| 86 |

+

s_bias = bias[0];

|

| 87 |

+

|

| 88 |

+

s_w2_inv_std1 = rsqrtf(sum2 / d + eps) * w2;

|

| 89 |

+

s_w1_inv_std2 = rsqrtf(sum4 / d + eps) * w1;

|

| 90 |

+

s_w0_inv_std3 = rsqrtf(sum6 / d + eps) * w0;

|

| 91 |

+

}

|

| 92 |

+

__syncthreads();

|

| 93 |

+

|

| 94 |

+

acc_t w2_inv_std1 = s_w2_inv_std1;

|

| 95 |

+

acc_t w1_inv_std2 = s_w1_inv_std2;

|

| 96 |

+

acc_t w0_inv_std3 = s_w0_inv_std3;

|

| 97 |

+

acc_t bias_reg = s_bias;

|

| 98 |

+

|

| 99 |

+

vec_t *__restrict__ output_vec = reinterpret_cast<vec_t *>(out);

|

| 100 |

+

const vec_t *__restrict__ mul_vec = reinterpret_cast<const vec_t *>(mul);

|

| 101 |

+

|

| 102 |

+

for (int64_t idx = threadIdx.x; idx < vec_d; idx += blockDim.x) {

|

| 103 |

+

vec_t x_vec = input_vec[vec_offset + idx];

|

| 104 |

+

vec_t m_vec = mul_vec[vec_offset + idx];

|

| 105 |

+

vec_t y_vec;

|

| 106 |

+

|

| 107 |

+

#pragma unroll

|

| 108 |

+

for (int i = 0; i < width; ++i) {

|

| 109 |

+

acc_t x1 = x_vec.data[i];

|

| 110 |

+

scalar_t m = m_vec.data[i];

|

| 111 |

+

acc_t x2 = x1 * x1;

|

| 112 |

+

acc_t x3 = x2 * x1;

|

| 113 |

+

scalar_t poly_norm_result =

|

| 114 |

+

x1 * w2_inv_std1 + x2 * w1_inv_std2 + x3 * w0_inv_std3 + bias_reg;

|

| 115 |

+

y_vec.data[i] = poly_norm_result * m;

|

| 116 |

+

}

|

| 117 |

+

output_vec[vec_offset + idx] = y_vec;

|

| 118 |

+

}

|

| 119 |

+

}

|

| 120 |

+

|

| 121 |

+

template <typename scalar_t, typename acc_t, int width>

|

| 122 |

+

__global__ std::enable_if_t<(width == 0)>

|

| 123 |

+

fused_mul_poly_norm_kernel(scalar_t *__restrict__ out, // [..., d]

|

| 124 |

+

const scalar_t *__restrict__ input, // [..., d]

|

| 125 |

+

const scalar_t *__restrict__ mul, // [..., d]

|

| 126 |

+

const scalar_t *__restrict__ weight, // [3]

|

| 127 |

+

const scalar_t *__restrict__ bias, // [1]

|

| 128 |

+

const float eps, const int d) {

|

| 129 |

+

const int64_t token_idx = blockIdx.x;

|

| 130 |

+

|

| 131 |

+

acc_t sum2 = 0.0f;

|

| 132 |

+

acc_t sum4 = 0.0f;

|

| 133 |

+

acc_t sum6 = 0.0f;

|

| 134 |

+

|

| 135 |

+

for (int64_t idx = threadIdx.x; idx < d; idx += blockDim.x) {

|

| 136 |

+

acc_t x1 = input[token_idx * d + idx];

|

| 137 |

+

acc_t x2 = x1 * x1;

|

| 138 |

+

acc_t x4 = x2 * x2;

|

| 139 |

+

acc_t x6 = x4 * x2;

|

| 140 |

+

|

| 141 |

+

sum2 += x2;

|

| 142 |

+

sum4 += x4;

|

| 143 |

+

sum6 += x6;

|

| 144 |

+

}

|

| 145 |

+

|

| 146 |

+

using BlockReduce = cub::BlockReduce<float3, 1024>;

|

| 147 |

+

__shared__ typename BlockReduce::TempStorage reduceStore;

|

| 148 |

+

|

| 149 |

+

float3 thread_sums = make_float3(sum2, sum4, sum6);

|

| 150 |

+

float3 block_sums =

|

| 151 |

+

BlockReduce(reduceStore).Reduce(thread_sums, SumOp{}, blockDim.x);

|

| 152 |

+

|

| 153 |

+

sum2 = block_sums.x;

|

| 154 |

+

sum4 = block_sums.y;

|

| 155 |

+

sum6 = block_sums.z;

|

| 156 |

+

|

| 157 |

+

__shared__ acc_t s_bias;

|

| 158 |

+

|

| 159 |

+

__shared__ acc_t s_w2_inv_std1;

|

| 160 |

+

__shared__ acc_t s_w1_inv_std2;

|

| 161 |

+

__shared__ acc_t s_w0_inv_std3;

|

| 162 |

+

|

| 163 |

+

if (threadIdx.x == 0) {

|

| 164 |

+

acc_t w0 = weight[0];

|

| 165 |

+

acc_t w1 = weight[1];

|

| 166 |

+

acc_t w2 = weight[2];

|

| 167 |

+

s_bias = bias[0];

|

| 168 |

+

|

| 169 |

+

s_w2_inv_std1 = rsqrtf(sum2 / d + eps) * w2;

|

| 170 |

+

s_w1_inv_std2 = rsqrtf(sum4 / d + eps) * w1;

|

| 171 |

+

s_w0_inv_std3 = rsqrtf(sum6 / d + eps) * w0;

|

| 172 |

+

}

|

| 173 |

+

__syncthreads();

|

| 174 |

+

|

| 175 |

+

acc_t w2_inv_std1 = s_w2_inv_std1;

|

| 176 |

+

acc_t w1_inv_std2 = s_w1_inv_std2;

|

| 177 |

+

acc_t w0_inv_std3 = s_w0_inv_std3;

|

| 178 |

+

acc_t bias_reg = s_bias;

|

| 179 |

+

|

| 180 |

+

for (int64_t idx = threadIdx.x; idx < d; idx += blockDim.x) {

|

| 181 |

+

acc_t x1 = input[token_idx * d + idx];

|

| 182 |

+

scalar_t m = mul[token_idx * d + idx];

|

| 183 |

+

acc_t x2 = x1 * x1;

|

| 184 |

+

acc_t x3 = x2 * x1;

|

| 185 |

+

scalar_t poly_norm_result =

|

| 186 |

+

x1 * w2_inv_std1 + x2 * w1_inv_std2 + x3 * w0_inv_std3 + bias_reg;

|

| 187 |

+

out[token_idx * d + idx] = poly_norm_result * m;

|

| 188 |

+

}

|

| 189 |

+

}

|

| 190 |

+

|

| 191 |

+

template <typename scalar_t, typename acc_t, int width>

|

| 192 |

+

__global__ std::enable_if_t<(width > 0)> fused_mul_poly_norm_backward_kernel(

|

| 193 |

+

scalar_t *__restrict__ input_grad, // [..., d]

|

| 194 |

+

scalar_t *__restrict__ mul_grad, // [..., d]

|

| 195 |

+

acc_t *__restrict__ temp_weight_grad, // [..., 3]

|

| 196 |

+

acc_t *__restrict__ temp_bias_grad, // [..., 1]

|

| 197 |

+

const scalar_t *__restrict__ output_grad, // [..., d]

|

| 198 |

+

const scalar_t *__restrict__ input, // [..., d]

|

| 199 |

+

const scalar_t *__restrict__ mul, // [..., d]

|

| 200 |

+

const scalar_t *__restrict__ weight, // [3]

|

| 201 |

+

const scalar_t *__restrict__ bias, // [1]

|

| 202 |

+

const float eps, const int d) {

|

| 203 |

+

using vec_t = type_vec_t<scalar_t, width>;

|

| 204 |

+

|

| 205 |

+

const int vec_d = d / width;

|

| 206 |

+

const int64_t vec_offset = blockIdx.x * vec_d;

|

| 207 |

+

const vec_t *__restrict__ input_vec = reinterpret_cast<const vec_t *>(input);

|

| 208 |

+

const vec_t *__restrict__ mul_vec = reinterpret_cast<const vec_t *>(mul);

|

| 209 |

+

const vec_t *__restrict__ output_grad_vec =

|

| 210 |

+

reinterpret_cast<const vec_t *>(output_grad);

|

| 211 |

+

|

| 212 |

+

acc_t sum2 = 0.0f;

|

| 213 |

+

acc_t sum4 = 0.0f;

|

| 214 |

+

acc_t sum6 = 0.0f;

|

| 215 |

+

|

| 216 |

+

acc_t sum_dx1 = 0.0f;

|

| 217 |

+

acc_t sum_dx2 = 0.0f;

|

| 218 |

+

acc_t sum_dx3 = 0.0f;

|

| 219 |

+

|

| 220 |

+

for (int64_t idx = threadIdx.x; idx < vec_d; idx += blockDim.x) {

|

| 221 |

+

vec_t x_vec = input_vec[vec_offset + idx];

|

| 222 |

+

vec_t dy_fused_vec = output_grad_vec[vec_offset + idx];

|

| 223 |

+

vec_t m_vec = mul_vec[vec_offset + idx];

|

| 224 |

+

|

| 225 |

+

#pragma unroll

|

| 226 |

+

for (int i = 0; i < width; ++i) {

|

| 227 |

+

acc_t x1 = x_vec.data[i];

|

| 228 |

+

acc_t x2 = x1 * x1;

|

| 229 |

+

acc_t x3 = x2 * x1;

|

| 230 |

+

acc_t x4 = x2 * x2;

|

| 231 |

+

acc_t x6 = x3 * x3;

|

| 232 |

+

|

| 233 |

+

sum2 += x2;

|

| 234 |

+

sum4 += x4;

|

| 235 |

+

sum6 += x6;

|

| 236 |

+

|

| 237 |

+

acc_t dy = dy_fused_vec.data[i] * m_vec.data[i];

|

| 238 |

+

|

| 239 |

+

sum_dx1 += dy * x1;

|

| 240 |

+

sum_dx2 += dy * x2;

|

| 241 |

+

sum_dx3 += dy * x3;

|

| 242 |

+

}

|

| 243 |

+

}

|

| 244 |

+

|

| 245 |

+

using BlockReduce = cub::BlockReduce<float3, 1024>;

|

| 246 |

+

__shared__ typename BlockReduce::TempStorage reduceStore;

|

| 247 |

+

|

| 248 |

+

float3 thread_sums = make_float3(sum2, sum4, sum6);

|

| 249 |

+

float3 block_sums =

|

| 250 |

+

BlockReduce(reduceStore).Reduce(thread_sums, SumOp{}, blockDim.x);

|

| 251 |

+

|

| 252 |

+

sum2 = block_sums.x;

|

| 253 |

+

sum4 = block_sums.y;

|

| 254 |

+

sum6 = block_sums.z;

|

| 255 |

+

|

| 256 |

+

float3 thread_dxs = make_float3(sum_dx1, sum_dx2, sum_dx3);

|

| 257 |

+

__syncthreads();

|

| 258 |

+

float3 block_sum_dxs =

|

| 259 |

+

BlockReduce(reduceStore).Reduce(thread_dxs, SumOp{}, blockDim.x);

|

| 260 |

+

|

| 261 |

+

sum_dx1 = block_sum_dxs.x;

|

| 262 |

+

sum_dx2 = block_sum_dxs.y;

|

| 263 |

+

sum_dx3 = block_sum_dxs.z;

|

| 264 |

+

|

| 265 |

+

__shared__ acc_t s_mean2;

|

| 266 |

+

__shared__ acc_t s_mean4;

|

| 267 |

+

__shared__ acc_t s_mean6;

|

| 268 |

+

__shared__ acc_t s_sdx1;

|

| 269 |

+

__shared__ acc_t s_sdx2;

|

| 270 |

+

__shared__ acc_t s_sdx3;

|

| 271 |

+

|

| 272 |

+

const acc_t inv_d = acc_t(1) / d;

|

| 273 |

+

|

| 274 |

+

if (threadIdx.x == 0) {

|

| 275 |

+

s_mean2 = sum2 * inv_d + eps;

|

| 276 |

+

s_mean4 = sum4 * inv_d + eps;

|

| 277 |

+

s_mean6 = sum6 * inv_d + eps;

|

| 278 |

+

|

| 279 |

+

s_sdx1 = sum_dx1 * inv_d;

|

| 280 |

+

s_sdx2 = sum_dx2 * inv_d;

|

| 281 |

+

s_sdx3 = sum_dx3 * inv_d;

|

| 282 |

+

}

|

| 283 |

+

__syncthreads();

|

| 284 |

+

|

| 285 |

+

acc_t w0 = weight[0];

|

| 286 |

+

acc_t w1 = weight[1];

|

| 287 |

+

acc_t w2 = weight[2];

|

| 288 |

+

acc_t bias_reg = bias[0];

|

| 289 |

+

|

| 290 |

+

acc_t mean2 = s_mean2;

|

| 291 |

+

acc_t mean4 = s_mean4;

|

| 292 |

+

acc_t mean6 = s_mean6;

|

| 293 |

+

acc_t sdx1 = s_sdx1;

|

| 294 |

+

acc_t sdx2 = s_sdx2;

|

| 295 |

+

acc_t sdx3 = s_sdx3;

|

| 296 |

+

|

| 297 |

+

acc_t inv_std1 = rsqrtf(mean2);

|

| 298 |

+

acc_t inv_std2 = rsqrtf(mean4);

|

| 299 |

+

acc_t inv_std3 = rsqrtf(mean6);

|

| 300 |

+

|

| 301 |

+

acc_t w2_inv_std1 = inv_std1 * w2;

|

| 302 |

+

acc_t w1_inv_std2 = inv_std2 * w1;

|

| 303 |

+

acc_t w0_inv_std3 = inv_std3 * w0;

|

| 304 |

+

|

| 305 |

+

// inv_std / mean == powf(mean, -1.5)

|

| 306 |

+

acc_t c1 = w2_inv_std1 / mean2;

|

| 307 |

+

acc_t c2 = acc_t(2) * w1_inv_std2 / mean4;

|

| 308 |

+

acc_t c3 = acc_t(3) * w0_inv_std3 / mean6;

|

| 309 |

+

|

| 310 |

+

acc_t sum_dy = 0;

|

| 311 |

+

acc_t sum_dw0 = 0;

|

| 312 |

+

acc_t sum_dw1 = 0;

|

| 313 |

+

acc_t sum_dw2 = 0;

|

| 314 |

+

|

| 315 |

+

vec_t *__restrict__ input_grad_vec = reinterpret_cast<vec_t *>(input_grad);

|

| 316 |

+

vec_t *__restrict__ mul_grad_vec = reinterpret_cast<vec_t *>(mul_grad);

|

| 317 |

+

|

| 318 |

+

for (int64_t idx = threadIdx.x; idx < vec_d; idx += blockDim.x) {

|

| 319 |

+

vec_t x_vec = input_vec[vec_offset + idx];

|

| 320 |

+

vec_t dy_fused_vec = output_grad_vec[vec_offset + idx];

|

| 321 |

+

vec_t m_vec = mul_vec[vec_offset + idx];

|

| 322 |

+

vec_t dx_vec;

|

| 323 |

+

vec_t dm_vec;

|

| 324 |

+

|

| 325 |

+

#pragma unroll

|

| 326 |

+

for (int i = 0; i < width; ++i) {

|

| 327 |

+

acc_t x1 = x_vec.data[i];

|

| 328 |

+

acc_t x2 = x1 * x1;

|

| 329 |

+

acc_t x3 = x2 * x1;

|

| 330 |

+

acc_t dy = dy_fused_vec.data[i] * m_vec.data[i];

|

| 331 |

+

|

| 332 |

+

// For register optimization, the order of the following logic matters.

|

| 333 |

+

// The input_grad related logic must be placed at the very end.

|

| 334 |

+

sum_dy += dy;

|

| 335 |

+

sum_dw0 += dy * (x3 * inv_std3);

|

| 336 |

+

sum_dw1 += dy * (x2 * inv_std2);

|

| 337 |

+

sum_dw2 += dy * (x1 * inv_std1);

|

| 338 |

+

|

| 339 |

+

if (mul_grad) {

|

| 340 |

+

scalar_t poly_norm_result =

|

| 341 |

+

x1 * w2_inv_std1 + x2 * w1_inv_std2 + x3 * w0_inv_std3 + bias_reg;

|

| 342 |

+

dm_vec.data[i] = poly_norm_result * dy_fused_vec.data[i];

|

| 343 |

+

}

|

| 344 |

+

|

| 345 |

+

if (input_grad) {

|

| 346 |

+

acc_t dx3 = c3 * x2 * (dy * mean6 - x3 * sdx3);

|

| 347 |

+

acc_t dx2 = c2 * x1 * (dy * mean4 - x2 * sdx2);

|

| 348 |

+

acc_t dx1 = c1 * (dy * mean2 - x1 * sdx1);

|

| 349 |

+

dx_vec.data[i] = dx1 + dx2 + dx3;

|

| 350 |

+

}

|

| 351 |

+

}

|

| 352 |

+

|

| 353 |

+

if (input_grad) {

|

| 354 |

+

input_grad_vec[vec_offset + idx] = dx_vec;

|

| 355 |

+

}

|

| 356 |

+

if (mul_grad) {

|

| 357 |

+

mul_grad_vec[vec_offset + idx] = dm_vec;

|

| 358 |

+

}

|

| 359 |

+

}

|

| 360 |

+

|

| 361 |

+

using BlockReduce4 = cub::BlockReduce<float4, 1024>;

|

| 362 |

+

__shared__ typename BlockReduce4::TempStorage reduceStore4;

|

| 363 |

+

|

| 364 |

+

float4 thread_sum_ds = make_float4(sum_dy, sum_dw0, sum_dw1, sum_dw2);

|

| 365 |

+

float4 block_sum_ds =

|

| 366 |

+

BlockReduce4(reduceStore4).Reduce(thread_sum_ds, SumOp4{}, blockDim.x);

|

| 367 |

+

|

| 368 |

+

sum_dy = block_sum_ds.x;

|

| 369 |

+

sum_dw0 = block_sum_ds.y;

|

| 370 |

+

sum_dw1 = block_sum_ds.z;

|

| 371 |

+

sum_dw2 = block_sum_ds.w;

|

| 372 |

+

|

| 373 |

+

if (threadIdx.x == 0) {

|

| 374 |

+

temp_bias_grad[blockIdx.x] = sum_dy;

|

| 375 |

+

temp_weight_grad[blockIdx.x * 3 + 0] = sum_dw0;

|

| 376 |

+

temp_weight_grad[blockIdx.x * 3 + 1] = sum_dw1;

|

| 377 |

+

temp_weight_grad[blockIdx.x * 3 + 2] = sum_dw2;

|

| 378 |

+

}

|

| 379 |

+

}

|

| 380 |

+

|

| 381 |

+

template <typename scalar_t, typename acc_t, int width>

|

| 382 |

+

__global__ std::enable_if_t<(width == 0)> fused_mul_poly_norm_backward_kernel(

|

| 383 |

+

scalar_t *__restrict__ input_grad, // [..., d]

|

| 384 |

+

scalar_t *__restrict__ mul_grad, // [..., d]

|

| 385 |

+

acc_t *__restrict__ temp_weight_grad, // [..., 3]

|

| 386 |

+

acc_t *__restrict__ temp_bias_grad, // [..., 1]

|

| 387 |

+

const scalar_t *__restrict__ output_grad, // [..., d]

|

| 388 |

+

const scalar_t *__restrict__ input, // [..., d]

|

| 389 |

+

const scalar_t *__restrict__ mul, // [..., d]

|

| 390 |

+

const scalar_t *__restrict__ weight, // [3]

|

| 391 |

+

const scalar_t *__restrict__ bias, // [1]

|

| 392 |

+

const float eps, const int d) {

|

| 393 |

+

const int64_t token_idx = blockIdx.x;

|

| 394 |

+

|

| 395 |

+

acc_t sum2 = 0.0f;

|

| 396 |

+

acc_t sum4 = 0.0f;

|

| 397 |

+

acc_t sum6 = 0.0f;

|

| 398 |

+

|

| 399 |

+

acc_t sum_dx1 = 0.0f;

|

| 400 |

+

acc_t sum_dx2 = 0.0f;

|

| 401 |

+

acc_t sum_dx3 = 0.0f;

|

| 402 |

+

|

| 403 |

+

for (int64_t idx = threadIdx.x; idx < d; idx += blockDim.x) {

|

| 404 |

+

acc_t dy = output_grad[token_idx * d + idx] * mul[token_idx * d + idx];

|

| 405 |

+

|

| 406 |

+

acc_t x1 = input[token_idx * d + idx];

|

| 407 |

+

acc_t x2 = x1 * x1;

|

| 408 |

+

acc_t x3 = x2 * x1;

|

| 409 |

+

acc_t x4 = x2 * x2;

|

| 410 |

+

acc_t x6 = x3 * x3;

|

| 411 |

+

|

| 412 |

+

sum2 += x2;

|

| 413 |

+

sum4 += x4;

|

| 414 |

+

sum6 += x6;

|

| 415 |

+

|

| 416 |

+

sum_dx1 += dy * x1;

|

| 417 |

+

sum_dx2 += dy * x2;

|

| 418 |

+

sum_dx3 += dy * x3;

|

| 419 |

+

}

|

| 420 |

+

|

| 421 |

+

using BlockReduce = cub::BlockReduce<float3, 1024>;

|

| 422 |

+

__shared__ typename BlockReduce::TempStorage reduceStore;

|

| 423 |

+

|

| 424 |

+