---

license: apache-2.0

pipeline_tag: image-text-to-text

---

<a href="https://chat.qwenlm.ai/" target="_blank" style="margin: 2px;">

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

</a>

# Qwen3-VL-4B-Thinking-FP8

> This repository contains an FP8-quantized version of the [Qwen3-VL-4B-Thinking](https://huggingface.co/Qwen/Qwen3-VL-4B-Thinking) model. The quantization method is fine-grained FP8 quantization with a block size of 128, and its performance metrics are nearly identical to those of the original BF16 model. Enjoy!

Meet Qwen3-VL — the most powerful vision-language model in the Qwen series to date.

This generation delivers comprehensive upgrades across the board: superior text understanding & generation, deeper visual perception & reasoning, extended context length, enhanced spatial and video dynamics comprehension, and stronger agent interaction capabilities.

Available in Dense and MoE architectures that scale from edge to cloud, with Instruct and reasoning‑enhanced Thinking editions for flexible, on‑demand deployment.

#### Key Enhancements:

* **Visual Agent**: Operates PC/mobile GUIs—recognizes elements, understands functions, invokes tools, completes tasks.

* **Visual Coding Boost**: Generates Draw.io/HTML/CSS/JS from images/videos.

* **Advanced Spatial Perception**: Judges object positions, viewpoints, and occlusions; provides stronger 2D grounding and enables 3D grounding for spatial reasoning and embodied AI.

* **Long Context & Video Understanding**: Native 256K context, expandable to 1M; handles books and hours-long video with full recall and second-level indexing.

* **Enhanced Multimodal Reasoning**: Excels in STEM/Math—causal analysis and logical, evidence-based answers.

* **Upgraded Visual Recognition**: Broader, higher-quality pretraining lets the model “recognize everything”: celebrities, anime, products, landmarks, flora/fauna, etc.

* **Expanded OCR**: Supports 32 languages (up from 19); robust in low light, blur, and tilt; better with rare/ancient characters and jargon; improved long-document structure parsing.

* **Text Understanding on par with pure LLMs**: Seamless text–vision fusion for lossless, unified comprehension.

#### Model Architecture Updates:

<p align="center">

<img src="https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-VL/qwen3vl_arc.jpg" width="80%"/>

</p>

1. **Interleaved-MRoPE**: Full‑frequency allocation over time, width, and height via robust positional embeddings, enhancing long‑horizon video reasoning.

2. **DeepStack**: Fuses multi‑level ViT features to capture fine‑grained details and sharpen image–text alignment.

3. **Text–Timestamp Alignment**: Moves beyond T‑RoPE to precise, timestamp‑grounded event localization for stronger video temporal modeling.

This is the weight repository for Qwen3-VL-4B-Thinking-FP8.
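
For readers unfamiliar with block-wise quantization, the following is a minimal sketch of how per-block scales might be computed for the fine-grained FP8 scheme with block size 128 described at the top of this card. It is illustrative only and is not the code used to produce these weights. It assumes square weight tiles and the E4M3 format (largest finite value 448); this card states only the block size. The function names are hypothetical, and the final rounding to 8-bit values is omitted.

```python
FP8_E4M3_MAX = 448.0  # largest finite E4M3 value (format choice is an assumption)


def block_scales(weight, block=128):
    """Return one scale per (block x block) tile of a 2-D list of floats."""
    rows, cols = len(weight), len(weight[0])
    scales = []
    for r0 in range(0, rows, block):
        row_scales = []
        for c0 in range(0, cols, block):
            absmax = max(
                abs(weight[r][c])
                for r in range(r0, min(r0 + block, rows))
                for c in range(c0, min(c0 + block, cols))
            )
            # Guard against all-zero tiles so the scale is never zero.
            row_scales.append(max(absmax, 1e-12) / FP8_E4M3_MAX)
        scales.append(row_scales)
    return scales


def scale_into_fp8_range(weight, scales, block=128):
    """Divide each element by its tile's scale (rounding to FP8 is omitted)."""
    return [
        [value / scales[r // block][c // block] for c, value in enumerate(row)]
        for r, row in enumerate(weight)
    ]


if __name__ == "__main__":
    # A tiny 4x4 matrix with block=2 stands in for real 128-sized tiles.
    w = [
        [1.0, -2.0, 0.5, 0.25],
        [4.0, 3.0, -0.125, 0.0625],
        [0.0, 0.0, 8.0, -16.0],
        [0.0, 0.0, 1.0, 2.0],
    ]
    s = block_scales(w, block=2)
    print(s)
    print(scale_into_fp8_range(w, s, block=2))
```

Because each tile carries its own scale, one outlier only affects the precision of its own tile rather than the whole tensor, which is the usual motivation for fine-grained schemes.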

---

## Model Performance

**Multimodal performance**

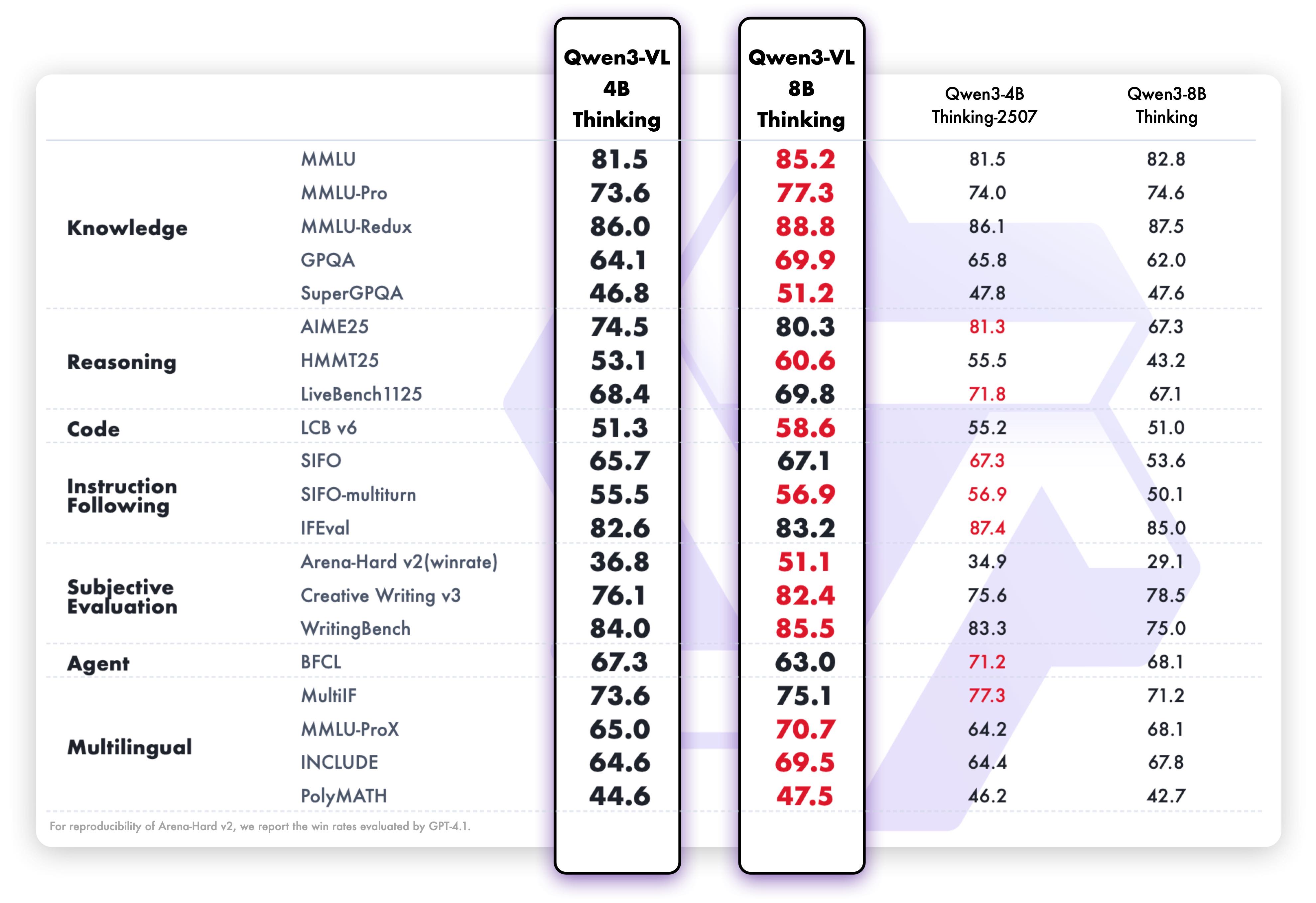

**Pure text performance**

## Quickstart

Currently, 🤗 Transformers does not support loading these weights directly. Stay tuned!

We recommend deploying the model using vLLM or SGLang; example code for both is provided below. For details on the runtime environment and deployment, please refer to the [deployment section of the Qwen3-VL README](https://github.com/QwenLM/Qwen3-VL?tab=readme-ov-file#deployment).

### vLLM Inference

Here we provide a code snippet demonstrating how to use vLLM to run inference with Qwen3-VL locally. For more details on efficient deployment with vLLM, please refer to the [community deployment guide](https://docs.vllm.ai/projects/recipes/en/latest/Qwen/Qwen3-VL.html).

```python
# -*- coding: utf-8 -*-
import torch
from qwen_vl_utils import process_vision_info
from transformers import AutoProcessor
from vllm import LLM, SamplingParams

import os
os.environ['VLLM_WORKER_MULTIPROC_METHOD'] = 'spawn'


def prepare_inputs_for_vllm(messages, processor):
    # Render the chat messages into a single prompt string.
    text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    # qwen_vl_utils 0.0.14+ required
    image_inputs, video_inputs, video_kwargs = process_vision_info(
        messages,
        image_patch_size=processor.image_processor.patch_size,
        return_video_kwargs=True,
        return_video_metadata=True
    )
    print(f"video_kwargs: {video_kwargs}")

    # Only attach the modalities that are actually present.
    mm_data = {}
    if image_inputs is not None:
        mm_data['image'] = image_inputs
    if video_inputs is not None:
        mm_data['video'] = video_inputs

    return {
        'prompt': text,
        'multi_modal_data': mm_data,
        'mm_processor_kwargs': video_kwargs
    }


if __name__ == '__main__':
    # Video example (uncomment to use instead of the image example below):
    # messages = [
    #     {
    #         "role": "user",
    #         "content": [
    #             {
    #                 "type": "video",
    #                 "video": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-VL/space_woaudio.mp4",
    #             },
    #             {"type": "text", "text": "How long is this video?"},
    #         ],
    #     }
    # ]

    messages = [
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "image": "https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-VL/receipt.png",
                },
                {"type": "text", "text": "Read all the text in the image."},
            ],
        }
    ]

    # TODO: change to your own checkpoint path
    checkpoint_path = "Qwen/Qwen3-VL-4B-Thinking-FP8"
    processor = AutoProcessor.from_pretrained(checkpoint_path)
    inputs = [prepare_inputs_for_vllm(message, processor) for message in [messages]]

    llm = LLM(
        model=checkpoint_path,
        trust_remote_code=True,
        gpu_memory_utilization=0.70,
        enforce_eager=False,
        tensor_parallel_size=torch.cuda.device_count(),
        seed=0
    )

    sampling_params = SamplingParams(
        temperature=0,
        max_tokens=1024,
        top_k=-1,
        stop_token_ids=[],
    )

    # Show each prompt before generation.
    for i, input_ in enumerate(inputs):
        print()
        print('=' * 40)
        print(f"Inputs[{i}]: {input_['prompt']=!r}")
    print('\n' + '>' * 40)

    outputs = llm.generate(inputs, sampling_params=sampling_params)
    for i, output in enumerate(outputs):
        generated_text = output.outputs[0].text
        print()
        print('=' * 40)
        print(f"Generated text: {generated_text!r}")
```
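
If the model is instead served behind an OpenAI-compatible HTTP endpoint (which vLLM can expose), a request might be assembled as in the sketch below. The helper name `build_chat_request` is hypothetical, and the server address in the comment (`http://localhost:8000/v1/chat/completions`) is an assumption that depends on how the server was launched; the snippet only builds and prints the payload and does not contact any server.

```python
import json


def build_chat_request(image_url, question,
                       model="Qwen/Qwen3-VL-4B-Thinking-FP8",
                       max_tokens=1024):
    """Build a Chat Completions payload with one image part and one text part."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": question},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    payload = build_chat_request(
        "https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-VL/receipt.png",
        "Read all the text in the image.",
    )
    # POST this JSON to the server's chat completions route, for example
    # http://localhost:8000/v1/chat/completions (assumed address).
    print(json.dumps(payload, indent=2))
```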

### SGLang Inference

Here we provide a code snippet demonstrating how to use SGLang to run inference with Qwen3-VL locally.

```python
import time

import torch  # needed for torch.cuda.device_count() below
from sglang import Engine
from qwen_vl_utils import process_vision_info
from transformers import AutoProcessor

if __name__ == "__main__":
    # TODO: change to your own checkpoint path
    checkpoint_path = "Qwen/Qwen3-VL-4B-Thinking-FP8"
    processor = AutoProcessor.from_pretrained(checkpoint_path)

    messages = [
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "image": "https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-VL/receipt.png",
                },
                {"type": "text", "text": "Read all the text in the image."},
            ],
        }
    ]

    # Render the chat messages into a single prompt string.
    text = processor.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True
    )

    image_inputs, _ = process_vision_info(messages, image_patch_size=processor.image_processor.patch_size)

    llm = Engine(
        model_path=checkpoint_path,
        enable_multimodal=True,
        mem_fraction_static=0.8,
        tp_size=torch.cuda.device_count(),
        attention_backend="fa3"
    )

    start = time.time()
    sampling_params = {"max_new_tokens": 1024}
    response = llm.generate(prompt=text, image_data=image_inputs, sampling_params=sampling_params)
    print(f"Response costs: {time.time() - start:.2f}s")
    print(f"Generated text: {response['text']}")
```
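
Since this is a Thinking model, the generated text usually contains the model's reasoning before its final answer. The sketch below shows one way to separate the two. It assumes the reasoning ends with a `</think>` tag and that the opening `<think>` tag may or may not appear in the generated text; this card does not specify the output format, so check it against real output first. The helper name `split_thinking` is hypothetical.

```python
def split_thinking(generated_text, end_tag="</think>"):
    """Split generated text into (reasoning, answer) at the first end tag.

    If the end tag is absent, the whole text is treated as the answer.
    """
    head, sep, tail = generated_text.partition(end_tag)
    if not sep:
        return "", generated_text.strip()
    # Drop a leading opening tag if the output happens to include one.
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


if __name__ == "__main__":
    sample = "The receipt lists three items.\n</think>\n\nTotal: 12.50"
    reasoning, answer = split_thinking(sample)
    print(f"Reasoning: {reasoning!r}")
    print(f"Answer: {answer!r}")
```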

### Generation Hyperparameters

#### VL

```bash
export greedy='false'
export top_p=0.95
export top_k=20
export repetition_penalty=1.0
export presence_penalty=0.0
export temperature=1.0
export out_seq_length=40960
```

#### Text

```bash
export greedy='false'
export top_p=0.95
export top_k=20
export repetition_penalty=1.0
export presence_penalty=1.5
export temperature=1.0
export out_seq_length=32768  # for AIME, LCB, and GPQA, 81920 is recommended
```
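
The settings above can be carried into Python as plain keyword dictionaries, as in this sketch. Mapping `out_seq_length` to a `max_tokens` key is an assumption about how an inference engine names its output-length limit (vLLM's `SamplingParams` uses `max_tokens`; SGLang uses `max_new_tokens`). The helper `sampling_kwargs` and its `long_reasoning` flag are hypothetical names.

```python
# Values copied from the VL and Text settings above; greedy='false' means
# sampling is enabled, so temperature, top_p, and top_k take effect.
VL_SAMPLING = {
    "temperature": 1.0,
    "top_p": 0.95,
    "top_k": 20,
    "repetition_penalty": 1.0,
    "presence_penalty": 0.0,
    "max_tokens": 40960,
}

TEXT_SAMPLING = {
    "temperature": 1.0,
    "top_p": 0.95,
    "top_k": 20,
    "repetition_penalty": 1.0,
    "presence_penalty": 1.5,
    "max_tokens": 32768,
}


def sampling_kwargs(task, long_reasoning=False):
    """Return a copy of the settings for task 'vl' or 'text'."""
    presets = {"vl": VL_SAMPLING, "text": TEXT_SAMPLING}
    if task not in presets:
        raise ValueError(f"unknown task: {task!r}")
    kwargs = dict(presets[task])
    if task == "text" and long_reasoning:
        # Longer budget recommended above for AIME, LCB, and GPQA.
        kwargs["max_tokens"] = 81920
    return kwargs


if __name__ == "__main__":
    print(sampling_kwargs("vl"))
    print(sampling_kwargs("text", long_reasoning=True))
```

With vLLM, the result could be passed as `SamplingParams(**sampling_kwargs("vl"))`.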

## Citation

If you find our work helpful, feel free to cite us.

```
@misc{qwen3technicalreport,
      title={Qwen3 Technical Report},
      author={Qwen Team},
      year={2025},
      eprint={2505.09388},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2505.09388},
}

@article{Qwen2.5-VL,
  title={Qwen2.5-VL Technical Report},
  author={Bai, Shuai and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Song, Sibo and Dang, Kai and Wang, Peng and Wang, Shijie and Tang, Jun and Zhong, Humen and Zhu, Yuanzhi and Yang, Mingkun and Li, Zhaohai and Wan, Jianqiang and Wang, Pengfei and Ding, Wei and Fu, Zheren and Xu, Yiheng and Ye, Jiabo and Zhang, Xi and Xie, Tianbao and Cheng, Zesen and Zhang, Hang and Yang, Zhibo and Xu, Haiyang and Lin, Junyang},
  journal={arXiv preprint arXiv:2502.13923},
  year={2025}
}

@article{Qwen2VL,
  title={Qwen2-VL: Enhancing Vision-Language Model's Perception of the World at Any Resolution},
  author={Wang, Peng and Bai, Shuai and Tan, Sinan and Wang, Shijie and Fan, Zhihao and Bai, Jinze and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Fan, Yang and Dang, Kai and Du, Mengfei and Ren, Xuancheng and Men, Rui and Liu, Dayiheng and Zhou, Chang and Zhou, Jingren and Lin, Junyang},
  journal={arXiv preprint arXiv:2409.12191},
  year={2024}
}

@article{Qwen-VL,
  title={Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond},
  author={Bai, Jinze and Bai, Shuai and Yang, Shusheng and Wang, Shijie and Tan, Sinan and Wang, Peng and Lin, Junyang and Zhou, Chang and Zhou, Jingren},
  journal={arXiv preprint arXiv:2308.12966},
  year={2023}
}
```