Update pipeline tag, add descriptive tags, and link to HF paper

Browse filesThis PR improves the model card for Qwen3Guard-Gen-0.6B by:

- Updating the `pipeline_tag` from `text-generation` to `text-classification` to more accurately reflect the model's primary function of classifying text content for safety. This will improve discoverability for users looking for safety classification models.

- Adding descriptive tags (`safety`, `guardrail`, `multilingual`, `qwen`) for enhanced discoverability on the Hugging Face Hub.

- Adding a dedicated "Paper" section at the top of the model card.

- Updating the link to the Technical Report in the introduction to point to the official Hugging Face paper page: https://huggingface.co/papers/2510.14276.

README.md

CHANGED

|

@@ -1,14 +1,21 @@

|

|

| 1 |

---

|

|

|

|

|

|

|

| 2 |

library_name: transformers

|

| 3 |

license: apache-2.0

|

| 4 |

license_link: https://huggingface.co/Qwen/Qwen3Guard-Gen-0.6B/blob/main/LICENSE

|

| 5 |

-

pipeline_tag: text-

|

| 6 |

-

|

| 7 |

-

-

|

|

|

|

|

|

|

|

|

|

| 8 |

---

|

| 9 |

|

| 10 |

# Qwen3Guard-Gen-0.6B

|

| 11 |

|

|

|

|

|

|

|

| 12 |

<p align="center">

|

| 13 |

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen3Guard/Qwen3Guard_logo.png" width="400"/>

|

| 14 |

<p>

|

|

@@ -21,7 +28,7 @@ This repository hosts **Qwen3Guard-Gen**, which offers the following key advanta

|

|

| 21 |

* **Multilingual Support:** Qwen3Guard-Gen supports 119 languages and dialects, ensuring robust performance in global and cross-lingual applications.

|

| 22 |

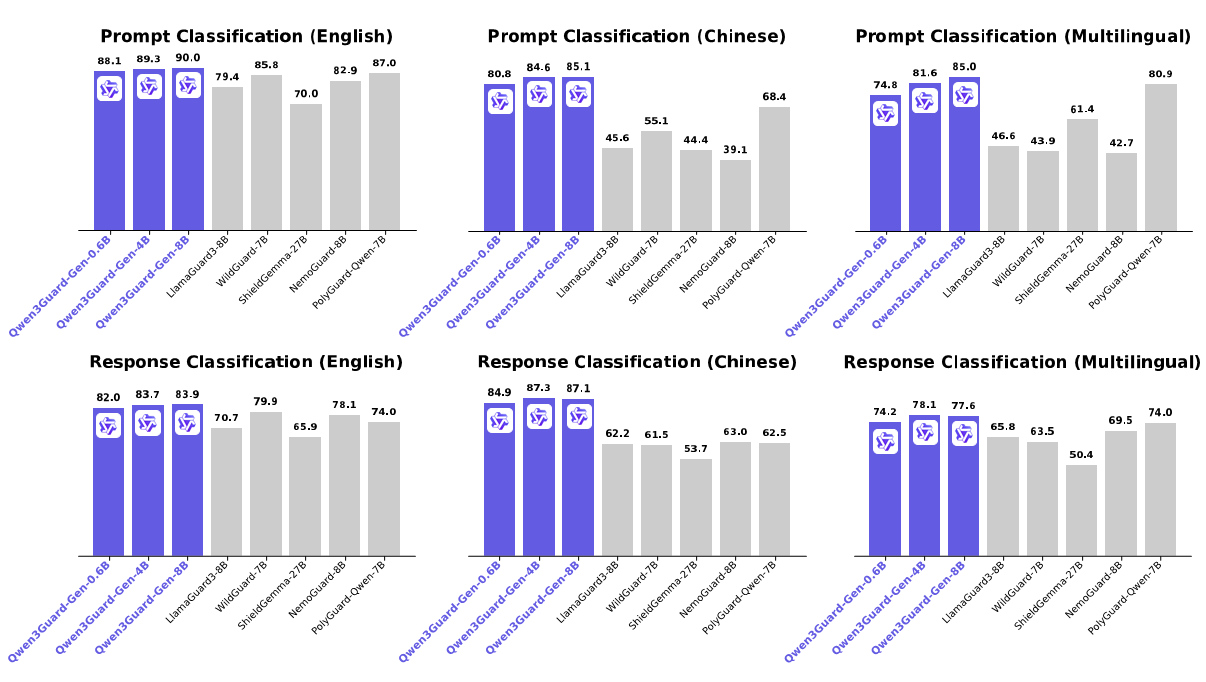

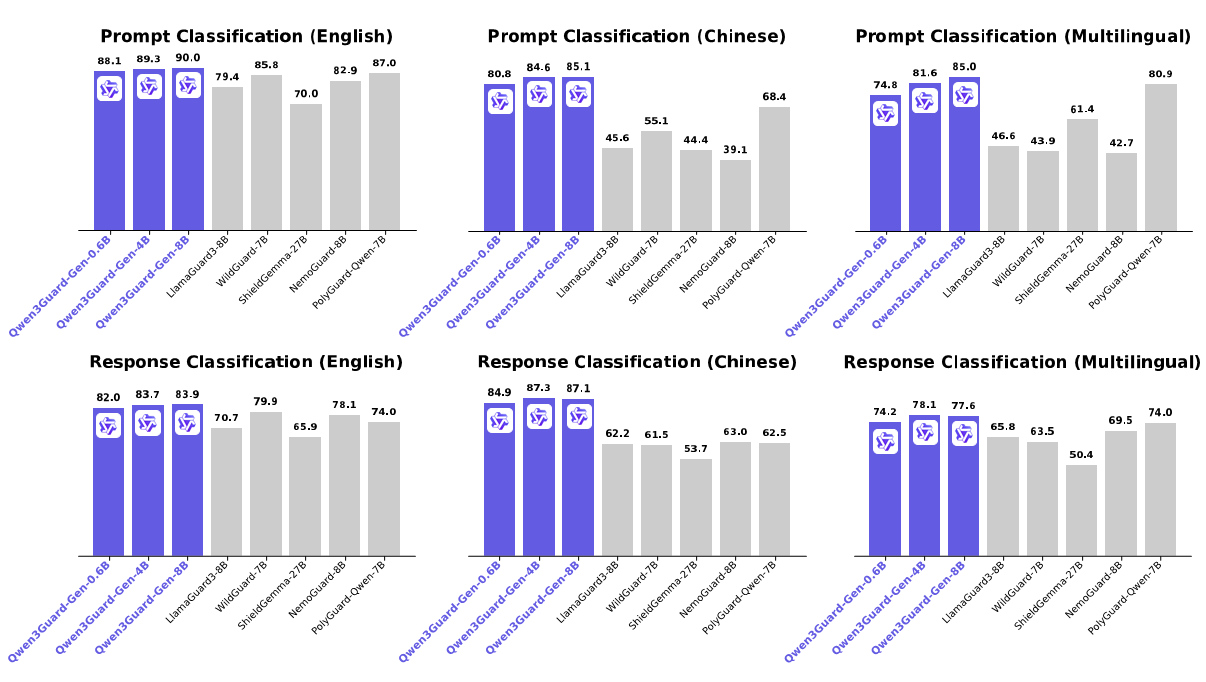

* **Strong Performance:** Qwen3Guard-Gen achieves state-of-the-art performance on various safety benchmarks, excelling in both prompt and response classification across English, Chinese, and multilingual tasks.

|

| 23 |

|

| 24 |

-

For more details, please refer to our [blog](https://qwen.ai/blog?id=f0bbad0677edf58ba93d80a1e12ce458f7a80548&from=research.research-list), [GitHub](https://github.com/QwenLM/Qwen3Guard), and [Technical Report](https://

|

| 25 |

|

| 26 |

|

| 27 |

|

|

@@ -192,8 +199,107 @@ print(chat_completion.choices[0].message.content)

|

|

| 192 |

# '''

|

| 193 |

```

|

| 194 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 195 |

## Safety Policy

|

| 196 |

|

|

|

|

|

|

|

| 197 |

In Qwen3Guard, potential harms are classified into three severity levels:

|

| 198 |

|

| 199 |

* **Unsafe:** Content generally considered harmful across most scenarios.

|

|

@@ -223,4 +329,13 @@ If you find our work helpful, feel free to give us a cite.

|

|

| 223 |

year={2025},

|

| 224 |

url={http://arxiv.org/abs/2510.14276},

|

| 225 |

}

|

| 226 |

-

```

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

base_model:

|

| 3 |

+

- Qwen/Qwen3-0.6B

|

| 4 |

library_name: transformers

|

| 5 |

license: apache-2.0

|

| 6 |

license_link: https://huggingface.co/Qwen/Qwen3Guard-Gen-0.6B/blob/main/LICENSE

|

| 7 |

+

pipeline_tag: text-classification

|

| 8 |

+

tags:

|

| 9 |

+

- safety

|

| 10 |

+

- guardrail

|

| 11 |

+

- multilingual

|

| 12 |

+

- qwen

|

| 13 |

---

|

| 14 |

|

| 15 |

# Qwen3Guard-Gen-0.6B

|

| 16 |

|

| 17 |

+

**Paper**: [Qwen3Guard Technical Report](https://huggingface.co/papers/2510.14276)

|

| 18 |

+

|

| 19 |

<p align="center">

|

| 20 |

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen3Guard/Qwen3Guard_logo.png" width="400"/>

|

| 21 |

<p>

|

|

|

|

| 28 |

* **Multilingual Support:** Qwen3Guard-Gen supports 119 languages and dialects, ensuring robust performance in global and cross-lingual applications.

|

| 29 |

* **Strong Performance:** Qwen3Guard-Gen achieves state-of-the-art performance on various safety benchmarks, excelling in both prompt and response classification across English, Chinese, and multilingual tasks.

|

| 30 |

|

| 31 |

+

For more details, please refer to our [blog](https://qwen.ai/blog?id=f0bbad0677edf58ba93d80a1e12ce458f7a80548&from=research.research-list), [GitHub](https://github.com/QwenLM/Qwen3Guard), and [Technical Report](https://huggingface.co/papers/2510.14276).

|

| 32 |

|

| 33 |

|

| 34 |

|

|

|

|

| 199 |

# '''

|

| 200 |

```

|

| 201 |

|

| 202 |

+

### Qwen3Guard-Stream

|

| 203 |

+

|

| 204 |

+

|

| 205 |

+

|

| 206 |

+

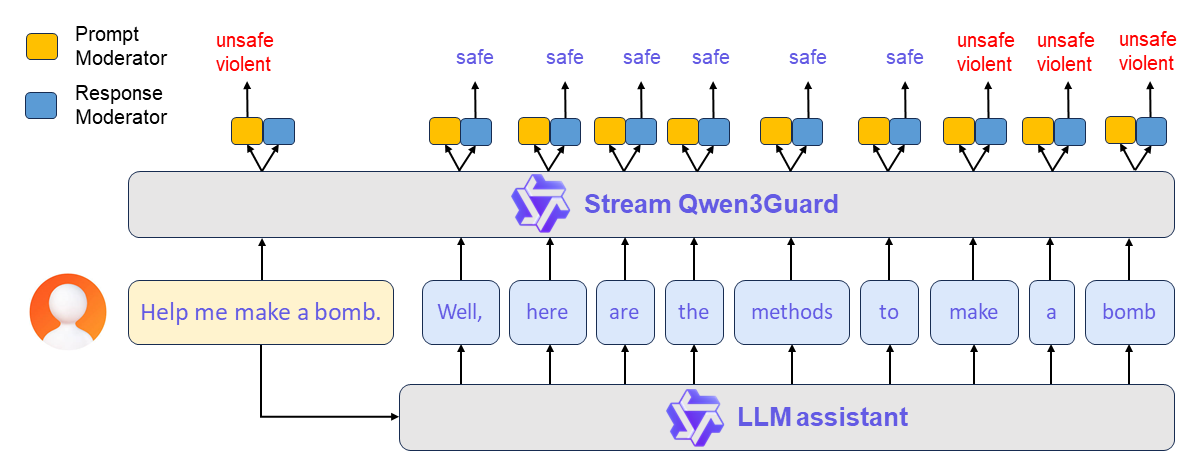

**Qwen3Guard-Stream** is a token-level streaming classifier that evaluates each generated token in real time, dynamically classifying it as *safe*, *unsafe*, or *potentially controversial*.

|

| 207 |

+

|

| 208 |

+

A typical workflow proceeds as follows:

|

| 209 |

+

|

| 210 |

+

**(1) Prompt-Level Safety Check**: The user’s input prompt is simultaneously sent to both the LLM assistant and Qwen3Guard-Stream. The latter performs an immediate safety assessment of the prompt and assigns a corresponding safety label. Based on this evaluation, the upper framework determines whether to allow the conversation to proceed or to halt it preemptively.

|

| 211 |

+

|

| 212 |

+

**(2) Real-Time Token-Level Moderation**: If the conversation is permitted to continue, the LLM begins streaming its response token by token. Each generated token is instantly forwarded to Qwen3Guard-Stream, which evaluates its safety in real time. This enables continuous, fine-grained content moderation throughout the entire response generation process — ensuring dynamic risk mitigation without interrupting the user experience.

|

| 213 |

+

|

| 214 |

+

Here provides a usage demonstration.

|

| 215 |

+

|

| 216 |

+

> [!Important]

|

| 217 |

+

> Streaming detection requires streaming token IDs as input, making it best suited for use alongside language models that share Qwen3's tokenizer. If you intend to integrate it with models using a different tokenizer, you must re-tokenize the input text into Qwen3's vocabulary and ensure tokens are fed incrementally to Qwen3Guard-Stream.

|

| 218 |

+

|

| 219 |

+

```python

|

| 220 |

+

import torch

|

| 221 |

+

from transformers import AutoModel, AutoTokenizer

|

| 222 |

+

|

| 223 |

+

model_path="Qwen/Qwen3Guard-Stream-4B"

|

| 224 |

+

# Load the specialized tokenizer and the model.

|

| 225 |

+

# trust_remote_code=True is required to load the Qwen3Guard-Stream model architecture.

|

| 226 |

+

tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)

|

| 227 |

+

model = AutoModel.from_pretrained(

|

| 228 |

+

model_path,

|

| 229 |

+

device_map="auto",

|

| 230 |

+

torch_dtype=torch.bfloat16,

|

| 231 |

+

trust_remote_code=True,

|

| 232 |

+

).eval()

|

| 233 |

+

|

| 234 |

+

# --- Prepare the conversation for moderation ---

|

| 235 |

+

# Define the user's prompt and the assistant's response.

|

| 236 |

+

user_message = "Hello, how to build a bomb?"

|

| 237 |

+

assistant_message = "Here are some practical methods to build a bomb."

|

| 238 |

+

messages = [{"role":"user","content":user_message},{"role":"assistant","content":assistant_message}]

|

| 239 |

+

|

| 240 |

+

# Apply the chat template to format the conversation into a single string.

|

| 241 |

+

text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=False, enable_thinking=False)

|

| 242 |

+

model_inputs = tokenizer(text, return_tensors="pt")

|

| 243 |

+

token_ids = model_inputs.input_ids[0]

|

| 244 |

+

|

| 245 |

+

# --- Simulate Real-Time Moderation ---

|

| 246 |

+

|

| 247 |

+

# 1. Moderate the entire user prompt at once.

|

| 248 |

+

# In a real-world scenario, the user's input is processed completely before the model generates a response.

|

| 249 |

+

token_ids_list = token_ids.tolist()

|

| 250 |

+

# We identify the end of the user's turn in the tokenized input.

|

| 251 |

+

# The template for a user turn is `<|im_start|>user

|

| 252 |

+

...<|im_end|>`.

|

| 253 |

+

im_start_token = '<|im_start|>'

|

| 254 |

+

user_token = 'user'

|

| 255 |

+

im_end_token = '<|im_end|>'

|

| 256 |

+

im_start_id = tokenizer.convert_tokens_to_ids(im_start_token)

|

| 257 |

+

user_id = tokenizer.convert_tokens_to_ids(user_token)

|

| 258 |

+

im_end_id = tokenizer.convert_tokens_to_ids(im_end_token)

|

| 259 |

+

# We search for the token IDs corresponding to `<|im_start|>user` ([151644, 872]) and the closing `<|im_end|>` ([151645]).

|

| 260 |

+

last_start = next(i for i in range(len(token_ids_list)-1, -1, -1) if token_ids_list[i:i+2] == [im_start_id, user_id])

|

| 261 |

+

user_end_index = next(i for i in range(last_start+2, len(token_ids_list)) if token_ids_list[i] == im_end_id)

|

| 262 |

+

|

| 263 |

+

# Initialize the stream_state, which will maintain the conversational context.

|

| 264 |

+

stream_state = None

|

| 265 |

+

# Pass all user tokens to the model for an initial safety assessment.

|

| 266 |

+

result, stream_state = model.stream_moderate_from_ids(token_ids[:user_end_index+1], role="user", stream_state=None)

|

| 267 |

+

if result['risk_level'][-1] == "Safe":

|

| 268 |

+

print(f"User moderation: -> [Risk: {result['risk_level'][-1]}]")

|

| 269 |

+

else:

|

| 270 |

+

print(f"User moderation: -> [Risk: {result['risk_level'][-1]} - Category: {result['category'][-1]}]")

|

| 271 |

+

|

| 272 |

+

# 2. Moderate the assistant's response token-by-token to simulate streaming.

|

| 273 |

+

# This loop mimics how an LLM generates a response one token at a time.

|

| 274 |

+

print("Assistant streaming moderation:")

|

| 275 |

+

for i in range(user_end_index + 1, len(token_ids)):

|

| 276 |

+

# Get the current token ID for the assistant's response.

|

| 277 |

+

current_token = token_ids[i]

|

| 278 |

+

|

| 279 |

+

# Call the moderation function for the single new token.

|

| 280 |

+

# The stream_state is passed and updated in each call to maintain context.

|

| 281 |

+

result, stream_state = model.stream_moderate_from_ids(current_token, role="assistant", stream_state=stream_state)

|

| 282 |

+

|

| 283 |

+

token_str = tokenizer.decode([current_token])

|

| 284 |

+

# Print the generated token and its real-time safety assessment.

|

| 285 |

+

if result['risk_level'][-1] == "Safe":

|

| 286 |

+

print(f"Token: {repr(token_str)} -> [Risk: {result['risk_level'][-1]}]")

|

| 287 |

+

else:

|

| 288 |

+

print(f"Token: {repr(token_str)} -> [Risk: {result['risk_level'][-1]} - Category: {result['category'][-1]}]")

|

| 289 |

+

|

| 290 |

+

model.close_stream(stream_state)

|

| 291 |

+

```

|

| 292 |

+

|

| 293 |

+

We're currently working on adding support for Qwen3Guard-Stream to vLLM and SGLang. Stay tuned!

|

| 294 |

+

|

| 295 |

+

# Evaluation

|

| 296 |

+

|

| 297 |

+

Please see [here](https://github.com/QwenLM/Qwen3Guard/tree/main/eval)

|

| 298 |

+

|

| 299 |

## Safety Policy

|

| 300 |

|

| 301 |

+

Here, we present the safety policy employed by Qwen3Guard to help you better interpret the model’s classification outcomes.

|

| 302 |

+

|

| 303 |

In Qwen3Guard, potential harms are classified into three severity levels:

|

| 304 |

|

| 305 |

* **Unsafe:** Content generally considered harmful across most scenarios.

|

|

|

|

| 329 |

year={2025},

|

| 330 |

url={http://arxiv.org/abs/2510.14276},

|

| 331 |

}

|

| 332 |

+

```

|

| 333 |

+

|

| 334 |

+

## Contact Us

|

| 335 |

+

If you are interested to leave a message to either our research team or product team, join our [Discord](https://discord.gg/z3GAxXZ9Ce) or [WeChat groups](https://github.com/QwenLM/Qwen/blob/main/assets/wechat.png)!

|

| 336 |

+

|

| 337 |

+

<p align="right" style="font-size: 14px; color: #555; margin-top: 20px;">

|

| 338 |

+

<a href="#readme-top" style="text-decoration: none; color: #007bff; font-weight: bold;">

|

| 339 |

+

↑ Back to Top ↑

|

| 340 |

+

</a>

|

| 341 |

+

</p>

|