Modalities: Image
Languages: English
Size: 10K<n<100K
Tags: computer-vision, 3d-reconstruction, subsurface-scattering, gaussian-splatting, inverse-rendering, photometric-stereo

feat: Readme

- README.md +150 -0
- other/dataset.png +3 -0
- other/preprocessing.png +3 -0

README.md ADDED

@@ -0,0 +1,150 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

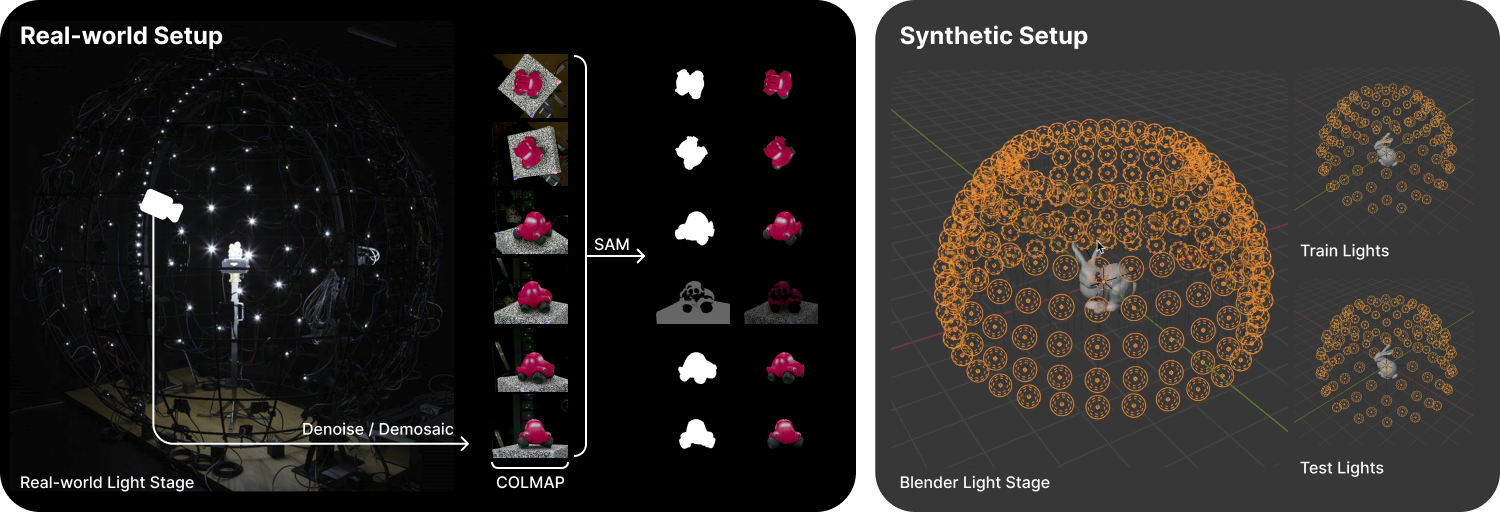

# 🕯️ Light-Stage OLAT Subsurface-Scattering Dataset

*Companion data for the paper **"Subsurface Scattering for 3D Gaussian Splatting"***

> **This README documents *only the dataset*.**
> A separate repo covers the training / rendering **code**: <https://github.com/cgtuebingen/SSS-GS>

<p align="center">
<img src="other/dataset.png" width="80%" alt="Dataset overview"/>
</p>

## Overview

Subsurface scattering (SSS) gives translucent materials (wax, soap, jade, skin) their distinctive soft glow. Our paper introduces **SSS-GS**, the first 3D Gaussian-Splatting framework that *jointly* reconstructs shape, BRDF and volumetric SSS while running at real-time framerates. Training such a model requires dense **multi-view ⇄ multi-light OLAT** (one-light-at-a-time) data.

This dataset delivers exactly that:

* **25 objects** – 20 captured on a physical light-stage, 5 rendered in a synthetic stage
* **> 37k images** (≈ 1 TB raw / ≈ 30 GB processed) with **known camera & light poses**
* Ready-to-use JSON transform files compatible with NeRF & 3D GS toolchains
* Processed to 800 px images + masks; **raw 16 MP capture** available on request

### Applications

* Research on SSS, inverse-rendering, radiance-field relighting, differentiable shading
* Benchmarking OLAT pipelines or light-stage calibration
* Teaching datasets for photometric 3D reconstruction

## Quick Start

```bash
# Download and extract one real-world object
curl -L https://…/real_world/candle.tar | tar -x
```
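
If you prefer to download the tar first and inspect it before extracting, the following minimal sketch lists the top-level entries of an archive using only Python's standard `tarfile` module. The function name `top_level_entries` and the local path `candle.tar` are illustrative assumptions, not part of the dataset tooling.

```python
import tarfile
from pathlib import PurePosixPath


def top_level_entries(tar_path):
    """Return the sorted top-level names stored in a tar archive."""
    with tarfile.open(tar_path) as archive:
        names = archive.getnames()
    roots = set()
    for name in names:
        # Keep only the first real path component of every member.
        parts = [p for p in PurePosixPath(name).parts if p not in (".", "/")]
        if parts:
            roots.add(parts[0])
    return sorted(roots)
```

Calling `top_level_entries("candle.tar")` on a downloaded archive should show the single `<object>/` folder if the tar follows the layout described in this README.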

## Directory Layout

```
dataset_root/
├── real_world/          # Captured objects (processed, ready to train)
│   └── <object>.tar     # Each tar = one object (≈ 4–8 GB)
└── synthetic/           # Procedurally rendered objects
    ├── <object>_full/   # full-resolution (800 px)
    └── <object>_small/  # 256 px "quick-train" version
```

### Inside a **real-world** tar
```
<object>/
├── resized/                 # θ_φ_board_i.png (≈ 800 × 650 px)
├── transforms_train.json    # (train-set only) ⇄ camera / light metadata
├── transforms_test.json     # (test-set only) ⇄ camera / light metadata
├── light_positions.json     # all θ_φ_board_i → (x,y,z)
├── exclude_list.json        # bad views (lens flare, matting error, …)
└── cam_lights_aligned.png   # sanity-check visualisation
```
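
One way to consume this layout is to load a transforms file and drop the images flagged in `exclude_list.json`. The sketch below is an assumption-laden illustration, not the loader used in the paper: it assumes `exclude_list.json` is a flat JSON array of image names (with or without `.png`), and that each frame carries parallel `file_paths` and `light_positions` lists as in the JSON schema of this README. The helper names are hypothetical.

```python
import json
from pathlib import Path


def image_key(name):
    """Basename without a trailing '.png'.

    Image names contain dots (e.g. theta_10.0_...), so Path.stem is avoided.
    """
    base = name.rsplit("/", 1)[-1]
    return base[:-4] if base.endswith(".png") else base


def drop_excluded(transforms, excluded_names):
    """Return a copy of `transforms` without the images in `excluded_names`.

    ASSUMPTION: `excluded_names` is a flat list of image names.
    """
    excluded = {image_key(name) for name in excluded_names}
    kept_frames = []
    for frame in transforms["frames"]:
        pairs = [
            (path, light)
            for path, light in zip(frame["file_paths"], frame["light_positions"])
            if image_key(path) not in excluded
        ]
        if not pairs:
            continue  # every image of this view was excluded
        new_frame = dict(frame)
        new_frame["file_paths"] = [path for path, _ in pairs]
        new_frame["light_positions"] = [light for _, light in pairs]
        kept_frames.append(new_frame)
    result = dict(transforms)
    result["frames"] = kept_frames
    return result


def load_filtered(object_dir, split="train"):
    """Read transforms_<split>.json and exclude_list.json from an object folder."""
    root = Path(object_dir)
    with open(root / f"transforms_{split}.json", encoding="utf-8") as handle:
        transforms = json.load(handle)
    with open(root / "exclude_list.json", encoding="utf-8") as handle:
        excluded_names = json.load(handle)
    return drop_excluded(transforms, excluded_names)
```

If the exclude list turns out to use a different structure (for example a dictionary keyed by view), only the construction of `excluded` needs to change.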

*Raw capture:* Full-resolution, unprocessed RGB Bayer images (~ 1 TB per object) are kept offline; contact us to arrange transfer.

### Inside a **synthetic** object folder
```
<object>_full/
├── <object>.blend           # Blender scene with 112 HDR stage lights
├── train/                   # r_<cam>_l_<light>.png (= 800 × 800 px)
├── test/                    # r_<cam>_l_<light>.png (= 800 × 800 px)
├── eval/                    # only in "_small" subsets
├── transforms_train.json    # (train-set only) ⇄ camera / light metadata
└── transforms_test.json     # (test-set only) ⇄ camera / light metadata
```

The *small* variant uses the same layout; it differs in image resolution and adds an optional `eval/` folder.

## Data Collection

### Real-World Subset

**Capture Setup:**
- **Stage**: 4 m diameter light-stage with 167 individually addressable LEDs
- **Camera**: FLIR Oryx 12 MP with 35 mm F-mount, motorized turntable & vertical rail
- **Processing**: COLMAP SfM, automatic masking (SAM + ViTMatte), resize → PNG

| Objects | Avg. Views | Lights/View | Resolution | Masks |
|---------|------------|-------------|------------|-------|
| 20 | 158 | 167 | 800×650 px | α-mattes |

<p align="center">
<img src="other/preprocessing.png" width="60%" alt="Preprocessing pipeline"/>
</p>

### Synthetic Subset

**Rendering Setup:**
- **Models**: Stanford 3D Scans and BlenderKit
- **Renderer**: Blender Cycles with spectral SSS (Principled BSDF)
- **Lights**: 112 positions (7 rings × 16), 200 test cameras on NeRF spiral path

| Variant | Images | Views × Lights | Resolution | Notes |
|---------|--------|----------------|------------|-------|
| _full | 11,200 | 100 × 112 | 800² | Filmic tonemapping |
| _small | 1,500 | 15 × 100 | 256² | Quick prototyping |
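
The image counts in the table are simply views × lights (100 × 112 = 11,200 and 15 × 100 = 1,500). As a rough sketch, a download can be checked for completeness by enumerating the expected `r_<cam>_l_<light>.png` names and reporting any that are absent. The zero-based, unpadded indices are an assumption made here for illustration; adjust `expected_names` if the actual files are numbered differently.

```python
from pathlib import Path


def expected_names(num_views, num_lights):
    """File names for a full views x lights grid.

    ASSUMPTION: camera and light indices are zero-based and unpadded.
    """
    return [
        f"r_{cam}_l_{light}.png"
        for cam in range(num_views)
        for light in range(num_lights)
    ]


def missing_images(folder, num_views, num_lights):
    """Return the expected image names that are not present in `folder`."""
    present = {path.name for path in Path(folder).glob("r_*_l_*.png")}
    return [name for name in expected_names(num_views, num_lights) if name not in present]
```

For example, `missing_images("<object>_full/train", 100, 112)` would return an empty list for a complete split, provided the counts in the table refer to that folder.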

## File & Naming Conventions
* **Real images** `theta_<θ>_phi_<φ>_board_<id>.png`
  *θ, φ* in degrees; *board* 0-195 indexes the LED PCBs.
* **Synthetic images** `r_<camera>_l_<light>.png`
* **JSON schema**
```jsonc
{
  "camera_angle_x": 0.3558,
  "frames": [{
    "file_paths": ["resized/theta_10.0_phi_0.0_board_1", …],
    "light_positions": [[x,y,z], …],   // metres, stage origin
    "transform_matrix": [[...], ...],  // 4×4 extrinsic
    "width": 800, "height": 650, "cx": 400.0, "cy": 324.5
  }]
}
```
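
To make the schema concrete, here is a minimal sketch that flattens the per-view frames into one sample per image and derives a pinhole focal length. It assumes `camera_angle_x` is the horizontal field of view in radians, as in the common NeRF Blender convention; the README does not state this explicitly, so treat it as an assumption. The function names are illustrative.

```python
import math


def focal_length_px(camera_angle_x, width):
    """Focal length in pixels from a horizontal field of view.

    ASSUMPTION: `camera_angle_x` is the horizontal FoV in radians.
    """
    return 0.5 * width / math.tan(0.5 * camera_angle_x)


def iter_samples(transforms):
    """Yield one dict per image, pairing each file path with its light position."""
    for frame in transforms["frames"]:
        focal = focal_length_px(transforms["camera_angle_x"], frame["width"])
        for path, light in zip(frame["file_paths"], frame["light_positions"]):
            yield {
                "path": path,
                "light_position": light,                        # metres, stage origin
                "transform_matrix": frame["transform_matrix"],  # 4x4, shared by the view
                "focal": focal,
                "cx": frame["cx"],
                "cy": frame["cy"],
            }
```

Each frame describes one camera view lit by several lights, so all samples from the same frame share the camera matrix and differ only in image path and light position.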

For synthetic files: identical structure, naming `r_<cam>_l_<light>`.
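
Both naming schemes can be parsed with regular expressions. The following sketch assumes that θ and φ are written as decimal numbers and that board, camera and light indices are plain non-negative integers, which matches the example `theta_10.0_phi_0.0_board_1` but has not been checked against every file. `parse_image_name` is a hypothetical helper.

```python
import re

# theta_<θ>_phi_<φ>_board_<id>, with an optional .png extension
REAL_NAME = re.compile(
    r"theta_(?P<theta>-?\d+(?:\.\d+)?)"
    r"_phi_(?P<phi>-?\d+(?:\.\d+)?)"
    r"_board_(?P<board>\d+)(?:\.png)?"
)
# r_<camera>_l_<light>, with an optional .png extension
SYNTHETIC_NAME = re.compile(r"r_(?P<camera>\d+)_l_(?P<light>\d+)(?:\.png)?")


def parse_image_name(name):
    """Parse a real or synthetic image name into its numeric components."""
    base = name.rsplit("/", 1)[-1]  # drop any folder prefix such as "resized/"
    match = REAL_NAME.fullmatch(base)
    if match:
        return {
            "kind": "real",
            "theta": float(match["theta"]),  # degrees
            "phi": float(match["phi"]),      # degrees
            "board": int(match["board"]),
        }
    match = SYNTHETIC_NAME.fullmatch(base)
    if match:
        return {
            "kind": "synthetic",
            "camera": int(match["camera"]),
            "light": int(match["light"]),
        }
    raise ValueError(f"unrecognised image name: {name!r}")
```

The function accepts names with or without the `.png` extension, since the `file_paths` entries in the schema omit it.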

## Licensing & Third-Party Assets

| Asset | Source | License / Note |
|-------|--------|----------------|
| Synthetic models | [Stanford 3-D Scans](https://graphics.stanford.edu/data/3Dscanrep/) | Varies (non-commercial / research) |
| | [BlenderKit](https://www.blenderkit.com/) | CC-0, CC-BY or Royalty-Free (check per-asset page) |
| HDR env-maps | [Poly Haven](https://polyhaven.com/) | CC-0 |
| Code | [SSS-GS](https://github.com/cgtuebingen/SSS-GS) | MIT (see repo) |

The dataset is released **for non-commercial research and educational use**.
If you plan to redistribute or use individual synthetic assets commercially, verify the upstream license first.

## Citation

If you use this dataset, please cite the paper:

```bibtex
@inproceedings{sss_gs,
  author = {Dihlmann, Jan-Niklas and Majumdar, Arjun and Engelhardt, Andreas and Braun, Raphael and Lensch, Hendrik P.A.},
  booktitle = {Advances in Neural Information Processing Systems},
  editor = {A. Globerson and L. Mackey and D. Belgrave and A. Fan and U. Paquet and J. Tomczak and C. Zhang},
  pages = {121765--121789},
  publisher = {Curran Associates, Inc.},
  title = {Subsurface Scattering for Gaussian Splatting},
  url = {https://proceedings.neurips.cc/paper_files/paper/2024/file/dc72529d604962a86b7730806b6113fa-Paper-Conference.pdf},
  volume = {37},
  year = {2024}
}
```

## Contact & Acknowledgements
Questions, raw-capture requests, or pull-requests?
📧 `jan-niklas.dihlmann (at) uni-tuebingen.de`

This work was funded by DFG (EXC 2064/1, SFB 1233) and the Tübingen AI Center.
