
- newspapers
- history
pretty_name: Newspaper Navigator
license: cc0-1.0
language:
- en
size_categories:
- 1M<n<10M
---

# Dataset Card for Newspaper Navigator

## Dataset Summary

This dataset provides a Parquet-converted version of the [Newspaper Navigator](https://news-navigator.labs.loc.gov/) dataset from the Library of Congress. Originally released as JSON, Newspaper Navigator contains over 16 million pages of historic US newspapers annotated with bounding boxes, predicted visual types (e.g., photographs, maps), and OCR content. Newspaper Navigator itself was created as part of a project by Benjamin Germain Lee et al.

This version of the dataset was created using [`nnanno`](https://github.com/Living-with-machines/nnanno), a tool for sampling, annotating, and running inference on the Newspaper Navigator dataset. The dataset is split into separate configurations by `predicted_type` (e.g., `photos`, `illustrations`, `maps`) so that specific content types can be downloaded individually.

All records include metadata such as publication date, location, LCCN, bounding boxes, OCR text, IIIF image links, and source URLs.

Currently, this version is missing the `ads` and `headlines` configurations. I am working on adding these to the dataset!

### Loading the Dataset with Hugging Face Datasets

```python
from datasets import load_dataset

# Load a specific configuration (e.g., photos)
photos_dataset = load_dataset("davanstrien/newspaper-navigator", "photos")

# View the first example
example = photos_dataset["train"][0]
print(f"Publication: {example['name']}")
print(f"Date: {example['pub_date']}")
print(f"OCR text: {example['ocr']}")

# Load multiple configurations (named `loaded` so it does not shadow the `datasets` package)
configs = ["photos", "cartoons", "maps"]
loaded = {config: load_dataset("davanstrien/newspaper-navigator", config) for config in configs}

# Filter by date range (e.g., World War II era). pub_date is "YYYY-MM-DD",
# so comparing four-digit year strings gives the same order as comparing numbers.
ww2_photos = photos_dataset["train"].filter(
    lambda x: "1939" <= x["pub_date"][:4] <= "1945"
)
```

### Dataset Structure

The dataset currently has the following configurations:

- `cartoons`
- `comics`
- `default`
- `illustrations`
- `maps`
- `photos`

Each configuration corresponds to one `predicted_type` of visual content extracted by the original object detection model. Within each configuration, each row represents a predicted bounding box within a newspaper page.

Each row includes the following features:

- `filepath`: File path of the page image
- `pub_date`: Publication date (`YYYY-MM-DD`)
- `page_seq_num`: Sequence number of the page within the edition
- `edition_seq_num`: Sequence number of the edition
- `batch`: Chronicling America batch identifier
- `lccn`: Library of Congress Control Number
- `box`: Bounding box coordinates `[x, y, width, height]`
- `score`: Prediction confidence score
- `ocr`: OCR text extracted from the bounding box
- `place_of_publication`: Place of publication
- `geographic_coverage`: Geographic areas covered
- `name`: Newspaper title
- `publisher`: Publisher name
- `url`: URL to the original JP2 image
- `page_url`: URL to the Chronicling America page
- `prediction_section_iiif_url`: IIIF URL targeting the visual region
- `iiif_full_url`: IIIF URL for the full page image
- `predicted_type`: Predicted visual type (e.g., photograph, map)

### Example

}
```

### Potential Applications

This dataset opens up numerous research and application opportunities across multiple domains:

#### Historical Research

- Track visual depictions of events, people, or places across time
- Analyze evolving graphic design and visual communication techniques in newspapers
- Study regional differences in visual reporting styles across the United States

#### Machine Learning

- Fine-tune computer vision models on historical document imagery
- Develop OCR improvements for historical printed materials
- Create multimodal models that combine visual elements with surrounding text

#### Digital Humanities

- Analyze how visual representations of specific groups or topics evolved over time
- Map geographic coverage of visual news content across different regions
- Study the emergence and evolution of political cartoons, comics, and photojournalism

#### Educational Applications

- Create interactive timelines of historical events using primary source imagery
- Develop educational tools for studying visual culture and media literacy
- Build classroom resources using authentic historical newspaper visuals

### IIIF tl;dr

This dataset includes [IIIF](https://iiif.io/) image URLs. IIIF is an open standard that libraries and archives use to serve high-resolution images. These URLs give precise access to a **full page** or a **specific region** (e.g., a bounding box around a photo or map) without downloading the entire file, which makes them well suited to pipelines that load visual content on demand.

---

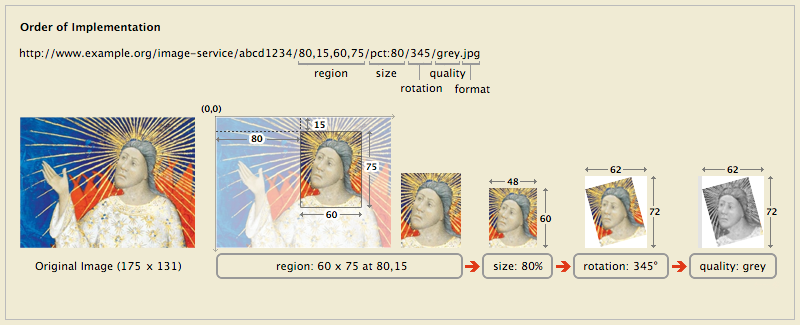

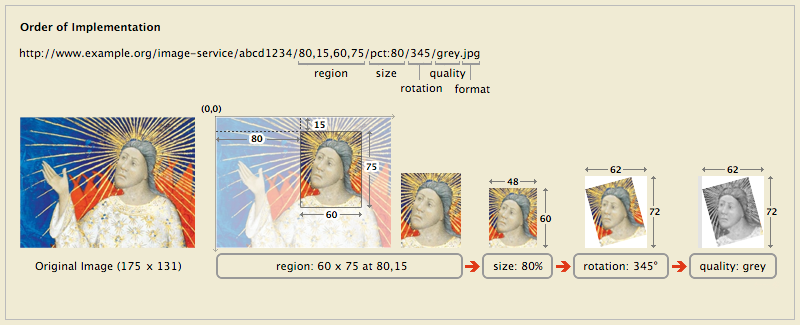

### How a IIIF Image URL Works

A IIIF image URL has a standard structure:

```
{scheme}://{server}/{prefix}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}
```

This lets you retrieve just a portion of an image at a given size or resolution. For example, the `region` and `size` parts can be used to extract a cropped 300×300 window from a 10,000×10,000 scan.
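The template can be made concrete with a small, standard-library-only Python sketch that assembles an IIIF image URL from its parts and turns a `box` value into a `region` string. The function names, the `example.org` server, the `iiif` prefix, and the `page-0001` identifier are hypothetical placeholders. Whether `box` stores pixels or fractions of the page is not stated here, so the `normalized` flag is an assumption you should check against the `prediction_section_iiif_url` column.

```python
from urllib.parse import quote


def iiif_image_url(server, prefix, identifier, region="full", size="max",
                   rotation="0", quality="default", fmt="jpg", scheme="https"):
    """Assemble a URL following the IIIF Image API template
    {scheme}://{server}/{prefix}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}
    """
    # Percent-encode characters such as "/" that would otherwise split the path.
    # ":" is legal inside a path segment, so it is left untouched.
    ident = quote(identifier, safe=":")
    return f"{scheme}://{server}/{prefix}/{ident}/{region}/{size}/{rotation}/{quality}.{fmt}"


def box_to_region(box, normalized=False):
    """Turn an [x, y, width, height] box into a IIIF region parameter.

    Hypothetical helper: pass normalized=True if the box holds fractions of
    the page (0-1) rather than pixels. IIIF expresses those as percentages
    with a "pct:" prefix.
    """
    x, y, w, h = box
    if normalized:
        return "pct:" + ",".join(f"{v * 100:g}" for v in (x, y, w, h))
    return ",".join(str(round(v)) for v in (x, y, w, h))


# A 300x300 crop starting at (4000, 4000) of a large scan, returned 300 px wide
crop_url = iiif_image_url("example.org", "iiif", "page-0001",
                          region=box_to_region([4000, 4000, 300, 300]),
                          size="300,")
print(crop_url)
# https://example.org/iiif/page-0001/4000,4000,300,300/300,/0/default.jpg
```

The `max` size keyword is valid in IIIF Image API 2.1 and 3.0. Older 2.0 servers expect `full` instead.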

Visual representation of the IIIF image URL:

---

### How IIIF Servers Deliver Cropped Images Efficiently

IIIF servers are designed to deliver image regions at scale:

- **Tiled, multi-resolution storage**: Images are stored as pyramids (e.g., in JPEG2000 or tiled TIFF).
- **Smart region requests**: URLs specify just the part of the image you want.
- **No full image needed**: The server reads only the tiles that cover the requested region and returns the cropped (and, if requested, resized and re-encoded) result.
- **Highly cacheable**: Deterministic URLs make responses easy to cache and scale.

This makes IIIF ideal for loading targeted image crops in ML workflows, without handling large downloads or preprocessing full-page scans.
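A little arithmetic makes the "no full image needed" point concrete. This Python sketch uses a simplified model, not the behavior of any particular IIIF server. It assumes a square grid of `tile_size`-pixel tiles at every pyramid level, where the level with scale factor `s` is the full image downsampled by `s`, and it counts which tiles a region request touches. The 512-pixel tile size and both function names are illustrative assumptions.

```python
import math


def tiles_for_region(x, y, w, h, tile_size=512, scale_factor=1):
    """Return (col, row) indices of the tiles at one pyramid level that overlap
    the full-resolution region (x, y, w, h)."""
    # At scale factor s, one tile covers tile_size * s full-resolution pixels per side.
    span = tile_size * scale_factor
    cols = range(x // span, (x + w - 1) // span + 1)
    rows = range(y // span, (y + h - 1) // span + 1)
    return [(c, r) for r in rows for c in cols]


def total_tiles(width, height, tile_size=512, scale_factor=1):
    """Number of tiles (including partial edge tiles) in one pyramid level."""
    span = tile_size * scale_factor
    return math.ceil(width / span) * math.ceil(height / span)


# The 300x300 crop from a 10,000x10,000 scan described earlier
needed = tiles_for_region(4000, 4000, 300, 300)
print(len(needed), "of", total_tiles(10_000, 10_000), "tiles")  # 4 of 400 tiles

# A small thumbnail of the whole page can come from a coarse level instead
print(len(tiles_for_region(0, 0, 10_000, 10_000, scale_factor=32)))  # 1
```

Real servers and JPEG2000 codecs handle edge tiles and resolution levels in more detail than this model, but the proportion is the point: a small crop touches a handful of tiles, not the whole scan.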


> ⚠️ **Note:** Please use IIIF image APIs responsibly and in accordance with the current [Library of Congress Terms of Use](https://www.loc.gov/legal/). You should still consider the number of requests you make, cache client-side, etc.

### Source

The conversion process reformats JSON metadata into structured Parquet files for easier querying and integration with modern ML pipelines.

### Ethical Considerations

Working with historical newspaper content requires careful consideration of several ethical dimensions:

#### Historical Context and Bias

- Historical newspapers reflect the biases and prejudices of their time periods
- Content may include offensive, racist, or discriminatory language and imagery
- Researchers should contextualize findings within the historical social and political climate

#### Responsible Use Guidelines

- Avoid decontextualizing historical content in ways that could perpetuate stereotypes
- Consider adding appropriate content warnings when sharing potentially offensive historical material
- Acknowledge the partial and biased nature of newspaper coverage from different eras

#### Technical Limitations and Representation Bias

- OCR quality varies significantly across the dataset, potentially creating selection bias in text-based analyses
- The machine learning models used to identify visual elements may have their own biases in classification accuracy
- Certain communities and perspectives are underrepresented in historical newspaper archives
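Before running a text-based analysis, one rough way to see how uneven OCR coverage is would be to measure, per decade, the share of boxes whose OCR text is empty or very short. This is a minimal sketch, not a validated quality metric. Treating short OCR as a proxy for poor OCR is an assumption, and the `min_chars` threshold is arbitrary. This card does not say whether `ocr` is stored as a string or as a list of tokens, so the code accepts both. The function works on any iterable of row dictionaries, such as a sample drawn with `photos_dataset["train"].shuffle(seed=0).select(range(10_000))`.

```python
from collections import defaultdict


def short_ocr_rate_by_decade(rows, min_chars=20):
    """Fraction of rows per decade whose OCR text has fewer than `min_chars`
    non-whitespace characters (hypothetical helper)."""
    totals = defaultdict(int)
    short = defaultdict(int)
    for row in rows:
        decade = int(row["pub_date"][:4]) // 10 * 10  # "1918-05-01" -> 1910
        ocr = row.get("ocr") or ""
        if isinstance(ocr, list):  # tolerate token lists as well as strings
            ocr = " ".join(ocr)
        totals[decade] += 1
        if len("".join(ocr.split())) < min_chars:
            short[decade] += 1
    return {decade: short[decade] / totals[decade] for decade in sorted(totals)}


sample = [
    {"pub_date": "1901-02-03", "ocr": "Photograph of the new bridge at dusk"},
    {"pub_date": "1905-06-07", "ocr": ""},
    {"pub_date": "1912-01-01", "ocr": ["Map", "of", "the", "western", "front", "lines"]},
    {"pub_date": "1918-05-01", "ocr": None},
]
print(short_ocr_rate_by_decade(sample))  # {1900: 0.5, 1910: 0.5}
```

Large differences between decades suggest that results based only on rows with usable OCR may over-represent some periods.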

#### API Usage Ethics

- The IIIF image API should be used responsibly to avoid overwhelming the Library of Congress servers
- Implement caching and throttling in applications that make frequent requests
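Here is a minimal Python sketch of the caching and throttling recommended above, using only the standard library. The class name, the one-second default interval, and the unbounded in-memory cache are illustrative assumptions, not a Library of Congress rate limit. Check the current terms of use for actual guidance, and consider an on-disk cache for long-running jobs. The fetch function is passed in, so the throttling logic can be tested without network access.

```python
import time
from typing import Callable, Dict
from urllib.request import urlopen


class PoliteFetcher:
    """Fetch each URL at most once and wait at least `min_interval` seconds
    between network requests (hypothetical helper)."""

    def __init__(self, fetch: Callable[[str], bytes], min_interval: float = 1.0):
        self._fetch = fetch
        self._min_interval = min_interval
        self._cache: Dict[str, bytes] = {}
        self._last_request = float("-inf")

    def get(self, url: str) -> bytes:
        if url in self._cache:  # repeated requests never reach the server
            return self._cache[url]
        wait = self._min_interval - (time.monotonic() - self._last_request)
        if wait > 0:  # throttle: space out consecutive network requests
            time.sleep(wait)
        data = self._fetch(url)
        self._last_request = time.monotonic()
        self._cache[url] = data
        return data


def http_get(url: str) -> bytes:
    """Plain HTTP GET with the standard library."""
    with urlopen(url, timeout=30) as response:
        return response.read()


# Typical use: fetcher = PoliteFetcher(http_get); image_bytes = fetcher.get(iiif_url)
```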

### Citation

```bibtex


This work draws on the remarkable digitization and metadata efforts of the Library of Congress’s Chronicling America and the innovative Newspaper Navigator project.

### Dataset Contact

This conversion of the dataset is created and maintained by @davanstrien.