Commit

·

b0e0bef

1

Parent(s):

aaf6c89

Create README.md

README.md

ADDED

|

@@ -0,0 +1,59 @@


| 1 |

+

---

|

| 2 |

+

language: multilingual

|

| 3 |

+

datasets:

|

| 4 |

+

- mc4

|

| 5 |

+

tags:

|

| 6 |

+

- summarization

|

| 7 |

+

- translation

|

| 8 |

+

|

| 9 |

+

license: apache-2.0

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

# ByT5 - Small

|

| 13 |

+

|

| 14 |

+

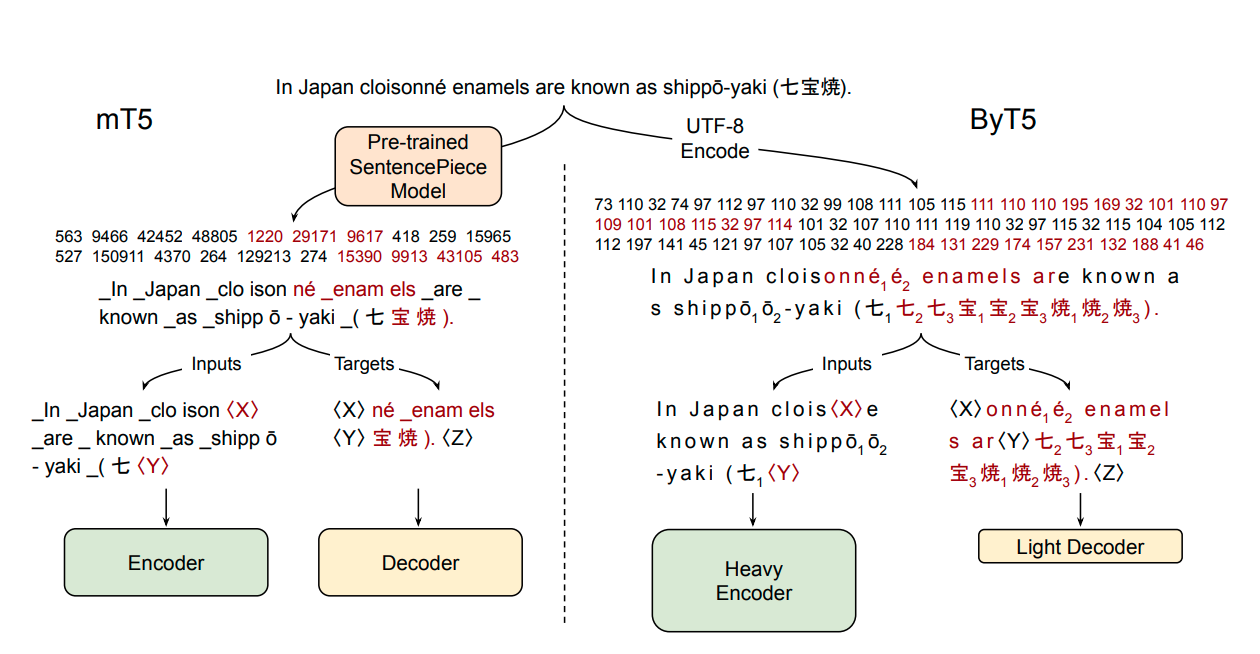

ByT5 is a tokenizer-free version of [Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) and generally follows the architecture of [MT5](https://huggingface.co/google/mt5-small).

|

| 15 |

+

|

| 16 |

+

ByT5 was pre-trained only on [mC4](https://www.tensorflow.org/datasets/catalog/c4#c4multilingual), without any supervised training, using an average span mask of 20 UTF-8 characters. Therefore, this model has to be fine-tuned before it is usable on a downstream task.

|

| 17 |

+

|

| 18 |

+

ByT5 works especially well on noisy text data, *e.g.*, `google/byt5-small` significantly outperforms [mt5-small](https://huggingface.co/google/mt5-small) on [TweetQA](https://arxiv.org/abs/1907.06292).

|

| 19 |

+

|

| 20 |

+

Paper: [ByT5: Towards a token-free future with pre-trained byte-to-byte models](https://arxiv.org/pdf/2105.13626.pdf)

|

| 21 |

+

|

| 22 |

+

Authors: *Linting Xue, Aditya Barua, Noah Constant, Rami Al-Rfou, Sharan Narang, Mihir Kale, Adam Roberts, Colin Raffel*

|

| 23 |

+

|

| 24 |

+

## Example Inference

|

| 25 |

+

|

| 26 |

+

ByT5 works on raw UTF-8 bytes and can be used without a tokenizer:

|

| 27 |

+

|

| 28 |

+

```python

|

| 29 |

+

from transformers import T5ForConditionalGeneration

|

| 30 |

+

import torch

|

| 31 |

+

|

| 32 |

+

model = T5ForConditionalGeneration.from_pretrained('google/byt5-small')

|

| 33 |

+

|

| 34 |

+

input_ids = torch.tensor([list("Life is like a box of chocolates.".encode("utf-8"))]) + 3 # add 3 for special tokens

|

| 35 |

+

labels = torch.tensor([list("La vie est comme une boîte de chocolat.".encode("utf-8"))]) + 3 # add 3 for special tokens

|

| 36 |

+

|

| 37 |

+

loss = model(input_ids, labels=labels).loss # forward pass

|

| 38 |

+

```
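
The `+ 3` offset used above can be made explicit with a small pure-Python sketch. It assumes the ByT5 convention that IDs 0, 1 and 2 are reserved for the padding, end-of-sequence and unknown tokens, so a byte value `b` maps to ID `b + 3`. The helper names `encode_bytes` and `decode_ids` are illustrative only and are not part of `transformers`.

```python
# Assumption: IDs 0 (pad), 1 (eos) and 2 (unk) are reserved, so bytes start at ID 3.
NUM_SPECIAL_TOKENS = 3
NUM_BYTE_VALUES = 256


def encode_bytes(text):
    """Map a string to ByT5-style IDs: UTF-8 byte value plus the offset."""
    return [b + NUM_SPECIAL_TOKENS for b in text.encode("utf-8")]


def decode_ids(ids):
    """Invert encode_bytes, skipping any ID outside the byte range."""
    lo, hi = NUM_SPECIAL_TOKENS, NUM_SPECIAL_TOKENS + NUM_BYTE_VALUES
    raw = bytes(i - NUM_SPECIAL_TOKENS for i in ids if lo <= i < hi)
    return raw.decode("utf-8", errors="ignore")


ids = encode_bytes("boîte")
print(ids)              # "î" takes two UTF-8 bytes, so 5 characters give 6 IDs
print(decode_ids(ids))  # round-trips back to the original string
```

Because the mapping is a fixed offset, model outputs can be turned back into text the same way, as long as special-token IDs are dropped first.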

|

| 39 |

+

|

| 40 |

+

For batched inference and training, however, it is recommended to use a tokenizer class for padding:

|

| 41 |

+

|

| 42 |

+

```python

|

| 43 |

+

from transformers import T5ForConditionalGeneration, ByT5Tokenizer

|

| 44 |

+

|

| 45 |

+

model = T5ForConditionalGeneration.from_pretrained('google/byt5-small')

|

| 46 |

+

tokenizer = ByT5Tokenizer.from_pretrained('google/byt5-small')

|

| 47 |

+

|

| 48 |

+

model_inputs = tokenizer(["Life is like a box of chocolates.", "Today is Monday."], padding="longest", return_tensors="pt")

|

| 49 |

+

labels = tokenizer(["La vie est comme une boîte de chocolat.", "Aujourd'hui c'est lundi."], padding="longest", return_tensors="pt").input_ids

|

| 50 |

+

|

| 51 |

+

loss = model(**model_inputs, labels=labels).loss # forward pass

|

| 52 |

+

```
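
To illustrate what padding to the longest sequence involves, here is a minimal pure-Python sketch. It assumes that the tokenizer appends an end-of-sequence ID (1) to each sequence and pads with ID 0, and it uses hypothetical helpers (`encode`, `pad_batch`, `mask_label_padding`) that are not part of `transformers`. It also shows a common fine-tuning practice, not used in the snippet above: replacing padded label positions with `-100` so that they are ignored by the cross-entropy loss.

```python
# Assumed ByT5 special-token layout: pad = 0, eos = 1, byte values start at 3.
PAD_ID, EOS_ID, OFFSET = 0, 1, 3
IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss


def encode(text):
    """UTF-8 bytes shifted by the offset, followed by the end-of-sequence ID."""
    return [b + OFFSET for b in text.encode("utf-8")] + [EOS_ID]


def pad_batch(texts):
    """Pad every sequence to the longest one and build the attention mask."""
    rows = [encode(t) for t in texts]
    width = max(len(r) for r in rows)
    input_ids = [r + [PAD_ID] * (width - len(r)) for r in rows]
    attention_mask = [[1] * len(r) + [0] * (width - len(r)) for r in rows]
    return input_ids, attention_mask


def mask_label_padding(label_ids):
    """Replace padded label positions so they do not contribute to the loss."""
    return [[IGNORE_INDEX if i == PAD_ID else i for i in row] for row in label_ids]


input_ids, attention_mask = pad_batch(["Life is like a box of chocolates.", "Today is Monday."])
labels, _ = pad_batch(["La vie est comme une boîte de chocolat.", "Aujourd'hui c'est lundi."])
labels = mask_label_padding(labels)
print(len(input_ids[0]), len(input_ids[1]))  # both rows share the same length
```

The attention mask tells the encoder which positions are real input, while the `-100` labels keep padding in the targets from affecting the loss.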

|

| 53 |

+

|

| 54 |

+

## Abstract

|

| 55 |

+

|

| 56 |

+

Most widely-used pre-trained language models operate on sequences of tokens corresponding to word or subword units. Encoding text as a sequence of tokens requires a tokenizer, which is typically created as an independent artifact from the model. Token-free models that instead operate directly on raw text (bytes or characters) have many benefits: they can process text in any language out of the box, they are more robust to noise, and they minimize technical debt by removing complex and error-prone text preprocessing pipelines. Since byte or character sequences are longer than token sequences, past work on token-free models has often introduced new model architectures designed to amortize the cost of operating directly on raw text. In this paper, we show that a standard Transformer architecture can be used with minimal modifications to process byte sequences. We carefully characterize the trade-offs in terms of parameter count, training FLOPs, and inference speed, and show that byte-level models are competitive with their token-level counterparts. We also demonstrate that byte-level models are significantly more robust to noise and perform better on tasks that are sensitive to spelling and pronunciation. As part of our contribution, we release a new set of pre-trained byte-level Transformer models based on the T5 architecture, as well as all code and data used in our experiments.

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

|