BAH Dataset for Ambivalence/Hesitancy Recognition in Videos for Behavioural Change

by Manuela González-González3,4, Soufiane Belharbi1, Muhammad Osama Zeeshan1, Masoumeh Sharafi1, Muhammad Haseeb Aslam1, Alessandro Lameiras Koerich2, Marco Pedersoli1, Simon L. Bacon3,4, Eric Granger1

1 LIVIA, Dept. of Systems Engineering, ETS Montreal, Canada

2 LIVIA, Dept. of Software and IT Engineering, ETS Montreal, Canada

3 Dept. of Health, Kinesiology, & Applied Physiology, Concordia University, Montreal, Canada

4 Montreal Behavioural Medicine Centre, CIUSSS Nord-de-l’Ile-de-Montréal, Canada

Abstract

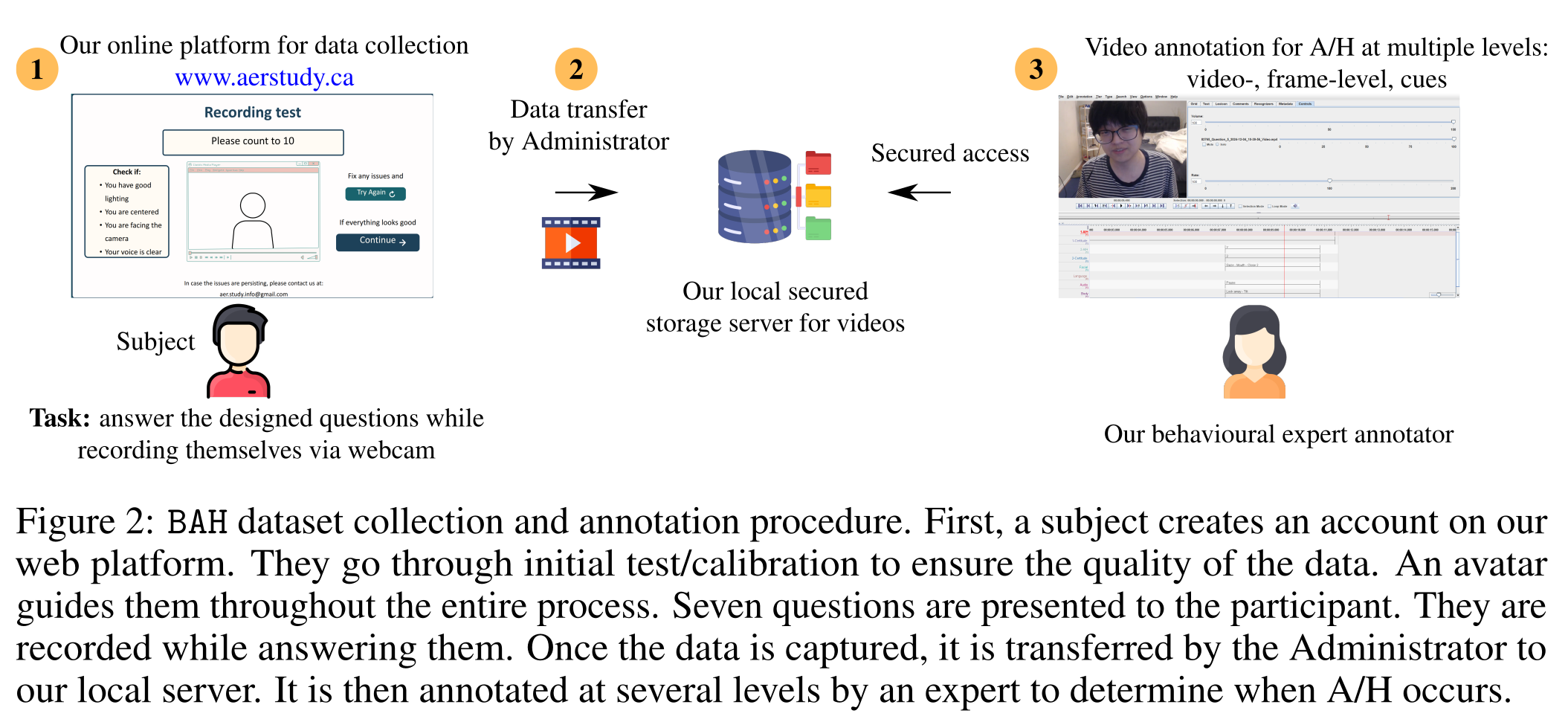

Recognizing complex emotions linked to ambivalence and hesitancy (A/H) can play a critical role in the personalization and effectiveness of digital behaviour change interventions. These subtle and conflicting emotions are manifested by a discord between multiple modalities, such as facial and vocal expressions, and body language. Although experts can be trained to identify A/H, integrating them into digital interventions is costly and less effective. Automatic learning systems provide a cost-effective alternative that can adapt to individual users, and operate seamlessly within real-time, resource-limited environments. However, there are currently no datasets available for the design of ML models to recognize A/H. This paper introduces a first Behavioural Ambivalence/Hesitancy (BAH) dataset collected for subject-based multimodal recognition of A/H in videos. It contains videos from 224 subjects captured across 9 provinces in Canada, with different age, and ethnicity. Through our web platform, we recruited subjects to answer 7 questions, some of which were designed to elicit A/H while recording themselves via webcam with microphone. BAH amounts to 1,118 videos for a total duration of 8.26 hours with 1.5 hours of A/H. Our behavioural team annotated timestamp segments to indicate where A/H occurs, and provide frame- and video-level annotations with the A/H cues. Video transcripts and their timestamps are also included, along with cropped and aligned faces in each frame, and a variety of subject meta-data. Additionally, this paper provides preliminary benchmarking results baseline models for BAH at frame- and video-level recognition with mono- and multi-modal setups. It also includes results on models for zero-shot prediction, and for personalization using unsupervised domain adaptation. The limited performance of baseline models highlights the challenges of recognizing A/H in real-world videos.

Code: Pytorch 2.2.2

Citation:

@article{gonzalez-25-bah,

title={{BAH} Dataset for Ambivalence/Hesitancy Recognition in Videos for Behavioural Change},

author={González-González, M. and Belharbi, S. and Zeeshan, M. O. and

Sharafi, M. and Aslam, M. H and Pedersoli, M. and Koerich, A. L. and

Bacon, S. L. and Granger, E.},

journal={CoRR},

volume={abs/2505.19328},

year={2025}

}

Content:

BAH dataset: Download

To download BAH dataset, please follow closely the instructions described here: BAH Download instructions.

Pretrained weights

The folder pretrained-models contains the weights of several pretrained models:

- Frame-level supervised learning:

frame-level-supervised-learningcontains facial expression vision models, BAH_DB vision models, and multimodal models with different fusion techniques. - Domain adaptation: comming soon.

BAH presentation

BAH: Capture & Annotation

BAH: Variability

BAH: Experimental Protocol

Experiments: Baselines

1) Frame-level supervised classification using multimodal

2) Video-level supervised classification using multimodal

3) Zero-shot performance: Frame- & video-level

4) Personalization using domain adaptation (frame-level)

Conclusion

This work introduces a new and unique multimodal and subject-based video dataset, BAH, for A/H recognition in videos. BAH contains 224 participants across 9 provinces in Canada. Recruited participants answer 7 designed questions to elicit A/H while recording themselves via webcam and microphone via our web-platform. The dataset amounts to 1,118 videos for a total duration of 8.26 hours with 1.5 hours of A/H. It was annotated by our behavioural team at video- and frame-level.

Our initial benchmarking yielded limited performance highlighting the difficulty of A/H recognition. Our results showed also that leveraging context, multimodality, and adapted feature fusion is a first good direction to design robust models. Our dataset and code are made public.

Acknowledgments

This work was supported in part by the Fonds de recherche du Québec – Santé, the Natural Sciences and Engineering Research Council of Canada, Canada Foundation for Innovation, and the Digital Research Alliance of Canada. We thank interns that participated in the dataset annotation: Jessica Almeida (Concordia University, Université du Québec à Montréal), and Laura Lucia Ortiz (MBMC).