diff --git a/mmpose/configs/animal_2d_keypoint/rtmpose/README.md b/mmpose/configs/animal_2d_keypoint/rtmpose/README.md
new file mode 100644
index 0000000000000000000000000000000000000000..fbb103e36c5c9e66292904d15c8db467ce18f3b4
--- /dev/null
+++ b/mmpose/configs/animal_2d_keypoint/rtmpose/README.md
@@ -0,0 +1,16 @@
+# RTMPose
+
+Recent studies on 2D pose estimation have achieved excellent performance on public benchmarks, yet their application in the industrial community still suffers from heavy model parameters and high latency.
+To bridge this gap, we empirically study five aspects that affect the performance of multi-person pose estimation algorithms: paradigm, backbone network, localization algorithm, training strategy, and deployment inference, and present a high-performance real-time multi-person pose estimation framework, **RTMPose**, based on MMPose.
+Our RTMPose-m achieves **75.8% AP** on COCO with **90+ FPS** on an Intel i7-11700 CPU and **430+ FPS** on an NVIDIA GTX 1660 Ti GPU, and RTMPose-l achieves **67.0% AP** on COCO-WholeBody with **130+ FPS**, outperforming existing open-source libraries.
+To further evaluate RTMPose's capability in critical real-time applications, we also report the performance after deployment on mobile devices.
+
+## Results and Models
+
+### AP-10K Dataset
+
+Results on AP-10K validation set
+
+| Model | Input Size | AP | Details and Download |
+| :-------: | :--------: | :---: | :------------------------------------------: |
+| RTMPose-m | 256x256 | 0.722 | [rtmpose_ap10k.md](./ap10k/rtmpose_ap10k.md) |
diff --git a/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose-m_8xb64-210e_ap10k-256x256.py b/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose-m_8xb64-210e_ap10k-256x256.py
new file mode 100644
index 0000000000000000000000000000000000000000..0e8c007b311f07d3a838d015d37b88fc11f760e2
--- /dev/null
+++ b/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose-m_8xb64-210e_ap10k-256x256.py
@@ -0,0 +1,245 @@
+_base_ = ['../../../_base_/default_runtime.py']
+
+# runtime
+max_epochs = 210
+stage2_num_epochs = 30
+base_lr = 4e-3
+
+train_cfg = dict(max_epochs=max_epochs, val_interval=10)
+randomness = dict(seed=21)
+
+# optimizer
+optim_wrapper = dict(
+    type='OptimWrapper',
+    optimizer=dict(type='AdamW', lr=base_lr, weight_decay=0.05),
+    paramwise_cfg=dict(
+        norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True))
+
+# learning rate
+param_scheduler = [
+    dict(
+        type='LinearLR',
+        start_factor=1.0e-5,
+        by_epoch=False,
+        begin=0,
+        end=1000),
+    dict(
+        type='CosineAnnealingLR',
+        eta_min=base_lr * 0.05,
+        begin=max_epochs // 2,
+        end=max_epochs,
+        T_max=max_epochs // 2,
+        by_epoch=True,
+        convert_to_iter_based=True),
+]
+
+# automatically scaling LR based on the actual training batch size
+auto_scale_lr = dict(base_batch_size=512)
+
+# codec settings
+codec = dict(
+    type='SimCCLabel',
+    input_size=(256, 256),
+    sigma=(5.66, 5.66),
+    simcc_split_ratio=2.0,
+    normalize=False,
+    use_dark=False)
+
+# model settings
+model = dict(
+    type='TopdownPoseEstimator',
+    data_preprocessor=dict(
+        type='PoseDataPreprocessor',
+        mean=[123.675, 116.28, 103.53],
+        std=[58.395, 57.12, 57.375],
+        bgr_to_rgb=True),
+    backbone=dict(
+        _scope_='mmdet',
+        type='CSPNeXt',
+        arch='P5',
+        expand_ratio=0.5,
+        deepen_factor=0.67,
+        widen_factor=0.75,
+        out_indices=(4, ),
+        channel_attention=True,
+        norm_cfg=dict(type='SyncBN'),
+        act_cfg=dict(type='SiLU'),
+        init_cfg=dict(
+            type='Pretrained',
+            prefix='backbone.',
+            checkpoint='https://download.openmmlab.com/mmpose/v1/projects/'
+            'rtmposev1/cspnext-m_udp-aic-coco_210e-256x192-f2f7d6f6_20230130.pth'  # noqa
+        )),
+    head=dict(
+        type='RTMCCHead',
+        in_channels=768,
+        out_channels=17,
+        input_size=codec['input_size'],
+        in_featuremap_size=tuple([s // 32 for s in codec['input_size']]),
+        simcc_split_ratio=codec['simcc_split_ratio'],
+        final_layer_kernel_size=7,
+        gau_cfg=dict(
+            hidden_dims=256,
+            s=128,
+            expansion_factor=2,
+            dropout_rate=0.,
+            drop_path=0.,
+            act_fn='SiLU',
+            use_rel_bias=False,
+            pos_enc=False),
+        loss=dict(
+            type='KLDiscretLoss',
+            use_target_weight=True,
+            beta=10.,
+            label_softmax=True),
+        decoder=codec),
+    test_cfg=dict(flip_test=True, ))
+
+# base dataset settings
+dataset_type = 'AP10KDataset'
+data_mode = 'topdown'
+data_root = 'data/ap10k/'
+
+backend_args = dict(backend='local')
+# backend_args = dict(
+#     backend='petrel',
+#     path_mapping=dict({
+#         f'{data_root}': 's3://openmmlab/datasets/pose/ap10k/',
+#         f'{data_root}': 's3://openmmlab/datasets/pose/ap10k/'
+#     }))
+
+# pipelines
+train_pipeline = [
+    dict(type='LoadImage', backend_args=backend_args),
+    dict(type='GetBBoxCenterScale'),
+    dict(type='RandomFlip', direction='horizontal'),
+    dict(type='RandomHalfBody'),
+    dict(
+        type='RandomBBoxTransform', scale_factor=[0.6, 1.4], rotate_factor=80),
+    dict(type='TopdownAffine', input_size=codec['input_size']),
+    dict(type='mmdet.YOLOXHSVRandomAug'),
+    dict(
+        type='Albumentation',
+        transforms=[
+            dict(type='Blur', p=0.1),
+            dict(type='MedianBlur', p=0.1),
+            dict(
+                type='CoarseDropout',
+                max_holes=1,
+                max_height=0.4,
+                max_width=0.4,
+                min_holes=1,
+                min_height=0.2,
+                min_width=0.2,
+                p=1.0),
+        ]),
+    dict(type='GenerateTarget', encoder=codec),
+    dict(type='PackPoseInputs')
+]
+val_pipeline = [
+    dict(type='LoadImage', backend_args=backend_args),
+    dict(type='GetBBoxCenterScale'),
+    dict(type='TopdownAffine', input_size=codec['input_size']),
+    dict(type='PackPoseInputs')
+]
+
+train_pipeline_stage2 = [
+    dict(type='LoadImage', backend_args=backend_args),
+    dict(type='GetBBoxCenterScale'),
+    dict(type='RandomFlip', direction='horizontal'),
+    dict(type='RandomHalfBody'),
+    dict(
+        type='RandomBBoxTransform',
+        shift_factor=0.,
+        scale_factor=[0.75, 1.25],
+        rotate_factor=60),
+    dict(type='TopdownAffine', input_size=codec['input_size']),
+    dict(type='mmdet.YOLOXHSVRandomAug'),
+    dict(
+        type='Albumentation',
+        transforms=[
+            dict(type='Blur', p=0.1),
+            dict(type='MedianBlur', p=0.1),
+            dict(
+                type='CoarseDropout',
+                max_holes=1,
+                max_height=0.4,
+                max_width=0.4,
+                min_holes=1,
+                min_height=0.2,
+                min_width=0.2,
+                p=0.5),
+        ]),
+    dict(type='GenerateTarget', encoder=codec),
+    dict(type='PackPoseInputs')
+]
+
+# data loaders
+train_dataloader = dict(
+    batch_size=64,
+    num_workers=10,
+    persistent_workers=True,
+    sampler=dict(type='DefaultSampler', shuffle=True),
+    dataset=dict(
+        type=dataset_type,
+        data_root=data_root,
+        data_mode=data_mode,
+        ann_file='annotations/ap10k-train-split1.json',
+        data_prefix=dict(img='data/'),
+        pipeline=train_pipeline,
+    ))
+val_dataloader = dict(
+    batch_size=32,
+    num_workers=10,
+    persistent_workers=True,
+    drop_last=False,
+    sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),
+    dataset=dict(
+        type=dataset_type,
+        data_root=data_root,
+        data_mode=data_mode,
+        ann_file='annotations/ap10k-val-split1.json',
+        data_prefix=dict(img='data/'),
+        test_mode=True,
+        pipeline=val_pipeline,
+    ))
+test_dataloader = dict(
+    batch_size=32,
+    num_workers=10,
+    persistent_workers=True,
+    drop_last=False,
+    sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),
+    dataset=dict(
+        type=dataset_type,
+        data_root=data_root,
+        data_mode=data_mode,
+        ann_file='annotations/ap10k-test-split1.json',
+        data_prefix=dict(img='data/'),
+        test_mode=True,
+        pipeline=val_pipeline,
+    ))
+
+# hooks
+default_hooks = dict(
+    checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))
+
+custom_hooks = [
+    dict(
+        type='EMAHook',
+        ema_type='ExpMomentumEMA',
+        momentum=0.0002,
+        update_buffers=True,
+        priority=49),
+    dict(
+        type='mmdet.PipelineSwitchHook',
+        switch_epoch=max_epochs - stage2_num_epochs,
+        switch_pipeline=train_pipeline_stage2)
+]
+
+# evaluators
+val_evaluator = dict(
+    type='CocoMetric',
+    ann_file=data_root + 'annotations/ap10k-val-split1.json')
+test_evaluator = dict(
+    type='CocoMetric',
+    ann_file=data_root + 'annotations/ap10k-test-split1.json')
diff --git a/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose_ap10k.md b/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose_ap10k.md
new file mode 100644
index 0000000000000000000000000000000000000000..4d035a372572aaac93ff980acbb367a1cc6a5efa
--- /dev/null
+++ b/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose_ap10k.md
@@ -0,0 +1,25 @@

+Results on AP-10K validation set

+

+| Arch | Input Size | AP | AP50 | AP75 | APM | APL | ckpt | log |

+| :----------------------------------------- | :--------: | :---: | :-------------: | :-------------: | :------------: | :------------: | :-----------------------------------------: | :----------------------------------------: |

+| [rtmpose-m](/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose-m_8xb64-210e_ap10k-256x256.py) | 256x256 | 0.722 | 0.939 | 0.788 | 0.569 | 0.728 | [ckpt](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-ap10k_pt-aic-coco_210e-256x256-7a041aa1_20230206.pth) | [log](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-ap10k_pt-aic-coco_210e-256x256-7a041aa1_20230206.json) |
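
The RTMPose-m config above uses the `SimCCLabel` codec instead of a 2-D heatmap. SimCC treats each coordinate as a classification over 1-D bins, with one vector for x and one for y. The following is a minimal pure-Python sketch of that idea using the `codec` values from the config (`input_size=(256, 256)`, `simcc_split_ratio=2.0`, `sigma=(5.66, 5.66)`, `normalize=False`). It rests on my own simplifying assumptions and is not mmpose's implementation. `encode_simcc` and `decode_simcc` are hypothetical helpers, and the sketch ignores visibility weights, out-of-bounds keypoints, and any rounding mmpose applies.

```python
import math

# Values copied from the `codec` dict in rtmpose-m_8xb64-210e_ap10k-256x256.py
INPUT_SIZE = (256, 256)     # (width, height) of the cropped input
SIMCC_SPLIT_RATIO = 2.0     # number of bins per input pixel
SIGMA = (5.66, 5.66)        # Gaussian std in bin units, per axis (x, y)


def gaussian_1d(num_bins, mu, sigma):
    """Unnormalized 1-D Gaussian over bins 0..num_bins-1 (normalize=False)."""
    return [math.exp(-((i - mu) ** 2) / (2 * sigma ** 2)) for i in range(num_bins)]


def encode_simcc(x, y):
    """Hypothetical helper: turn one keypoint (input-pixel coords) into x/y SimCC targets."""
    w_bins = int(round(INPUT_SIZE[0] * SIMCC_SPLIT_RATIO))
    h_bins = int(round(INPUT_SIZE[1] * SIMCC_SPLIT_RATIO))
    target_x = gaussian_1d(w_bins, x * SIMCC_SPLIT_RATIO, SIGMA[0])
    target_y = gaussian_1d(h_bins, y * SIMCC_SPLIT_RATIO, SIGMA[1])
    return target_x, target_y


def decode_simcc(target_x, target_y):
    """Hypothetical helper: per-axis argmax, then undo the split ratio."""
    bx = max(range(len(target_x)), key=target_x.__getitem__)
    by = max(range(len(target_y)), key=target_y.__getitem__)
    return bx / SIMCC_SPLIT_RATIO, by / SIMCC_SPLIT_RATIO


tx, ty = encode_simcc(100.5, 37.0)
print(len(tx), len(ty))        # 512 512
print(decode_simcc(tx, ty))    # (100.5, 37.0)
```

With a split ratio of 2.0, each axis has 512 bins, so the representable resolution is half an input pixel. In the same config, the head's `in_featuremap_size` works out to `256 // 32 = 8` per side.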

diff --git a/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose_ap10k.yml b/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose_ap10k.yml

new file mode 100644

index 0000000000000000000000000000000000000000..0441d9e65faa6b0274f9152ff31ef1b66a112214

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose_ap10k.yml

@@ -0,0 +1,19 @@

+Models:

+- Config: configs/animal_2d_keypoint/rtmpose/ap10k/rtmpose-m_8xb64-210e_ap10k-256x256.py

+ In Collection: RTMPose

+ Alias: animal

+ Metadata:

+ Architecture:

+ - RTMPose

+ Training Data: AP-10K

+ Name: rtmpose-m_8xb64-210e_ap10k-256x256

+ Results:

+ - Dataset: AP-10K

+ Metrics:

+ AP: 0.722

+ AP@0.5: 0.939

+ AP@0.75: 0.788

+ AP (L): 0.728

+ AP (M): 0.569

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-ap10k_pt-aic-coco_210e-256x256-7a041aa1_20230206.pth
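
In the RTMPose-m config in this diff, the schedule-related fields work together. After the 1000-iteration linear warm-up, the LR is held at `base_lr`. From epoch `max_epochs // 2` (105) to 210 it is cosine-annealed down to `eta_min = base_lr * 0.05`, and `mmdet.PipelineSwitchHook` swaps in `train_pipeline_stage2` for the last `stage2_num_epochs` epochs. The sketch below computes these quantities in plain Python and relies on a few assumptions:

- It uses the standard closed-form cosine annealing formula and ignores the warm-up.
- It treats `auto_scale_lr` as plain linear scaling against `base_batch_size=512` (8 GPUs x 64 images). As far as I know, this scaling is only applied when explicitly enabled, for example through the `--auto-scale-lr` option of mmpose's `tools/train.py`.
- `lr_at` and `scaled_lr` are hypothetical helper names.

```python
import math

# Values copied from rtmpose-m_8xb64-210e_ap10k-256x256.py
BASE_LR = 4e-3
MAX_EPOCHS = 210
STAGE2_NUM_EPOCHS = 30
ETA_MIN = BASE_LR * 0.05
COSINE_BEGIN = MAX_EPOCHS // 2  # 105
T_MAX = MAX_EPOCHS // 2         # 105


def lr_at(epoch):
    """Approximate LR at a (possibly fractional) epoch, ignoring the 1000-iter warm-up."""
    if epoch < COSINE_BEGIN:
        return BASE_LR
    progress = min(epoch - COSINE_BEGIN, T_MAX) / T_MAX
    return ETA_MIN + (BASE_LR - ETA_MIN) * (1 + math.cos(math.pi * progress)) / 2


def scaled_lr(num_gpus, batch_per_gpu, base_batch_size=512):
    """Linear LR scaling, assuming auto_scale_lr is enabled."""
    return BASE_LR * (num_gpus * batch_per_gpu) / base_batch_size


switch_epoch = MAX_EPOCHS - STAGE2_NUM_EPOCHS
print(switch_epoch)              # 180: PipelineSwitchHook swaps in train_pipeline_stage2
print(lr_at(50), lr_at(210))     # 0.004 on the plateau, then eta_min (about 2e-4) at the end
print(scaled_lr(4, 64))          # 0.002 when training on 4 GPUs x 64 images
```

The stage-2 pipeline that takes over at epoch 180 uses milder augmentation than the first stage. It narrows `scale_factor` to 0.75 to 1.25, uses `rotate_factor=60`, and applies `CoarseDropout` with `p=0.5`.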

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/README.md b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..90a440dc286ef02104ed7bbf59a606a030f7a68e

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/README.md

@@ -0,0 +1,68 @@

+# Top-down heatmap-based pose estimation

+

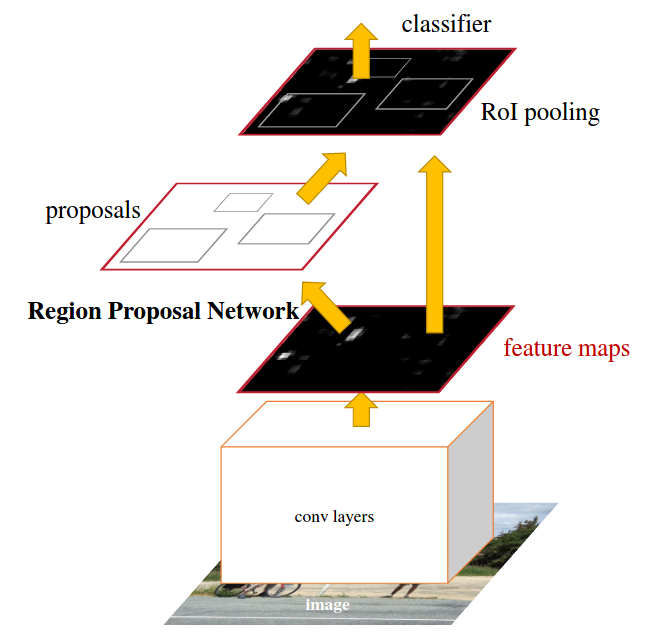

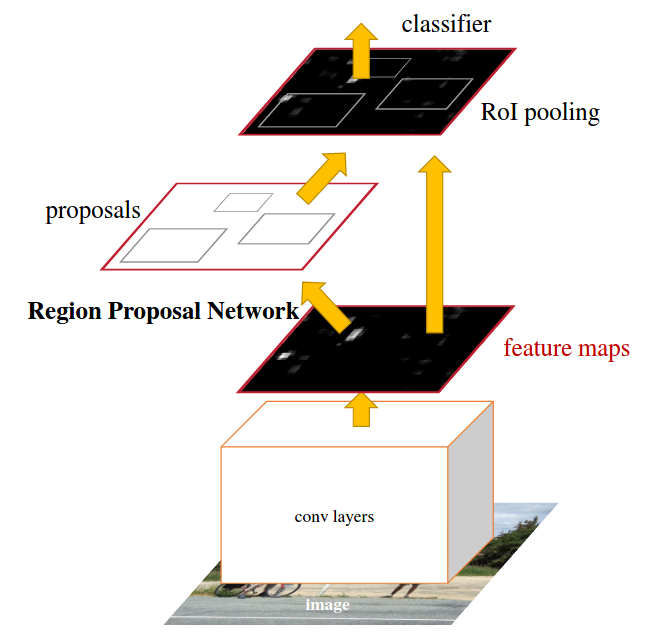

+Top-down methods divide the task into two stages: object detection, followed by single-object pose estimation given object bounding boxes. Instead of estimating keypoint coordinates directly, the pose estimator produces heatmaps that represent the

+likelihood of each pixel being a keypoint, following the paradigm introduced in [Simple Baselines for Human Pose Estimation and Tracking](http://openaccess.thecvf.com/content_ECCV_2018/html/Bin_Xiao_Simple_Baselines_for_ECCV_2018_paper.html).

+

+AP-10K (NeurIPS'2021)

+
+```bibtex
+@misc{yu2021ap10k,
+  title={AP-10K: A Benchmark for Animal Pose Estimation in the Wild},
+  author={Hang Yu and Yufei Xu and Jing Zhang and Wei Zhao and Ziyu Guan and Dacheng Tao},
+  year={2021},
+  eprint={2108.12617},
+  archivePrefix={arXiv},
+  primaryClass={cs.CV}
+}
+```

+

+## Results and Models

+

+### Animal-Pose Dataset

+

+Results on AnimalPose validation set (1117 instances)

+

+| Model | Input Size | AP | AR | Details and Download |

+| :--------: | :--------: | :---: | :---: | :-------------------------------------------------------: |

+| HRNet-w32 | 256x256 | 0.740 | 0.780 | [hrnet_animalpose.md](./animalpose/hrnet_animalpose.md) |

+| HRNet-w48 | 256x256 | 0.738 | 0.778 | [hrnet_animalpose.md](./animalpose/hrnet_animalpose.md) |

+| ResNet-152 | 256x256 | 0.704 | 0.748 | [resnet_animalpose.md](./animalpose/resnet_animalpose.md) |

+| ResNet-101 | 256x256 | 0.696 | 0.736 | [resnet_animalpose.md](./animalpose/resnet_animalpose.md) |

+| ResNet-50 | 256x256 | 0.691 | 0.736 | [resnet_animalpose.md](./animalpose/resnet_animalpose.md) |

+

+### AP-10K Dataset

+

+Results on AP-10K validation set

+

+| Model | Input Size | AP | Details and Download |

+| :--------: | :--------: | :---: | :--------------------------------------------------: |

+| HRNet-w48 | 256x256 | 0.728 | [hrnet_ap10k.md](./ap10k/hrnet_ap10k.md) |

+| HRNet-w32 | 256x256 | 0.722 | [hrnet_ap10k.md](./ap10k/hrnet_ap10k.md) |

+| ResNet-101 | 256x256 | 0.681 | [resnet_ap10k.md](./ap10k/resnet_ap10k.md) |

+| ResNet-50 | 256x256 | 0.680 | [resnet_ap10k.md](./ap10k/resnet_ap10k.md) |

+| CSPNeXt-m | 256x256 | 0.703 | [cspnext_udp_ap10k.md](./ap10k/cspnext_udp_ap10k.md) |

+

+### Desert Locust Dataset

+

+Results on Desert Locust test set

+

+| Model | Input Size | AUC | EPE | Details and Download |

+| :--------: | :--------: | :---: | :--: | :-------------------------------------------: |

+| ResNet-152 | 160x160 | 0.925 | 1.49 | [resnet_locust.md](./locust/resnet_locust.md) |

+| ResNet-101 | 160x160 | 0.907 | 2.03 | [resnet_locust.md](./locust/resnet_locust.md) |

+| ResNet-50 | 160x160 | 0.900 | 2.27 | [resnet_locust.md](./locust/resnet_locust.md) |

+

+### Grévy’s Zebra Dataset

+

+Results on Grévy’s Zebra test set

+

+| Model | Input Size | AUC | EPE | Details and Download |

+| :--------: | :--------: | :---: | :--: | :----------------------------------------: |

+| ResNet-152 | 160x160 | 0.921 | 1.67 | [resnet_zebra.md](./zebra/resnet_zebra.md) |

+| ResNet-101 | 160x160 | 0.915 | 1.83 | [resnet_zebra.md](./zebra/resnet_zebra.md) |

+| ResNet-50 | 160x160 | 0.914 | 1.87 | [resnet_zebra.md](./zebra/resnet_zebra.md) |

+
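
The Desert Locust and Grévy’s Zebra tables report AUC and EPE rather than AP. EPE is the mean Euclidean distance in pixels between predicted and ground-truth keypoints, so lower is better. AUC summarizes PCK over a sweep of thresholds, so higher is better.

The sketch below assumes the thresholds are `i / num_thrs` fractions of a fixed pixel `norm_factor`. I believe `norm_factor=30` and `num_thrs=20` are the defaults of mmpose's `AUC` metric, but treat them as assumptions. Unlike mmpose, this version pools all visible keypoints instead of averaging per keypoint type first. `epe`, `pck`, and `auc` are hypothetical helper names.

```python
import math


def epe(preds, gts, visible):
    """End-point error: mean Euclidean distance (pixels) over visible keypoints."""
    dists = [math.dist(p, g) for p, g, v in zip(preds, gts, visible) if v]
    return sum(dists) / len(dists)


def pck(preds, gts, visible, thr, norm):
    """Fraction of visible keypoints whose distance / norm is strictly below thr."""
    hits = [math.dist(p, g) / norm < thr
            for p, g, v in zip(preds, gts, visible) if v]
    return sum(hits) / len(hits)


def auc(preds, gts, visible, norm_factor=30.0, num_thrs=20):
    """Mean PCK over thresholds 0, 1/num_thrs, ..., (num_thrs - 1)/num_thrs."""
    return sum(pck(preds, gts, visible, i / num_thrs, norm_factor)
               for i in range(num_thrs)) / num_thrs


# Flattened keypoints: (x, y) pairs plus a visibility flag for each
preds = [(10, 10), (20, 20), (30, 30)]
gts = [(13, 14), (20, 20), (0, 0)]
visible = [True, True, False]   # the third keypoint is unlabeled and ignored
print(epe(preds, gts, visible))  # 2.5
print(auc(preds, gts, visible))  # 0.875
```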

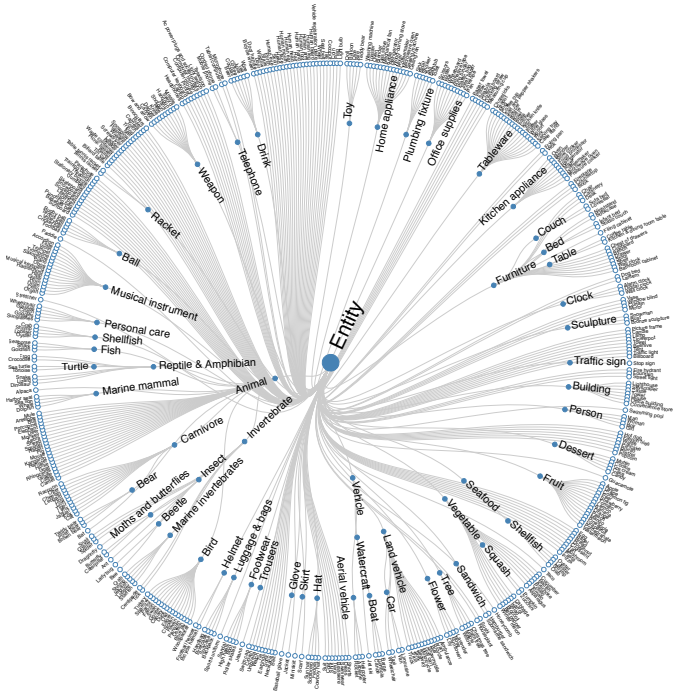

+### Animal-Kingdom Dataset

+

+Results on AnimalKingdom test set

+

+| Model | Input Size | class | PCK(0.05) | Details and Download |

+| :-------: | :--------: | :-----------: | :-------: | :---------------------------------------------------: |

+| HRNet-w32 | 256x256 | P1 | 0.6323 | [hrnet_animalkingdom.md](./ak/hrnet_animalkingdom.md) |

+| HRNet-w32 | 256x256 | P2 | 0.3741 | [hrnet_animalkingdom.md](./ak/hrnet_animalkingdom.md) |

+| HRNet-w32 | 256x256 | P3_mammals | 0.571 | [hrnet_animalkingdom.md](./ak/hrnet_animalkingdom.md) |

+| HRNet-w32 | 256x256 | P3_amphibians | 0.5358 | [hrnet_animalkingdom.md](./ak/hrnet_animalkingdom.md) |

+| HRNet-w32 | 256x256 | P3_reptiles | 0.51 | [hrnet_animalkingdom.md](./ak/hrnet_animalkingdom.md) |

+| HRNet-w32 | 256x256 | P3_birds | 0.7671 | [hrnet_animalkingdom.md](./ak/hrnet_animalkingdom.md) |

+| HRNet-w32 | 256x256 | P3_fishes | 0.6406 | [hrnet_animalkingdom.md](./ak/hrnet_animalkingdom.md) |
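
The heatmap paradigm described at the top of this README can be illustrated with the `MSRAHeatmap` settings used by the Animal Kingdom configs in this diff: `input_size=(256, 256)`, `heatmap_size=(64, 64)`, and `sigma=2`. The sketch below renders one keypoint as a 2-D Gaussian on the 64x64 grid and decodes it back by taking the argmax.

This is a simplification. As far as I know, mmpose's codec writes the Gaussian only inside a 3-sigma window, and its decoder adds a sub-pixel refinement step; both are omitted here. The helper names are hypothetical.

```python
import math

# Values from the MSRAHeatmap codec in the Animal Kingdom configs
INPUT_SIZE = (256, 256)    # (w, h) of the cropped, affine-warped input
HEATMAP_SIZE = (64, 64)    # (w, h) of the predicted heatmap
SIGMA = 2.0                # Gaussian std, in heatmap pixels


def encode_heatmap(x, y):
    """Hypothetical helper: render one keypoint as a 2-D Gaussian (list of rows)."""
    sx = HEATMAP_SIZE[0] / INPUT_SIZE[0]   # 0.25, i.e. a stride of 4
    sy = HEATMAP_SIZE[1] / INPUT_SIZE[1]
    cx, cy = int(x * sx + 0.5), int(y * sy + 0.5)   # round half up to a grid cell
    return [[math.exp(-((i - cx) ** 2 + (j - cy) ** 2) / (2 * SIGMA ** 2))
             for i in range(HEATMAP_SIZE[0])]
            for j in range(HEATMAP_SIZE[1])]


def decode_heatmap(heatmap):
    """Hypothetical helper: argmax location mapped back to input-pixel coords, plus its score."""
    best_val, best_x, best_y = -1.0, 0, 0
    for j, row in enumerate(heatmap):
        for i, v in enumerate(row):
            if v > best_val:
                best_val, best_x, best_y = v, i, j
    return (best_x * INPUT_SIZE[0] / HEATMAP_SIZE[0],
            best_y * INPUT_SIZE[1] / HEATMAP_SIZE[1],
            best_val)


hm = encode_heatmap(131.0, 61.0)
print(decode_heatmap(hm))   # (132.0, 60.0, 1.0)
```

The decoded point snaps to the 4-pixel grid implied by the 256/64 stride, which is why heatmap decoders usually add a sub-pixel refinement step.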

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/hrnet_animalkingdom.md b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/hrnet_animalkingdom.md

new file mode 100644

index 0000000000000000000000000000000000000000..f32fb49d90f213a8511b8b09e340a9773907b9b1

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/hrnet_animalkingdom.md

@@ -0,0 +1,47 @@

+HRNet (CVPR'2019)

+
+```bibtex
+@inproceedings{sun2019deep,
+  title={Deep high-resolution representation learning for human pose estimation},
+  author={Sun, Ke and Xiao, Bin and Liu, Dong and Wang, Jingdong},
+  booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},
+  pages={5693--5703},
+  year={2019}
+}
+```

+

+Results on AnimalKingdom validation set

+

+| Arch | Input Size | PCK(0.05) | PCK(0.05), Official Repo | PCK(0.05), Paper | ckpt | log |

+| ------------------------------------------------------ | ---------- | --------- | ------------- | ------ | ------------------------------------------------------ | ------------------------------------------------------ |

+| [P1_hrnet_w32](configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256.py) | 256x256 | 0.6323 | 0.6342 | 0.6606 | [ckpt](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256-08bf96cb_20230519.pth) | [log](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256-08bf96cb_20230519.json) |

+| [P2_hrnet_w32](configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256.py) | 256x256 | 0.3741 | 0.3726 | 0.393 | [ckpt](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256-2396cc58_20230519.pth) | [log](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256-2396cc58_20230519.json) |

+| [P3_mammals_hrnet_w32](configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256.py) | 256x256 | 0.571 | 0.5719 | 0.6159 | [ckpt](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256-e8aadf02_20230519.pth) | [log](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256-e8aadf02_20230519.json) |

+| [P3_amphibians_hrnet_w32](configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256.py) | 256x256 | 0.5358 | 0.5432 | 0.5674 | [ckpt](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256-845085f9_20230519.pth) | [log](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256-845085f9_20230519.json) |

+| [P3_reptiles_hrnet_w32](configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256.py) | 256x256 | 0.51 | 0.5 | 0.5606 | [ckpt](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256-e8440c16_20230519.pth) | [log](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256-e8440c16_20230519.json) |

+| [P3_birds_hrnet_w32](configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256.py) | 256x256 | 0.7671 | 0.7636 | 0.7735 | [ckpt](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256-566feff5_20230519.pth) | [log](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256-566feff5_20230519.json) |

+| [P3_fishes_hrnet_w32](configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256.py) | 256x256 | 0.6406 | 0.636 | 0.6825 | [ckpt](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256-76c3999f_20230519.pth) | [log](https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256-76c3999f_20230519.json) |
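
The PCK(0.05) column counts a keypoint as correct when its error is below 5% of a normalization length. The Animal Kingdom configs set `val_evaluator` to `PCKAccuracy` with `thr=0.05`. My understanding is that its default normalization (`norm_item='bbox'`) uses the longer side of each instance's ground-truth box and averages accuracy per keypoint type. The sketch below follows that understanding in plain Python. `pck_bbox` is a hypothetical helper, not an mmpose function.

```python
import math


def pck_bbox(preds, gts, visible, bboxes, thr=0.05):
    """PCK with distances normalized by the longer side of each instance's bbox.

    preds, gts: [N][K] lists of (x, y); visible: [N][K] bools;
    bboxes: [N] tuples (x1, y1, x2, y2). Accuracy is computed per keypoint
    type first, then averaged over types with at least one visible label.
    """
    num_kpts = len(preds[0])
    per_kpt = []
    for k in range(num_kpts):
        hits = []
        for n, (x1, y1, x2, y2) in enumerate(bboxes):
            if not visible[n][k]:
                continue
            norm = max(x2 - x1, y2 - y1)   # longer bbox side, in pixels
            hits.append(math.dist(preds[n][k], gts[n][k]) / norm < thr)
        if hits:
            per_kpt.append(sum(hits) / len(hits))
    return sum(per_kpt) / len(per_kpt) if per_kpt else 0.0


# Two instances with two keypoints each (keypoint 1 of instance 1 is unlabeled)
bboxes = [(0, 0, 200, 100), (0, 0, 100, 100)]
preds = [[(5, 5), (50, 50)], [(0, 0), (10, 10)]]
gts = [[(5, 13), (50, 70)], [(6, 8), (10, 10)]]
visible = [[True, True], [True, False]]
print(pck_bbox(preds, gts, visible, bboxes))   # 0.25
```

With a 200-pixel box, the 0.05 threshold allows 10 pixels of error. With a 100-pixel box, it allows only 5, so the same absolute error can pass on a large animal and fail on a small one.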

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/hrnet_animalkingdom.yml b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/hrnet_animalkingdom.yml

new file mode 100644

index 0000000000000000000000000000000000000000..12f208a10b7a784e1a9faf444845c128bdfb4e88

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/hrnet_animalkingdom.yml

@@ -0,0 +1,86 @@

+Models:

+- Config: configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: &id001

+ - HRNet

+ Training Data: AnimalKingdom_P1

+ Name: td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256

+ Results:

+ - Dataset: AnimalKingdom

+ Metrics:

+ PCK: 0.6323

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256-08bf96cb_20230519.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: *id001

+ Training Data: AnimalKingdom_P2

+ Name: td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256

+ Results:

+ - Dataset: AnimalKingdom

+ Metrics:

+ PCK: 0.3741

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256-2396cc58_20230519.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: *id001

+ Training Data: AnimalKingdom_P3_amphibian

+ Name: td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256

+ Results:

+ - Dataset: AnimalKingdom

+ Metrics:

+ PCK: 0.5358

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256-845085f9_20230519.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: *id001

+ Training Data: AnimalKingdom_P3_bird

+ Name: td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256

+ Results:

+ - Dataset: AnimalKingdom

+ Metrics:

+ PCK: 0.7671

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256-566feff5_20230519.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: *id001

+ Training Data: AnimalKingdom_P3_fish

+ Name: td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256

+ Results:

+ - Dataset: AnimalKingdom

+ Metrics:

+ PCK: 0.6406

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256-76c3999f_20230519.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: *id001

+ Training Data: AnimalKingdom_P3_mammal

+ Name: td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256

+ Results:

+ - Dataset: AnimalKingdom

+ Metrics:

+ PCK: 0.571

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256-e8aadf02_20230519.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: *id001

+ Training Data: AnimalKingdom_P3_reptile

+ Name: td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256

+ Results:

+ - Dataset: AnimalKingdom

+ Metrics:

+ PCK: 0.51

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/v1/animal_2d_keypoint/topdown_heatmap/animal_kingdom/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256-e8440c16_20230519.pth

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256.py

new file mode 100644

index 0000000000000000000000000000000000000000..0e7eb0136e9f8476c6863b52e9c2a366b7245fc3

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P1-256x256.py

@@ -0,0 +1,146 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=300, val_interval=10)

+

+# optimizer

+optim_wrapper = dict(optimizer=dict(

+ type='AdamW',

+ lr=5e-4,

+))

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(checkpoint=dict(save_best='PCK', rule='greater'))

+

+# codec settings

+codec = dict(

+ type='MSRAHeatmap', input_size=(256, 256), heatmap_size=(64, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='HRNet',

+ in_channels=3,

+ extra=dict(

+ stage1=dict(

+ num_modules=1,

+ num_branches=1,

+ block='BOTTLENECK',

+ num_blocks=(4, ),

+ num_channels=(64, )),

+ stage2=dict(

+ num_modules=1,

+ num_branches=2,

+ block='BASIC',

+ num_blocks=(4, 4),

+ num_channels=(32, 64)),

+ stage3=dict(

+ num_modules=4,

+ num_branches=3,

+ block='BASIC',

+ num_blocks=(4, 4, 4),

+ num_channels=(32, 64, 128)),

+ stage4=dict(

+ num_modules=3,

+ num_branches=4,

+ block='BASIC',

+ num_blocks=(4, 4, 4, 4),

+ num_channels=(32, 64, 128, 256))),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'pretrain_models/hrnet_w32-36af842e.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=32,

+ out_channels=23,

+ deconv_out_channels=None,

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=True,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalKingdomDataset'

+data_mode = 'topdown'

+data_root = 'data/ak/'

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=2,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P1/train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=24,

+ num_workers=2,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P1/test.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = [dict(type='PCKAccuracy', thr=0.05), dict(type='AUC')]

+test_evaluator = val_evaluator
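
In the P1 config, `train_cfg` runs 300 epochs, while the `MultiStepLR` scheduler is declared with `end=210` and `milestones=[170, 200]`. Under MMEngine's usual behaviour, a parameter scheduler stops updating once it passes `end`. If that holds here, the LR stays at its last value (`5e-4 * 0.1 ** 2`) for epochs 210 to 299. The sketch below makes that schedule explicit. `lr_at_epoch` is a hypothetical helper, and it ignores the 500-iteration warm-up.

```python
# Values copied from the P1 config
BASE_LR = 5e-4
MAX_EPOCHS = 300
MILESTONES = (170, 200)
GAMMA = 0.1
SCHED_END = 210          # MultiStepLR only updates for epochs in [0, 210)


def lr_at_epoch(epoch):
    """Epoch-level LR, ignoring the 500-iteration linear warm-up."""
    # Assumption: past `end`, the scheduler keeps its last value
    active_epoch = min(epoch, SCHED_END - 1)
    num_decays = sum(active_epoch >= m for m in MILESTONES)
    return BASE_LR * GAMMA ** num_decays


for e in (0, 169, 170, 200, MAX_EPOCHS - 1):
    print(e, lr_at_epoch(e))
```

The same schedule appears in the other Animal Kingdom configs in this diff, so each of them trains its last 100 epochs at the smallest LR.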

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256.py

new file mode 100644

index 0000000000000000000000000000000000000000..f42057f8aa91de0ae2a234c7625dce725adf204b

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P2-256x256.py

@@ -0,0 +1,146 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=300, val_interval=10)

+

+# optimizer

+optim_wrapper = dict(optimizer=dict(

+ type='AdamW',

+ lr=5e-4,

+))

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(checkpoint=dict(save_best='PCK', rule='greater'))

+

+# codec settings

+codec = dict(

+ type='MSRAHeatmap', input_size=(256, 256), heatmap_size=(64, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='HRNet',

+ in_channels=3,

+ extra=dict(

+ stage1=dict(

+ num_modules=1,

+ num_branches=1,

+ block='BOTTLENECK',

+ num_blocks=(4, ),

+ num_channels=(64, )),

+ stage2=dict(

+ num_modules=1,

+ num_branches=2,

+ block='BASIC',

+ num_blocks=(4, 4),

+ num_channels=(32, 64)),

+ stage3=dict(

+ num_modules=4,

+ num_branches=3,

+ block='BASIC',

+ num_blocks=(4, 4, 4),

+ num_channels=(32, 64, 128)),

+ stage4=dict(

+ num_modules=3,

+ num_branches=4,

+ block='BASIC',

+ num_blocks=(4, 4, 4, 4),

+ num_channels=(32, 64, 128, 256))),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'pretrain_models/hrnet_w32-36af842e.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=32,

+ out_channels=23,

+ deconv_out_channels=None,

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=True,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalKingdomDataset'

+data_mode = 'topdown'

+data_root = 'data/ak/'

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=2,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P2/train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=24,

+ num_workers=2,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P2/test.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = [dict(type='PCKAccuracy', thr=0.05), dict(type='AUC')]

+test_evaluator = val_evaluator
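
The Animal Kingdom configs in this diff set `test_cfg=dict(flip_test=True, flip_mode='heatmap', shift_heatmap=True)`. As I understand it, the model then also predicts on a horizontally mirrored crop and fuses the two results in four steps:

1. Mirror the flipped-crop heatmaps back.
2. Swap the left/right keypoint channels.
3. Shift the heatmaps one column to the right. This is commonly explained as compensating for a one-pixel misalignment introduced by flipping.
4. Average them with the original prediction.

The sketch below shows that fusion on nested lists. `flip_test_average` and the two-keypoint `flip_indices=[1, 0]` in the usage are hypothetical; the real flip pairs come from the Animal Kingdom dataset metainfo.

```python
def flip_test_average(heatmaps, flipped_heatmaps, flip_indices, shift_heatmap=True):
    """Fuse heatmaps from an image and its horizontal mirror.

    heatmaps, flipped_heatmaps: [K][H][W] nested lists. flipped_heatmaps were
    predicted on the mirrored image, so each channel is mirrored back along W,
    left/right channels are swapped via flip_indices, and optionally shifted
    one column to the right (column 0 keeps its value) before averaging.
    """
    restored = []
    for k in range(len(heatmaps)):
        src = flipped_heatmaps[flip_indices[k]]            # swap left/right channels
        chan = [row[::-1] for row in src]                   # undo the horizontal flip
        if shift_heatmap:
            chan = [[row[0]] + row[:-1] for row in chan]    # out[i] = in[i - 1]
        restored.append(chan)
    return [[[(a + b) / 2 for a, b in zip(r1, r2)]
             for r1, r2 in zip(h1, h2)]
            for h1, h2 in zip(heatmaps, restored)]


# Two hypothetical mirror-paired keypoints on a 1x5 heatmap
heatmaps = [[[0.0, 0.0, 1.0, 0.0, 0.0]], [[0.0, 0.0, 0.0, 1.0, 0.0]]]
flipped = [[[0.0, 0.0, 1.0, 0.0, 0.0]], [[0.0, 0.0, 0.0, 1.0, 0.0]]]
fused = flip_test_average(heatmaps, flipped, flip_indices=[1, 0])
print(fused == heatmaps)   # True: after flip, swap and shift, the peaks line up
```

Running the same inputs with `shift_heatmap=False` splits each peak across two adjacent columns. This makes the one-column offset that the shift is meant to correct easy to see.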

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256.py

new file mode 100644

index 0000000000000000000000000000000000000000..5a83e7a97b9478031f7ca4dcc4dccba0350d432d

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_amphibian-256x256.py

@@ -0,0 +1,146 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=300, val_interval=10)

+

+# optimizer

+optim_wrapper = dict(optimizer=dict(

+ type='AdamW',

+ lr=5e-4,

+))

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(checkpoint=dict(save_best='PCK', rule='greater'))

+

+# codec settings

+codec = dict(

+ type='MSRAHeatmap', input_size=(256, 256), heatmap_size=(64, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='HRNet',

+ in_channels=3,

+ extra=dict(

+ stage1=dict(

+ num_modules=1,

+ num_branches=1,

+ block='BOTTLENECK',

+ num_blocks=(4, ),

+ num_channels=(64, )),

+ stage2=dict(

+ num_modules=1,

+ num_branches=2,

+ block='BASIC',

+ num_blocks=(4, 4),

+ num_channels=(32, 64)),

+ stage3=dict(

+ num_modules=4,

+ num_branches=3,

+ block='BASIC',

+ num_blocks=(4, 4, 4),

+ num_channels=(32, 64, 128)),

+ stage4=dict(

+ num_modules=3,

+ num_branches=4,

+ block='BASIC',

+ num_blocks=(4, 4, 4, 4),

+ num_channels=(32, 64, 128, 256))),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'pretrain_models/hrnet_w32-36af842e.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=32,

+ out_channels=23,

+ deconv_out_channels=None,

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=True,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalKingdomDataset'

+data_mode = 'topdown'

+data_root = 'data/ak/'

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=2,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_amphibian/train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=24,

+ num_workers=2,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_amphibian/test.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = [dict(type='PCKAccuracy', thr=0.05), dict(type='AUC')]

+test_evaluator = val_evaluator
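The learning-rate policy in these configs combines a 500-iteration linear warm-up with step decay at epochs 170 and 200. The plain-Python sketch below computes the resulting rate, assuming MMEngine's usual semantics. Under those semantics, the warm-up factor ramps linearly from `start_factor` to 1.0, and each milestone reached multiplies the rate by `gamma`. `lr_at` and its `iters_per_epoch` argument are illustrative. The real iteration count depends on the dataset size and batch size.

```python
def lr_at(epoch, iter_in_epoch, iters_per_epoch, base_lr=5e-4,
          warmup_iters=500, start_factor=0.001,
          milestones=(170, 200), gamma=0.1):
    """Learning rate under LinearLR warm-up followed by MultiStepLR decay."""
    global_iter = epoch * iters_per_epoch + iter_in_epoch
    # MultiStepLR (by_epoch=True): multiply by gamma once per milestone reached.
    factor = gamma ** sum(epoch >= m for m in milestones)
    # LinearLR (by_epoch=False): ramp from start_factor to 1.0 over warmup_iters.
    if global_iter < warmup_iters:
        factor *= start_factor + (1.0 - start_factor) * global_iter / warmup_iters
    return base_lr * factor


# Hypothetical 100 iterations per epoch.
for epoch in (0, 10, 170, 200, 250):
    print(epoch, lr_at(epoch, 0, iters_per_epoch=100))
```

The `MultiStepLR` entry stops at `end=210`, while `max_epochs=300`. Under this reading, epochs 200 to 299 all run at 1% of the base rate.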

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256.py

new file mode 100644

index 0000000000000000000000000000000000000000..ca3c91af610fe995aa24106e0bc6f72b012f9228

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_bird-256x256.py

@@ -0,0 +1,146 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=300, val_interval=10)

+

+# optimizer

+optim_wrapper = dict(optimizer=dict(

+ type='AdamW',

+ lr=5e-4,

+))

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(checkpoint=dict(save_best='PCK', rule='greater'))

+

+# codec settings

+codec = dict(

+ type='MSRAHeatmap', input_size=(256, 256), heatmap_size=(64, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='HRNet',

+ in_channels=3,

+ extra=dict(

+ stage1=dict(

+ num_modules=1,

+ num_branches=1,

+ block='BOTTLENECK',

+ num_blocks=(4, ),

+ num_channels=(64, )),

+ stage2=dict(

+ num_modules=1,

+ num_branches=2,

+ block='BASIC',

+ num_blocks=(4, 4),

+ num_channels=(32, 64)),

+ stage3=dict(

+ num_modules=4,

+ num_branches=3,

+ block='BASIC',

+ num_blocks=(4, 4, 4),

+ num_channels=(32, 64, 128)),

+ stage4=dict(

+ num_modules=3,

+ num_branches=4,

+ block='BASIC',

+ num_blocks=(4, 4, 4, 4),

+ num_channels=(32, 64, 128, 256))),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'pretrain_models/hrnet_w32-36af842e.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=32,

+ out_channels=23,

+ deconv_out_channels=None,

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=True,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalKingdomDataset'

+data_mode = 'topdown'

+data_root = 'data/ak/'

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=2,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_bird/train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=24,

+ num_workers=2,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_bird/test.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = [dict(type='PCKAccuracy', thr=0.05), dict(type='AUC')]

+test_evaluator = val_evaluator
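`auto_scale_lr = dict(base_batch_size=512)` applies the linear scaling rule: the configured `lr` is multiplied by the actual total batch size divided by 512. The `8xb32` in the file name follows the OpenMMLab convention of 8 GPUs with 32 samples each, for 256 samples in total. The minimal sketch below assumes scaling is switched on. As we understand it, MMPose's `tools/train.py` makes this opt-in via `--auto-scale-lr`. `auto_scaled_lr` is a hypothetical helper.

```python
def auto_scaled_lr(base_lr, num_gpus, batch_per_gpu, base_batch_size=512):
    """Linear scaling rule: lr grows in proportion to the total batch size."""
    real_batch_size = num_gpus * batch_per_gpu
    return base_lr * real_batch_size / base_batch_size


print(auto_scaled_lr(5e-4, num_gpus=8, batch_per_gpu=32))   # 8xb32 -> half of 5e-4
print(auto_scaled_lr(5e-4, num_gpus=16, batch_per_gpu=32))  # 512 samples -> unchanged
```

With the default 8 × 32 setup, the scaled rate is half of `lr=5e-4`. If scaling is left off, the configured value is used unchanged.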

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256.py

new file mode 100644

index 0000000000000000000000000000000000000000..3923f30d104b22c21a4f1b1252a09e3fcbfb99fd

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_fish-256x256.py

@@ -0,0 +1,146 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=300, val_interval=10)

+

+# optimizer

+optim_wrapper = dict(optimizer=dict(

+ type='AdamW',

+ lr=5e-4,

+))

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(checkpoint=dict(save_best='PCK', rule='greater'))

+

+# codec settings

+codec = dict(

+ type='MSRAHeatmap', input_size=(256, 256), heatmap_size=(64, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='HRNet',

+ in_channels=3,

+ extra=dict(

+ stage1=dict(

+ num_modules=1,

+ num_branches=1,

+ block='BOTTLENECK',

+ num_blocks=(4, ),

+ num_channels=(64, )),

+ stage2=dict(

+ num_modules=1,

+ num_branches=2,

+ block='BASIC',

+ num_blocks=(4, 4),

+ num_channels=(32, 64)),

+ stage3=dict(

+ num_modules=4,

+ num_branches=3,

+ block='BASIC',

+ num_blocks=(4, 4, 4),

+ num_channels=(32, 64, 128)),

+ stage4=dict(

+ num_modules=3,

+ num_branches=4,

+ block='BASIC',

+ num_blocks=(4, 4, 4, 4),

+ num_channels=(32, 64, 128, 256))),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'pretrain_models/hrnet_w32-36af842e.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=32,

+ out_channels=23,

+ deconv_out_channels=None,

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=True,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalKingdomDataset'

+data_mode = 'topdown'

+data_root = 'data/ak/'

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=2,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_fish/train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=24,

+ num_workers=2,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_fish/test.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = [dict(type='PCKAccuracy', thr=0.05), dict(type='AUC')]

+test_evaluator = val_evaluator
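The `MSRAHeatmap` codec turns each keypoint into a Gaussian on a 64×64 grid. That is a stride of 4 relative to the 256×256 input, with `sigma=2` measured in heatmap pixels. The simplified pure-Python sketch below handles a single keypoint. It rounds the scaled location to the nearest grid cell and evaluates the Gaussian over the whole grid. Our understanding is that the library version differs in two ways that this sketch omits. It only fills a window of about 3σ around the center, and it zeroes the target weight of keypoints that fall outside the heatmap. `msra_heatmap` is hypothetical.

```python
import math


def msra_heatmap(keypoint, input_size=(256, 256), heatmap_size=(64, 64), sigma=2.0):
    """Render one keypoint (x, y), in input-crop pixels, as a Gaussian heatmap [H][W]."""
    w, h = heatmap_size
    stride_x = input_size[0] / heatmap_size[0]
    stride_y = input_size[1] / heatmap_size[1]
    # Map to the heatmap grid and round to the nearest integer cell.
    mu_x = int(keypoint[0] / stride_x + 0.5)
    mu_y = int(keypoint[1] / stride_y + 0.5)
    return [[math.exp(-((x - mu_x) ** 2 + (y - mu_y) ** 2) / (2 * sigma ** 2))
             for x in range(w)] for y in range(h)]


hm = msra_heatmap((128.0, 100.0))
print(hm[25][32])  # 1.0, the peak at (128 / 4, 100 / 4) rounded
```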

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256.py

new file mode 100644

index 0000000000000000000000000000000000000000..d061c4b6fbc2e01a0b30241cca7fd5212fe29eca

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_mammal-256x256.py

@@ -0,0 +1,146 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=300, val_interval=10)

+

+# optimizer

+optim_wrapper = dict(optimizer=dict(

+ type='AdamW',

+ lr=5e-4,

+))

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(checkpoint=dict(save_best='PCK', rule='greater'))

+

+# codec settings

+codec = dict(

+ type='MSRAHeatmap', input_size=(256, 256), heatmap_size=(64, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='HRNet',

+ in_channels=3,

+ extra=dict(

+ stage1=dict(

+ num_modules=1,

+ num_branches=1,

+ block='BOTTLENECK',

+ num_blocks=(4, ),

+ num_channels=(64, )),

+ stage2=dict(

+ num_modules=1,

+ num_branches=2,

+ block='BASIC',

+ num_blocks=(4, 4),

+ num_channels=(32, 64)),

+ stage3=dict(

+ num_modules=4,

+ num_branches=3,

+ block='BASIC',

+ num_blocks=(4, 4, 4),

+ num_channels=(32, 64, 128)),

+ stage4=dict(

+ num_modules=3,

+ num_branches=4,

+ block='BASIC',

+ num_blocks=(4, 4, 4, 4),

+ num_channels=(32, 64, 128, 256))),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'pretrain_models/hrnet_w32-36af842e.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=32,

+ out_channels=23,

+ deconv_out_channels=None,

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=True,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalKingdomDataset'

+data_mode = 'topdown'

+data_root = 'data/ak/'

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=2,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_mammal/train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=24,

+ num_workers=2,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_mammal/test.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = [dict(type='PCKAccuracy', thr=0.05), dict(type='AUC')]

+test_evaluator = val_evaluator
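The test config sets `flip_test=True`, `flip_mode='heatmap'` and `shift_heatmap=True`. With these settings, the model also runs on the horizontally flipped crop, and that prediction is mapped back before being averaged with the original. The plain-Python sketch below shows the mapping as we understand it. It mirrors each heatmap row, swaps the left and right keypoint channels, and then shifts everything one pixel to the right. The SimpleBaseline/HRNet code commonly explains this shift as compensating for a feature misalignment introduced by flipping. The `flip_indices` list is an assumption here. In practice it comes from the dataset's left/right keypoint pairs.

```python
def flip_back(heatmaps, flip_indices, shift_heatmap=True):
    """Map heatmaps [K][H][W] predicted on a flipped crop back to the original.

    flip_indices[k] is the index of the mirrored partner of keypoint k.
    """
    out = []
    for k in range(len(heatmaps)):
        # Take the left/right partner channel and mirror each row.
        channel = [row[::-1] for row in heatmaps[flip_indices[k]]]
        if shift_heatmap:
            # Shift one pixel to the right, repeating the first column.
            channel = [[row[0]] + row[:-1] for row in channel]
        out.append(channel)
    return out


def flip_test(hm_orig, hm_flipped, flip_indices, shift_heatmap=True):
    """Average the original prediction with the flipped-back prediction."""
    back = flip_back(hm_flipped, flip_indices, shift_heatmap)
    return [[[(a + b) / 2 for a, b in zip(r1, r2)] for r1, r2 in zip(c1, c2)]
            for c1, c2 in zip(hm_orig, back)]


# Two keypoints that are mirror partners of each other; a 1x5 heatmap each.
flipped = [[[0, 1, 0, 0, 0]], [[0, 0, 0, 0, 0]]]
print(flip_back(flipped, [1, 0]))  # [[[0, 0, 0, 0, 0]], [[0, 0, 0, 0, 1]]]
```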

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256.py

new file mode 100644

index 0000000000000000000000000000000000000000..b06a49936bad84e9e01cd5510e779e1909d56520

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/ak/td-hm_hrnet-w32_8xb32-300e_animalkingdom_P3_reptile-256x256.py

@@ -0,0 +1,146 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=300, val_interval=10)

+

+# optimizer

+optim_wrapper = dict(optimizer=dict(

+ type='AdamW',

+ lr=5e-4,

+))

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(checkpoint=dict(save_best='PCK', rule='greater'))

+

+# codec settings

+codec = dict(

+ type='MSRAHeatmap', input_size=(256, 256), heatmap_size=(64, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='HRNet',

+ in_channels=3,

+ extra=dict(

+ stage1=dict(

+ num_modules=1,

+ num_branches=1,

+ block='BOTTLENECK',

+ num_blocks=(4, ),

+ num_channels=(64, )),

+ stage2=dict(

+ num_modules=1,

+ num_branches=2,

+ block='BASIC',

+ num_blocks=(4, 4),

+ num_channels=(32, 64)),

+ stage3=dict(

+ num_modules=4,

+ num_branches=3,

+ block='BASIC',

+ num_blocks=(4, 4, 4),

+ num_channels=(32, 64, 128)),

+ stage4=dict(

+ num_modules=3,

+ num_branches=4,

+ block='BASIC',

+ num_blocks=(4, 4, 4, 4),

+ num_channels=(32, 64, 128, 256))),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'pretrain_models/hrnet_w32-36af842e.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=32,

+ out_channels=23,

+ deconv_out_channels=None,

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=True,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalKingdomDataset'

+data_mode = 'topdown'

+data_root = 'data/ak/'

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size']),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=2,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_reptile/train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=24,

+ num_workers=2,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/ak_P3_reptile/test.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = [dict(type='PCKAccuracy', thr=0.05), dict(type='AUC')]

+test_evaluator = val_evaluator
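At test time, the output of `HeatmapHead` is decoded back to coordinates. The simplified sketch below shows arg-max decoding with the quarter-pixel refinement from the original HRNet/SimpleBaseline code. It takes the peak, nudges it 0.25 pixel toward the higher neighbour on each axis, and then scales by the stride. We assume that `MSRAHeatmap` with its default `unbiased=False` decodes equivalently, though its exact border handling may differ. `decode_heatmap` is hypothetical. Its result is in the coordinates of the 256×256 crop, before the `TopdownAffine` transform is inverted.

```python
def decode_heatmap(heatmap, input_size=(256, 256), heatmap_size=(64, 64)):
    """Return ((x, y), score) in input-crop pixels for one [H][W] heatmap."""
    h, w = len(heatmap), len(heatmap[0])
    # Arg-max over the grid.
    score, y, x = max((v, yy, xx) for yy, row in enumerate(heatmap)
                      for xx, v in enumerate(row))
    fx, fy = float(x), float(y)
    # Quarter-pixel refinement toward the higher neighbour (skipped at borders).
    if 0 < x < w - 1:
        dx = heatmap[y][x + 1] - heatmap[y][x - 1]
        fx += 0.25 * ((dx > 0) - (dx < 0))
    if 0 < y < h - 1:
        dy = heatmap[y + 1][x] - heatmap[y - 1][x]
        fy += 0.25 * ((dy > 0) - (dy < 0))
    # Scale from heatmap cells back to input pixels (stride 4 here).
    scale_x = input_size[0] / heatmap_size[0]
    scale_y = input_size[1] / heatmap_size[1]
    return (fx * scale_x, fy * scale_y), score


hm = [[0.0] * 64 for _ in range(64)]
hm[25][32], hm[25][33] = 1.0, 0.5  # peak with a stronger right neighbour
print(decode_heatmap(hm))  # ((129.0, 100.0), 1.0)
```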

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/hrnet_animalpose.md b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/hrnet_animalpose.md

new file mode 100644

index 0000000000000000000000000000000000000000..58b971313fbf4a446a2c9720ac0a687fcc956513

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/hrnet_animalpose.md

@@ -0,0 +1,40 @@

+

+

+Animal-Pose (ICCV'2019)

+
+```bibtex
+@InProceedings{Cao_2019_ICCV,
+  author = {Cao, Jinkun and Tang, Hongyang and Fang, Hao-Shu and Shen, Xiaoyong and Lu, Cewu and Tai, Yu-Wing},
+  title = {Cross-Domain Adaptation for Animal Pose Estimation},
+  booktitle = {The IEEE International Conference on Computer Vision (ICCV)},
+  month = {October},
+  year = {2019}
+}
+```
+
+

+

+

+

+

+HRNet (CVPR'2019)

+
+```bibtex
+@inproceedings{sun2019deep,
+  title={Deep high-resolution representation learning for human pose estimation},
+  author={Sun, Ke and Xiao, Bin and Liu, Dong and Wang, Jingdong},
+  booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},
+  pages={5693--5703},
+  year={2019}
+}
+```
+
+

+

+

+Results on AnimalPose validation set (1117 instances)

+

+| Arch | Input Size | AP | AP50 | AP75 | AR | AR50 | ckpt | log |

+| :-------------------------------------------- | :--------: | :---: | :-------------: | :-------------: | :---: | :-------------: | :-------------------------------------------: | :-------------------------------------------: |

+| [pose_hrnet_w32](/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_hrnet-w32_8xb64-210e_animalpose-256x256.py) | 256x256 | 0.740 | 0.959 | 0.833 | 0.780 | 0.965 | [ckpt](https://download.openmmlab.com/mmpose/animal/hrnet/hrnet_w32_animalpose_256x256-1aa7f075_20210426.pth) | [log](https://download.openmmlab.com/mmpose/animal/hrnet/hrnet_w32_animalpose_256x256_20210426.log.json) |

+| [pose_hrnet_w48](/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_hrnet-w48_8xb64-210e_animalpose-256x256.py) | 256x256 | 0.738 | 0.958 | 0.831 | 0.778 | 0.962 | [ckpt](https://download.openmmlab.com/mmpose/animal/hrnet/hrnet_w48_animalpose_256x256-34644726_20210426.pth) | [log](https://download.openmmlab.com/mmpose/animal/hrnet/hrnet_w48_animalpose_256x256_20210426.log.json) |

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/hrnet_animalpose.yml b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/hrnet_animalpose.yml

new file mode 100644

index 0000000000000000000000000000000000000000..caba133370aafff30be90aa9171f8df66fefe7f4

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/hrnet_animalpose.yml

@@ -0,0 +1,34 @@

+Models:

+- Config: configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_hrnet-w32_8xb64-210e_animalpose-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: &id001

+ - HRNet

+ Training Data: Animal-Pose

+ Name: td-hm_hrnet-w32_8xb64-210e_animalpose-256x256

+ Results:

+ - Dataset: Animal-Pose

+ Metrics:

+ AP: 0.740

+ AP@0.5: 0.959

+ AP@0.75: 0.833

+ AR: 0.780

+ AR@0.5: 0.965

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/animal/hrnet/hrnet_w32_animalpose_256x256-1aa7f075_20210426.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_hrnet-w48_8xb64-210e_animalpose-256x256.py

+ In Collection: HRNet

+ Metadata:

+ Architecture: *id001

+ Training Data: Animal-Pose

+ Name: td-hm_hrnet-w48_8xb64-210e_animalpose-256x256

+ Results:

+ - Dataset: Animal-Pose

+ Metrics:

+ AP: 0.738

+ AP@0.5: 0.958

+ AP@0.75: 0.831

+ AR: 0.778

+ AR@0.5: 0.962

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/animal/hrnet/hrnet_w48_animalpose_256x256-34644726_20210426.pth

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/resnet_animalpose.md b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/resnet_animalpose.md

new file mode 100644

index 0000000000000000000000000000000000000000..20ddf54031e18f8bb9150fccfccff1f6cd5949bf

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/resnet_animalpose.md

@@ -0,0 +1,41 @@

+

+

+Animal-Pose (ICCV'2019)

+
+```bibtex
+@InProceedings{Cao_2019_ICCV,
+  author = {Cao, Jinkun and Tang, Hongyang and Fang, Hao-Shu and Shen, Xiaoyong and Lu, Cewu and Tai, Yu-Wing},
+  title = {Cross-Domain Adaptation for Animal Pose Estimation},
+  booktitle = {The IEEE International Conference on Computer Vision (ICCV)},
+  month = {October},
+  year = {2019}
+}
+```
+
+

+

+

+

+

+SimpleBaseline2D (ECCV'2018)

+
+```bibtex
+@inproceedings{xiao2018simple,
+  title={Simple baselines for human pose estimation and tracking},
+  author={Xiao, Bin and Wu, Haiping and Wei, Yichen},
+  booktitle={Proceedings of the European conference on computer vision (ECCV)},
+  pages={466--481},
+  year={2018}
+}
+```
+
+

+

+

+Results on AnimalPose validation set (1117 instances)

+

+| Arch | Input Size | AP | AP50 | AP75 | AR | AR50 | ckpt | log |

+| :-------------------------------------------- | :--------: | :---: | :-------------: | :-------------: | :---: | :-------------: | :-------------------------------------------: | :-------------------------------------------: |

+| [pose_resnet_50](/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_res50_8xb64-210e_animalpose-256x256.py) | 256x256 | 0.691 | 0.947 | 0.770 | 0.736 | 0.955 | [ckpt](https://download.openmmlab.com/mmpose/animal/resnet/res50_animalpose_256x256-e1f30bff_20210426.pth) | [log](https://download.openmmlab.com/mmpose/animal/resnet/res50_animalpose_256x256_20210426.log.json) |

+| [pose_resnet_101](/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_res101_8xb64-210e_animalpose-256x256.py) | 256x256 | 0.696 | 0.948 | 0.774 | 0.736 | 0.951 | [ckpt](https://download.openmmlab.com/mmpose/animal/resnet/res101_animalpose_256x256-85563f4a_20210426.pth) | [log](https://download.openmmlab.com/mmpose/animal/resnet/res101_animalpose_256x256_20210426.log.json) |

+| [pose_resnet_152](/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_res152_8xb32-210e_animalpose-256x256.py) | 256x256 | 0.704 | 0.938 | 0.786 | 0.748 | 0.946 | [ckpt](https://download.openmmlab.com/mmpose/animal/resnet/res152_animalpose_256x256-a0a7506c_20210426.pth) | [log](https://download.openmmlab.com/mmpose/animal/resnet/res152_animalpose_256x256_20210426.log.json) |

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/resnet_animalpose.yml b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/resnet_animalpose.yml

new file mode 100644

index 0000000000000000000000000000000000000000..345c13c138aafccb5ce1be0ea4136634327248c8

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/resnet_animalpose.yml

@@ -0,0 +1,51 @@

+Models:

+- Config: configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_res50_8xb64-210e_animalpose-256x256.py

+ In Collection: SimpleBaseline2D

+ Metadata:

+ Architecture: &id001

+ - SimpleBaseline2D

+ - ResNet

+ Training Data: Animal-Pose

+ Name: td-hm_res50_8xb64-210e_animalpose-256x256

+ Results:

+ - Dataset: Animal-Pose

+ Metrics:

+ AP: 0.691

+ AP@0.5: 0.947

+ AP@0.75: 0.770

+ AR: 0.736

+ AR@0.5: 0.955

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/animal/resnet/res50_animalpose_256x256-e1f30bff_20210426.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_res101_8xb64-210e_animalpose-256x256.py

+ In Collection: SimpleBaseline2D

+ Metadata:

+ Architecture: *id001

+ Training Data: Animal-Pose

+ Name: td-hm_res101_8xb64-210e_animalpose-256x256

+ Results:

+ - Dataset: Animal-Pose

+ Metrics:

+ AP: 0.696

+ AP@0.5: 0.948

+ AP@0.75: 0.774

+ AR: 0.736

+ AR@0.5: 0.951

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/animal/resnet/res101_animalpose_256x256-85563f4a_20210426.pth

+- Config: configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_res152_8xb32-210e_animalpose-256x256.py

+ In Collection: SimpleBaseline2D

+ Metadata:

+ Architecture: *id001

+ Training Data: Animal-Pose

+ Name: td-hm_res152_8xb32-210e_animalpose-256x256

+ Results:

+ - Dataset: Animal-Pose

+ Metrics:

+ AP: 0.704

+ AP@0.5: 0.938

+ AP@0.75: 0.786

+ AR: 0.748

+ AR@0.5: 0.946

+ Task: Animal 2D Keypoint

+ Weights: https://download.openmmlab.com/mmpose/animal/resnet/res152_animalpose_256x256-a0a7506c_20210426.pth

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_ViTPose-base_8xb64-210e_animalpose-256x192.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_ViTPose-base_8xb64-210e_animalpose-256x192.py

new file mode 100644

index 0000000000000000000000000000000000000000..b73fa4083330eb2c775c8c3b31241bd635bd40b7

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_ViTPose-base_8xb64-210e_animalpose-256x192.py

@@ -0,0 +1,151 @@

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=210, val_interval=10)

+

+# optimizer

+custom_imports = dict(

+ imports=['mmpose.engine.optim_wrappers.layer_decay_optim_wrapper'],

+ allow_failed_imports=False)

+

+optim_wrapper = dict(

+ optimizer=dict(

+ type='AdamW', lr=5e-4, betas=(0.9, 0.999), weight_decay=0.1),

+ paramwise_cfg=dict(

+ num_layers=12,

+ layer_decay_rate=0.75,

+ custom_keys={

+ 'bias': dict(decay_mult=0.0),

+ 'pos_embed': dict(decay_mult=0.0),

+ 'relative_position_bias_table': dict(decay_mult=0.0),

+ 'norm': dict(decay_mult=0.0),

+ },

+ ),

+ constructor='LayerDecayOptimWrapperConstructor',

+ clip_grad=dict(max_norm=1., norm_type=2),

+)

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+# codec settings

+codec = dict(

+ type='UDPHeatmap', input_size=(192, 256), heatmap_size=(48, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='mmpretrain.VisionTransformer',

+ arch='base',

+ img_size=(256, 192),

+ patch_size=16,

+ qkv_bias=True,

+ drop_path_rate=0.3,

+ with_cls_token=False,

+ out_type='featmap',

+ patch_cfg=dict(padding=2),

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint='https://download.openmmlab.com/mmpose/'

+ 'v1/pretrained_models/mae_pretrain_vit_base_20230913.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=768,

+ out_channels=20,

+ deconv_out_channels=(256, 256),

+ deconv_kernel_sizes=(4, 4),

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=False,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalPoseDataset'

+data_mode = 'topdown'

+data_root = "/datagrid/personal/purkrmir/data/AnimalPose/"

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size'], use_udp=True),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size'], use_udp=True),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=64,

+ num_workers=4,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='animalpose_train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=32,

+ num_workers=4,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='animalpose_val.json',

+ # bbox_file='data/coco/person_detection_results/'

+ # 'COCO_val2017_detections_AP_H_56_person.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'animalpose_val.json')

+test_evaluator = val_evaluator
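Both ViTPose configs combine several learning-rate mechanisms: layer-wise decay (`num_layers=12`, `layer_decay_rate=0.75`), a 500-iteration `LinearLR` warm-up starting at `start_factor=0.001`, a `MultiStepLR` drop of `gamma=0.1` at epochs 170 and 200, and `auto_scale_lr` with `base_batch_size=512`. The sketch below shows how these might combine into the learning rate a single parameter sees. It is illustrative only: every function name is hypothetical (not MMPose or MMEngine API), and it assumes a BEiT/MAE-style layer-id mapping, schedulers that compose multiplicatively, and the linear scaling rule for batch size.

```python
"""Hypothetical sketch: effective LR for one parameter in a ViTPose-style config.

Assumptions (not taken from MMPose/MMEngine source):
  * embeddings -> layer 0, transformer block i -> layer i + 1, head -> last layer
  * LinearLR warm-up and MultiStepLR decay multiply together
  * auto_scale_lr follows the linear rule: lr * actual_batch / base_batch
"""


def layer_id_for(name: str, num_blocks: int = 12) -> int:
    """Map a parameter name to a layer index in [0, num_blocks + 1]."""
    if name.startswith(("backbone.pos_embed", "backbone.patch_embed",
                        "backbone.cls_token")):
        return 0
    if name.startswith("backbone.layers."):
        return int(name.split(".")[2]) + 1
    return num_blocks + 1  # e.g. the HeatmapHead


def layer_decay_scale(name: str, num_blocks: int = 12,
                      decay_rate: float = 0.75) -> float:
    """Later layers keep more of the base LR; the head keeps all of it."""
    num_layers = num_blocks + 2
    return decay_rate ** (num_layers - 1 - layer_id_for(name, num_blocks))


def schedule_factor(epoch: int, iteration: int, warmup_iters: int = 500,
                    start_factor: float = 1e-3,
                    milestones: tuple = (170, 200),
                    gamma: float = 0.1) -> float:
    """Product of a linear warm-up factor and a step-decay factor."""
    progress = min(iteration, warmup_iters) / warmup_iters
    warmup = start_factor + (1.0 - start_factor) * progress
    steps = sum(epoch >= m for m in milestones)  # milestones already passed
    return warmup * gamma ** steps


def effective_lr(name: str, epoch: int, iteration: int,
                 base_lr: float = 5e-4, batch_size: int = 8 * 64,
                 base_batch_size: int = 512) -> float:
    """Combine batch-size scaling, layer decay and the LR schedule."""
    scaled_lr = base_lr * batch_size / base_batch_size
    return (scaled_lr * layer_decay_scale(name)
            * schedule_factor(epoch, iteration))


if __name__ == "__main__":
    for param in ("backbone.patch_embed.projection.weight",
                  "backbone.layers.11.attn.qkv.weight",
                  "head.final_layer.weight"):
        lr = effective_lr(param, epoch=50, iteration=10_000)
        print(f"{param:40s} lr={lr:.3e}")
```

With the `8xb64` setup implied by the file names (8 × 64 = 512 images per step), the batch-size ratio is 1, so only layer decay and the schedule change the rate; training on fewer GPUs with auto-scaling enabled would shrink it proportionally.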

diff --git a/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_ViTPose-small_8xb64-210e_animalpose-256x192.py b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_ViTPose-small_8xb64-210e_animalpose-256x192.py

new file mode 100644

index 0000000000000000000000000000000000000000..f626920050a9f9c8587c788b5831a8ec96083e15

--- /dev/null

+++ b/mmpose/configs/animal_2d_keypoint/topdown_heatmap/animalpose/td-hm_ViTPose-small_8xb64-210e_animalpose-256x192.py

@@ -0,0 +1,162 @@

+TRAIN_ROOT = "/datagrid/personal/purkrmir/data/AnimalPose/"

+

+BATCH_SIZE = 64

+

+load_from = 'models/pretrained/vitpose-s.pth'

+# load_from = 'models/pretrained/vitpose-s+_compatible.pth'

+

+_base_ = ['../../../_base_/default_runtime.py']

+

+# runtime

+train_cfg = dict(max_epochs=210, val_interval=10)

+

+# optimizer

+custom_imports = dict(

+ imports=['mmpose.engine.optim_wrappers.layer_decay_optim_wrapper'],

+ allow_failed_imports=False)

+

+optim_wrapper = dict(

+ optimizer=dict(

+ type='AdamW', lr=5e-4, betas=(0.9, 0.999), weight_decay=0.1),

+ paramwise_cfg=dict(

+ num_layers=12,

+ layer_decay_rate=0.75,

+ custom_keys={

+ 'bias': dict(decay_mult=0.0),

+ 'pos_embed': dict(decay_mult=0.0),

+ 'relative_position_bias_table': dict(decay_mult=0.0),

+ 'norm': dict(decay_mult=0.0),

+ },

+ ),

+ constructor='LayerDecayOptimWrapperConstructor',

+ clip_grad=dict(max_norm=1., norm_type=2),

+)

+

+# learning policy

+param_scheduler = [

+ dict(

+ type='LinearLR', begin=0, end=500, start_factor=0.001,

+ by_epoch=False), # warm-up

+ dict(

+ type='MultiStepLR',

+ begin=0,

+ end=210,

+ milestones=[170, 200],

+ gamma=0.1,

+ by_epoch=True)

+]

+

+# automatically scaling LR based on the actual training batch size

+auto_scale_lr = dict(base_batch_size=512)

+

+# hooks

+default_hooks = dict(

+ checkpoint=dict(save_best='coco/AP', rule='greater', max_keep_ckpts=1))

+

+# codec settings

+codec = dict(

+ type='UDPHeatmap', input_size=(192, 256), heatmap_size=(48, 64), sigma=2)

+

+# model settings

+model = dict(

+ type='TopdownPoseEstimator',

+ data_preprocessor=dict(

+ type='PoseDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True),

+ backbone=dict(

+ type='mmpretrain.VisionTransformer',

+ arch={

+ 'embed_dims': 384,

+ 'num_layers': 12,

+ 'num_heads': 12,

+ 'feedforward_channels': 384 * 4

+ },

+ img_size=(256, 192),

+ patch_size=16,

+ qkv_bias=True,

+ drop_path_rate=0.1,

+ with_cls_token=False,

+ out_type='featmap',

+ patch_cfg=dict(padding=2),

+ init_cfg=None

+ # init_cfg=dict(

+ # type='Pretrained',

+ # checkpoint='models/pretrained/vitpose-s+.pth'),

+ ),

+ head=dict(

+ type='HeatmapHead',

+ in_channels=384,

+ out_channels=20,

+ deconv_out_channels=(256, 256),

+ deconv_kernel_sizes=(4, 4),

+ loss=dict(type='KeypointMSELoss', use_target_weight=True),

+ decoder=codec),

+ test_cfg=dict(

+ flip_test=True,

+ flip_mode='heatmap',

+ shift_heatmap=False,

+ ))

+

+# base dataset settings

+dataset_type = 'AnimalPoseDataset'

+data_mode = 'topdown'

+data_root = TRAIN_ROOT

+

+# pipelines

+train_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='RandomFlip', direction='horizontal'),

+ dict(type='RandomHalfBody'),

+ dict(type='RandomBBoxTransform'),

+ dict(type='TopdownAffine', input_size=codec['input_size'], use_udp=True),

+ dict(type='GenerateTarget', encoder=codec),

+ dict(type='PackPoseInputs')

+]

+val_pipeline = [

+ dict(type='LoadImage'),

+ dict(type='GetBBoxCenterScale'),

+ dict(type='TopdownAffine', input_size=codec['input_size'], use_udp=True),

+ dict(type='PackPoseInputs')

+]

+

+# data loaders

+train_dataloader = dict(

+ batch_size=BATCH_SIZE,

+ num_workers=4,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/animalpose_train.json',

+ data_prefix=dict(img='images/'),

+ pipeline=train_pipeline,

+ ))

+val_dataloader = dict(

+ batch_size=BATCH_SIZE,

+ num_workers=4,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False, round_up=False),

+ dataset=dict(

+ type=dataset_type,

+ data_root=data_root,

+ data_mode=data_mode,

+ ann_file='annotations/animalpose_val.json',

+ # bbox_file='data/coco/person_detection_results/'

+ # 'COCO_val2017_detections_AP_H_56_person.json',

+ data_prefix=dict(img='images/'),

+ test_mode=True,

+ pipeline=val_pipeline,

+ ))

+test_dataloader = val_dataloader

+

+# evaluators

+val_evaluator = dict(

+ type='CocoMetric',

+ ann_file=data_root + 'annotations/animalpose_val.json')