OpenCensor-Hebrew

This is a fine-tuned AlephBERT model that detects bad words (profanity) in Hebrew text.

You give the model a Hebrew sentence. It returns:

- a score between 0 and 1

- a yes/no flag (based on a cutoff you choose)

Meaning of the score:

- 0 = clean, 1 = has profanity

- Recommended cutoff from tests: 0.29 (you can change it)
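A minimal sketch of how the score and the yes/no flag relate. It assumes, as the usage code does, that the model outputs a single logit that a sigmoid maps into the 0 to 1 range. `score_from_logit` and `flag_from_score` are hypothetical helper names, not part of the model's API.

```python
import math


def score_from_logit(logit: float) -> float:
    """Map a raw model logit to a score in [0, 1] with a numerically stable sigmoid."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)  # avoids overflow for very negative logits
    return z / (1.0 + z)


def flag_from_score(score: float, cutoff: float = 0.29) -> int:
    """Return 1 (has profanity) if the score reaches the cutoff, else 0 (clean)."""
    return int(score >= cutoff)


# A logit of 0 maps to a score of 0.5, which is above the 0.29 cutoff.
print(score_from_logit(0.0), flag_from_score(score_from_logit(0.0)))  # 0.5 1
```

Raising the cutoff flags fewer texts (fewer false positives, more missed profanity); lowering it does the opposite.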

How to use

```python
import torch
from transformers import AutoTokenizer, AutoModelForSequenceClassification

KModel = "LikoKIko/OpenCensor-Hebrew"
KCutoff = 0.29  # best threshold found on the validation set
KMaxLen = 256  # number of tokens (not characters)

tokenizer = AutoTokenizer.from_pretrained(KModel)
model = AutoModelForSequenceClassification.from_pretrained(KModel, num_labels=1).eval()

text = "some hebrew text here"
inputs = tokenizer(text, return_tensors="pt", truncation=True, padding=True, max_length=KMaxLen)

with torch.inference_mode():
    # The model outputs one logit; sigmoid turns it into a profanity score in [0, 1]
    score = torch.sigmoid(model(**inputs).logits).item()

KHasProfanity = int(score >= KCutoff)
print({"score": round(score, 4), "KHasProfanity": KHasProfanity})
```

Note: Text longer than KMaxLen tokens is truncated, so only the first KMaxLen tokens (including special tokens) are scored.
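If you need to score texts longer than KMaxLen tokens, one common workaround (not something this model card prescribes) is to split the token ids into overlapping windows, score each window, and keep the highest score. The sketch below assumes a hypothetical `score_fn` that takes a list of token ids and returns a score in [0, 1]. The window size of 254 is an assumption that leaves room for the two special tokens a BERT-style tokenizer adds within KMaxLen = 256; the stride of 128 is arbitrary.

```python
from typing import Callable, List, Sequence


def windows(token_ids: Sequence[int], size: int = 254, stride: int = 128) -> List[List[int]]:
    """Split token ids into overlapping windows of at most `size` tokens.

    Consecutive windows start `stride` tokens apart; the last window always
    reaches the end of the sequence so no trailing tokens are dropped.
    """
    if size <= 0 or not 0 < stride <= size:
        raise ValueError("need size > 0 and 0 < stride <= size")
    if len(token_ids) <= size:
        return [list(token_ids)]
    starts = list(range(0, len(token_ids) - size + 1, stride))
    if starts[-1] + size < len(token_ids):
        starts.append(len(token_ids) - size)
    return [list(token_ids[s:s + size]) for s in starts]


def max_window_score(
    token_ids: Sequence[int],
    score_fn: Callable[[List[int]], float],  # hypothetical: scores one window
    size: int = 254,
    stride: int = 128,
) -> float:
    """Score every window and keep the highest score."""
    return max(score_fn(w) for w in windows(token_ids, size, stride))
```

Taking the maximum means a single offensive passage flags the whole text. With the real tokenizer you could get the ids from `tokenizer(text, add_special_tokens=False)["input_ids"]`; how `score_fn` re-adds the special tokens and calls the model is left open here.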

About this model

- Base: onlplab/alephbert-base

- Task: binary classification (clean / profanity)

- Language: Hebrew

- Max length: 256 tokens

- Training (this run):

- Batch size: 32

- Epochs: 10

- Learning rate: 0.000015

- Loss: binary cross-entropy with logits (`BCEWithLogitsLoss`). We use `pos_weight` to up-weight the positive (profanity) class so the model pays more attention to the rare class. This helps when the dataset is imbalanced.

- Scheduler: linear warmup (10%)
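To make the two training choices above concrete, here is a plain-Python sketch of the weighted loss that `BCEWithLogitsLoss` computes with `pos_weight`, plus a linear warmup learning-rate multiplier. Two assumptions: the card does not say how `pos_weight` was chosen, so `pos_weight_from_labels` uses the common negatives-to-positives ratio; and the schedule follows the shape of `transformers.get_linear_schedule_with_warmup` (warmup, then linear decay to zero), which the card does not confirm. In real training you would use `torch.nn.BCEWithLogitsLoss(pos_weight=...)` directly; all helper names here are hypothetical.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def weighted_bce_with_logits(logits, targets, pos_weight: float) -> float:
    """Mean of -[pos_weight * y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))]."""
    total = 0.0
    for x, y in zip(logits, targets):
        # log(1 - sigmoid(x)) equals log(sigmoid(-x))
        total += -(pos_weight * y * log_sigmoid(x) + (1 - y) * log_sigmoid(-x))
    return total / len(logits)


def pos_weight_from_labels(labels) -> float:
    """Heuristic (assumed): number of negatives divided by number of positives."""
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("no positive examples")
    return (len(labels) - n_pos) / n_pos


def linear_warmup_factor(step: int, total_steps: int, warmup_ratio: float = 0.1) -> float:
    """LR multiplier: rises 0 -> 1 over the warmup steps, then decays linearly to 0."""
    warmup = int(total_steps * warmup_ratio)
    if step < warmup:
        return step / max(1, warmup)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup))
```

With three clean examples per profane one, `pos_weight` is 3, so each profane example counts three times as much in the loss as it would unweighted.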

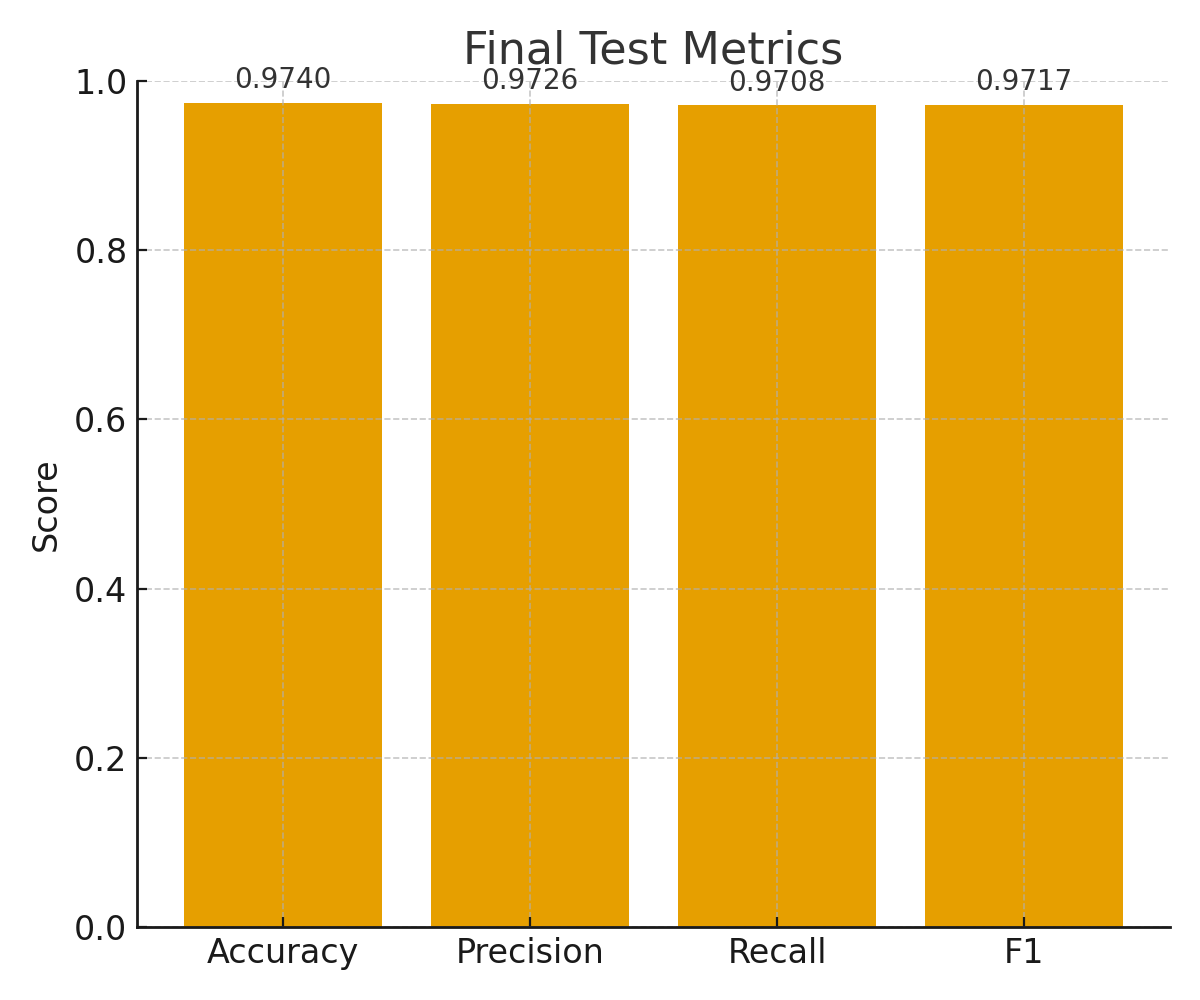

Results (this run)

- Test Accuracy: 0.9740

- Test Precision: 0.9726

- Test Recall: 0.9708

- Test F1: 0.9717

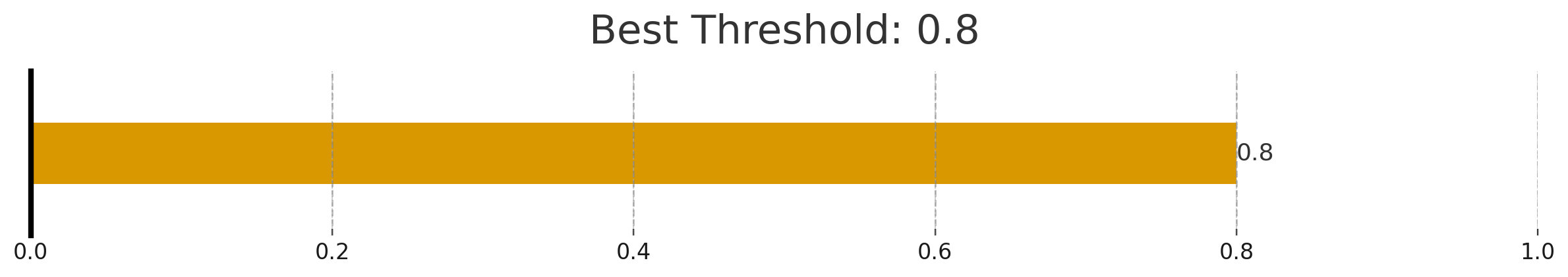

- Best threshold: 0.29

- Update: the threshold has since been set to 0.8
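The reported metrics follow the standard definitions for the positive (profanity) class. A small sketch: the counts in the first example are made up for illustration and are not from this run; the second line checks that the reported F1 agrees with the reported precision and recall. The function names are hypothetical.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """F1 is the harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy, precision, recall and F1 for the positive (profanity) class."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1_from_pr(precision, recall),
    }


# Hypothetical counts, for illustration only.
print(binary_metrics(tp=8, fp=2, fn=2, tn=88))

# The reported test F1 is consistent with the reported precision and recall.
print(round(f1_from_pr(0.9726, 0.9708), 4))  # 0.9717
```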

Reproduce (training code)

This model was trained with a script that:

- Loads `onlplab/alephbert-base` with `num_labels=1`

- Tokenizes with `max_length=256` and pads to the max length

- Trains with AdamW, linear warmup, and mixed precision

- Tries cutoffs from 0.05 to 0.95 on the validation set and picks the one with the best F1

- Saves the best checkpoint by validation F1, then reports test metrics
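The cutoff search described above can be sketched as follows. The card gives the range (0.05 to 0.95) but not the step size, so the 0.01 step is an assumption, as is breaking ties in favor of the lowest cutoff. `probs` are validation scores and `labels` are the 0/1 gold labels; the function names are hypothetical.

```python
def f1_at(probs, labels, threshold: float) -> float:
    """F1 of the rule `prob >= threshold`, written as 2TP / (2TP + FP + FN)."""
    tp = fp = fn = 0
    for p, y in zip(probs, labels):
        predicted = p >= threshold
        if predicted and y == 1:
            tp += 1
        elif predicted and y == 0:
            fp += 1
        elif not predicted and y == 1:
            fn += 1
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def threshold_grid(start: float = 0.05, stop: float = 0.95, step: float = 0.01) -> list:
    """Evenly spaced cutoffs from start to stop inclusive (the step size is an assumption)."""
    n = int(round((stop - start) / step))  # integer count avoids float drift
    return [round(start + i * step, 4) for i in range(n + 1)]


def best_threshold(probs, labels, **grid_kwargs) -> float:
    """Cutoff with the highest F1; max() keeps the first (lowest) cutoff on ties."""
    return max(threshold_grid(**grid_kwargs), key=lambda t: f1_at(probs, labels, t))


print(best_threshold([0.1, 0.2, 0.35, 0.6, 0.9], [0, 0, 1, 1, 1]))  # 0.21
```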

License

CC-BY-SA-4.0

How to cite

```bibtex
@misc{opencensor-hebrew,
  title = {OpenCensor-Hebrew: Hebrew Profanity Detection Model},
  author = {LikoKIko},
  year = {2025},
  url = {https://huggingface.co/LikoKIko/OpenCensor-Hebrew}
}
```
