The full dataset viewer is not available (click to read why). Only showing a preview of the rows.

Error code: DatasetGenerationError

Exception: ParserError

Message: Error tokenizing data. C error: EOF inside string starting at row 14963

Traceback: Traceback (most recent call last):

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1995, in _prepare_split_single

for _, table in generator:

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/packaged_modules/csv/csv.py", line 195, in _generate_tables

for batch_idx, df in enumerate(csv_file_reader):

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/readers.py", line 1843, in __next__

return self.get_chunk()

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/readers.py", line 1985, in get_chunk

return self.read(nrows=size)

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/readers.py", line 1923, in read

) = self._engine.read( # type: ignore[attr-defined]

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/c_parser_wrapper.py", line 234, in read

chunks = self._reader.read_low_memory(nrows)

File "parsers.pyx", line 850, in pandas._libs.parsers.TextReader.read_low_memory

File "parsers.pyx", line 905, in pandas._libs.parsers.TextReader._read_rows

File "parsers.pyx", line 874, in pandas._libs.parsers.TextReader._tokenize_rows

File "parsers.pyx", line 891, in pandas._libs.parsers.TextReader._check_tokenize_status

File "parsers.pyx", line 2061, in pandas._libs.parsers.raise_parser_error

pandas.errors.ParserError: Error tokenizing data. C error: EOF inside string starting at row 14963

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 1529, in compute_config_parquet_and_info_response

parquet_operations = convert_to_parquet(builder)

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 1154, in convert_to_parquet

builder.download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1027, in download_and_prepare

self._download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1122, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1882, in _prepare_split

for job_id, done, content in self._prepare_split_single(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 2038, in _prepare_split_single

raise DatasetGenerationError("An error occurred while generating the dataset") from e

datasets.exceptions.DatasetGenerationError: An error occurred while generating the datasetNeed help to make the dataset viewer work? Make sure to review how to configure the dataset viewer, and open a discussion for direct support.

TOKEN

string | NE-COARSE-LIT

string | MISC

string |

|---|---|---|

-DOCSTART-

|

O

|

_

|

# onb:id = ONB_hum_18400625

| null | null |

# onb:image_link = https://anno.onb.ac.at/cgi-content/anno?aid=hum&datum=18400625&seite=2

| null | null |

# onb:page_nr = 2

| null | null |

# onb:publication_year_str = 18400625

| null | null |

506

|

O

|

_

|

fülle

|

O

|

_

|

aus

|

O

|

_

|

seinem

|

O

|

_

|

Herzen

|

O

|

_

|

strömt

|

O

|

_

|

;

|

O

|

_

|

er

|

O

|

_

|

setzt

|

O

|

_

|

jedoch

|

O

|

_

|

sehr

|

O

|

_

|

oft

|

O

|

_

|

aus

|

O

|

_

|

,

|

O

|

_

|

weil

|

O

|

_

|

die

|

O

|

_

|

immer

|

O

|

_

|

wiederkehrende

|

O

|

_

|

Thräne

|

O

|

_

|

sein

|

O

|

_

|

Auge

|

O

|

_

|

umflort

|

O

|

_

|

;

|

O

|

_

|

die

|

O

|

_

|

Lippen

|

O

|

_

|

sind

|

O

|

_

|

fast

|

O

|

_

|

zusammengepreßt

|

O

|

_

|

,

|

O

|

_

|

als

|

O

|

_

|

wollten

|

O

|

_

|

sie

|

O

|

_

|

den

|

O

|

_

|

ausbre

|

O

|

_

|

chenden

|

O

|

_

|

Schmerz

|

O

|

_

|

mit

|

O

|

_

|

Gewalt

|

O

|

_

|

nach

|

O

|

_

|

Innen

|

O

|

_

|

zurückdrängen

|

O

|

_

|

,

|

O

|

_

|

un

|

O

|

_

|

nur

|

O

|

_

|

dann

|

O

|

_

|

öffnen

|

O

|

_

|

sie

|

O

|

_

|

sich

|

O

|

_

|

,

|

O

|

_

|

wenn

|

O

|

_

|

die

|

O

|

_

|

Brust

|

O

|

_

|

durch

|

O

|

_

|

Anhäufung

|

O

|

_

|

unterdrückter

|

O

|

_

|

Seufzer

|

O

|

_

|

zu

|

O

|

_

|

zerspringen

|

O

|

_

|

droht

|

O

|

_

|

;

|

O

|

_

|

diese

|

O

|

_

|

steigen

|

O

|

_

|

danr

|

O

|

_

|

herauf

|

O

|

_

|

aus

|

O

|

_

|

einer

|

O

|

_

|

endlosen

|

O

|

_

|

Tiefe

|

O

|

_

|

,

|

O

|

_

|

wie

|

O

|

_

|

bei

|

O

|

_

|

einem

|

O

|

_

|

ausgebrannten

|

O

|

_

|

Vulkane

|

O

|

_

|

der

|

O

|

_

|

feurige

|

O

|

_

|

Luftstrom

|

O

|

_

|

dem

|

O

|

_

|

innersten

|

O

|

_

|

Bergseingeweide

|

O

|

_

|

entfährt

|

O

|

_

|

.

|

O

|

EndOfSentence

|

Jetzt

|

O

|

_

|

scheint

|

O

|

_

|

er

|

O

|

_

|

eine

|

O

|

_

|

großartige

|

O

|

_

|

Idee

|

O

|

_

|

niedergeschrieben

|

O

|

_

|

zu

|

O

|

_

|

haben

|

O

|

_

|

,

|

O

|

_

|

würdig

|

O

|

_

|

dem

|

O

|

_

|

Gehirne

|

O

|

_

|

HisGermaNER: NER Datasets for Historical German

In this repository we release another NER dataset from historical German newspapers.

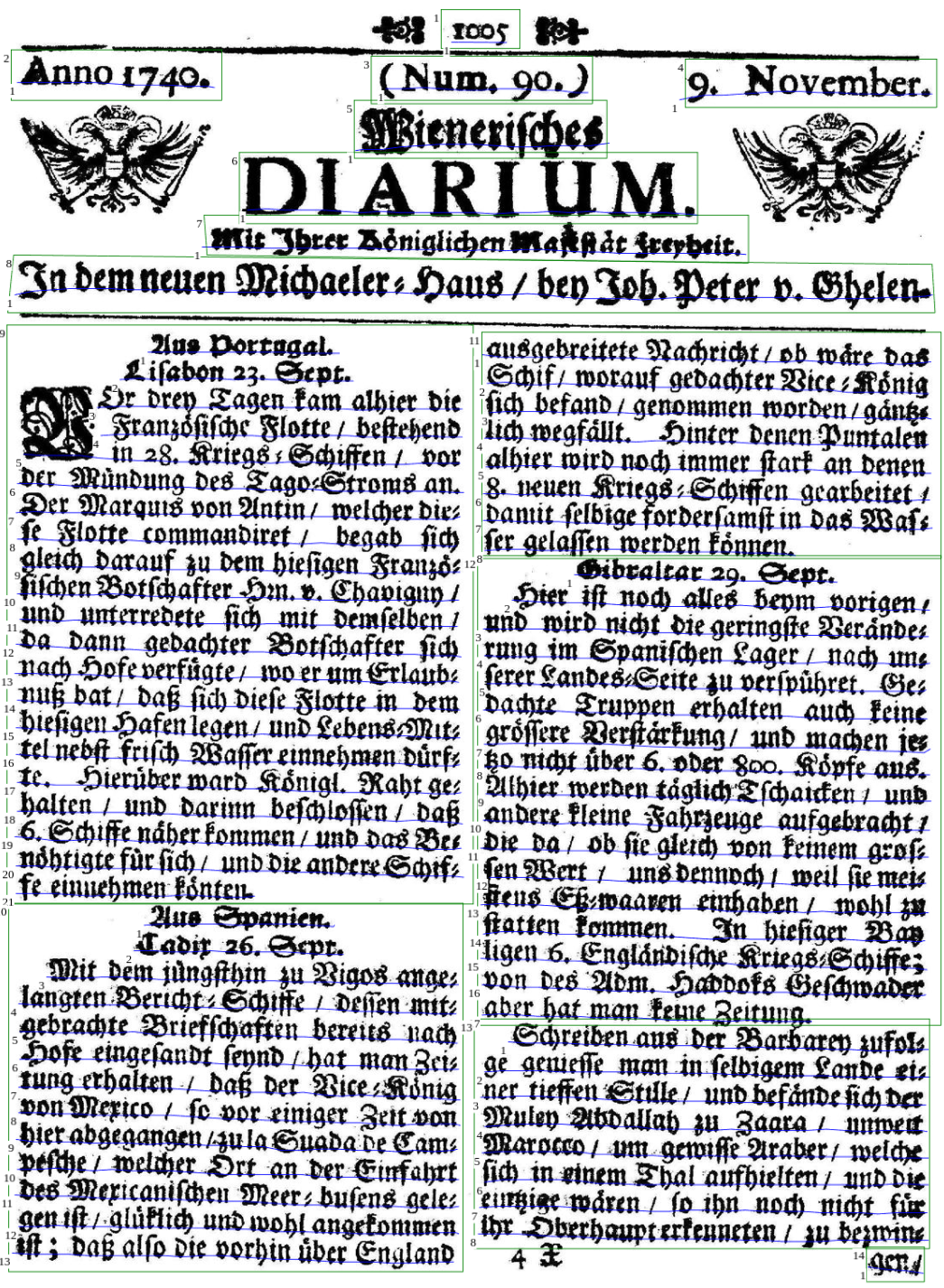

Newspaper corpus

In the first release of our dataset, we select 11 newspapers from 1710 to 1840 from the Austrian National Library (ONB), resulting in 100 pages:

| Year | ONB ID | Newspaper | URL | Pages |

|---|---|---|---|---|

| 1720 | ONB_wrz_17200511 |

Wiener Zeitung | Viewer | 10 |

| 1730 | ONB_wrz_17300603 |

Wiener Zeitung | Viewer | 14 |

| 1740 | ONB_wrz_17401109 |

Wiener Zeitung | Viewer | 12 |

| 1770 | ONB_rpr_17700517 |

Reichspostreuter | Viewer | 4 |

| 1780 | ONB_wrz_17800701 |

Wiener Zeitung | Viewer | 24 |

| 1790 | ONB_pre_17901030 |

Preßburger Zeitung | Viewer | 12 |

| 1800 | ONB_ibs_18000322 |

Intelligenzblatt von Salzburg | Viewer | 8 |

| 1810 | ONB_mgs_18100508 |

Morgenblatt für gebildete Stände | Viewer | 4 |

| 1820 | ONB_wan_18200824 |

Der Wanderer | Viewer | 4 |

| 1830 | ONB_ild_18300713 |

Das Inland | Viewer | 4 |

| 1840 | ONB_hum_18400625 |

Der Humorist | Viewer | 4 |

Data Workflow

In the first step, we obtain original scans from ONB for our selected newspapers. In the second step, we perform OCR using Transkribus.

We use the Transkribus print M1 model for performing OCR. Note: we experimented with an existing NewsEye model, but the print M1 model is newer and led to better performance in our preliminary experiments.

Only layout hints/fixes were made in Transkribus. So no OCR corrections or normalizations were performed.

We export plain text of all newspaper pages into plain text format and perform normalization of hyphenation and the = character.

After normalization we tokenize the plain text newspaper pages using the PreTokenizer of the hmBERT model.

After pre-tokenization we import the corpus into Argilla to start the annotation of named entities.

Note: We perform annotation at page/document-level. Thus, no sentence segmentation is needed and performed.

In the annotation process we also manually annotate sentence boundaries using a special EOS tag.

The dataset is exported into an CoNLL-like format after the annotation process.

The EOS tag is removed and the information of an potential end of sentence is stored in a special column.

Annotation Guidelines

We use the same NE's (PER, LOC and ORG) and annotation guideline as used in the awesome Europeana NER Corpora.

Furthermore, we introduced some specific rules for annotations:

PER: We include e.g.Kaiser,Lord,CardinalorGrafin the NE, but notHerr,Fräulein,Generalor rank/grades.LOC: We excludedKönigreichfrom the NE.

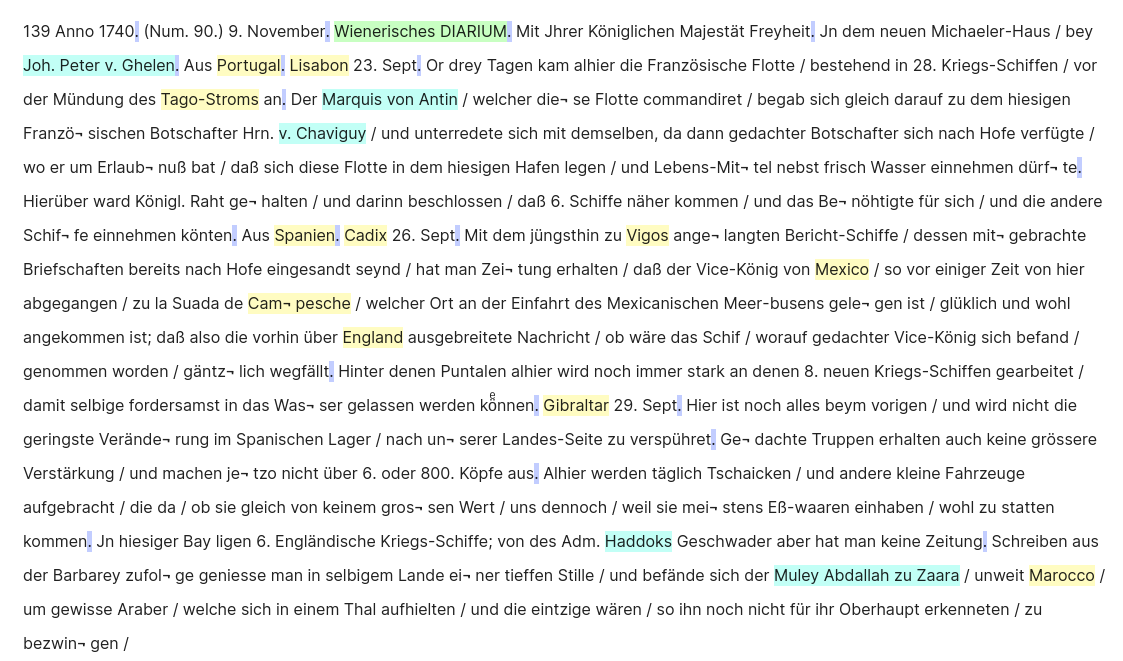

Dataset Format

Our dataset format is inspired by the HIPE-2022 Shared Task. Here's an example of an annotated document:

TOKEN NE-COARSE-LIT MISC

-DOCSTART- O _

# onb:id = ONB_wrz_17800701

# onb:image_link = https://anno.onb.ac.at/cgi-content/anno?aid=wrz&datum=17800701&seite=12

# onb:page_nr = 12

# onb:publication_year_str = 17800701

den O _

Pöbel O _

noch O _

mehr O _

in O _

Harnisch O _

. O EndOfSentence

Sie O _

legten O _

sogleich O _

Note: we include a -DOCSTART- marker to e.g. allow document-level features for NER as proposed in the FLERT paper.

Dataset Splits & Stats

For training powerful NER models on the dataset, we manually document-splitted the dataset into training, development and test splits.

The training split consists of 73 documents, development split of 13 documents and test split of 14 documents.

We perform dehyphenation as one and only preprocessing step. The final dataset splits can be found in the splits folder of this dataset repository.

Some dataset statistics - instances per class:

| Class | Training | Development | Test |

|---|---|---|---|

PER |

942 | 308 | 238 |

LOC |

749 | 217 | 216 |

ORG |

16 | 3 | 11 |

Number of sentences (incl. document marker) per split:

| Training | Development | Test | |

|---|---|---|---|

| Sentences | 1.539 | 406 | 400 |

Release Cycles

We plan to release new updated versions of this dataset on a regular basis (e.g. monthly).

For now, we want to collect some feedback about the dataset first, so we use v0 as current version.

Questions & Feedback

Please open a new discussion here for questions or feedback!

License

Dataset is (currently) licenced under CC BY 4.0.

- Downloads last month

- 78