EarthSynth: Generating Informative Earth Observation with Diffusion Models

Jiancheng Pan*, Shiye Lei*, Yuqian Fu✉, Jiahao Li, Yanxing Liu

Xiao He, Yuze Sun, Long Peng, Xiaomeng Huang✉ , Bo Zhao✉

* Equal Contribution, ✉ Corresponding Author


Examples

A satellite image of road.

A satellite image of small vehicle.

A satellite image of tree. (Flood)

A satellite image of water.

A satellite image of baseball diamond, vehicle.

TODO

- Release EarthSynth Training Code

- Release EarthSynth Models to 🤗 HuggingFace

- Release EarthSynth-180K Dataset to 🤗 HuggingFace

News

- [2025/10/30] EarthSynth models are uploaded to 🤗 HuggingFace.

- [2025/8/7] The EarthSynth-180K dataset is uploaded to 🤗 HuggingFace.

- [2025/5/20] Our paper "EarthSynth: Generating Informative Earth Observation with Diffusion Models" is available on arXiv.

Abstract

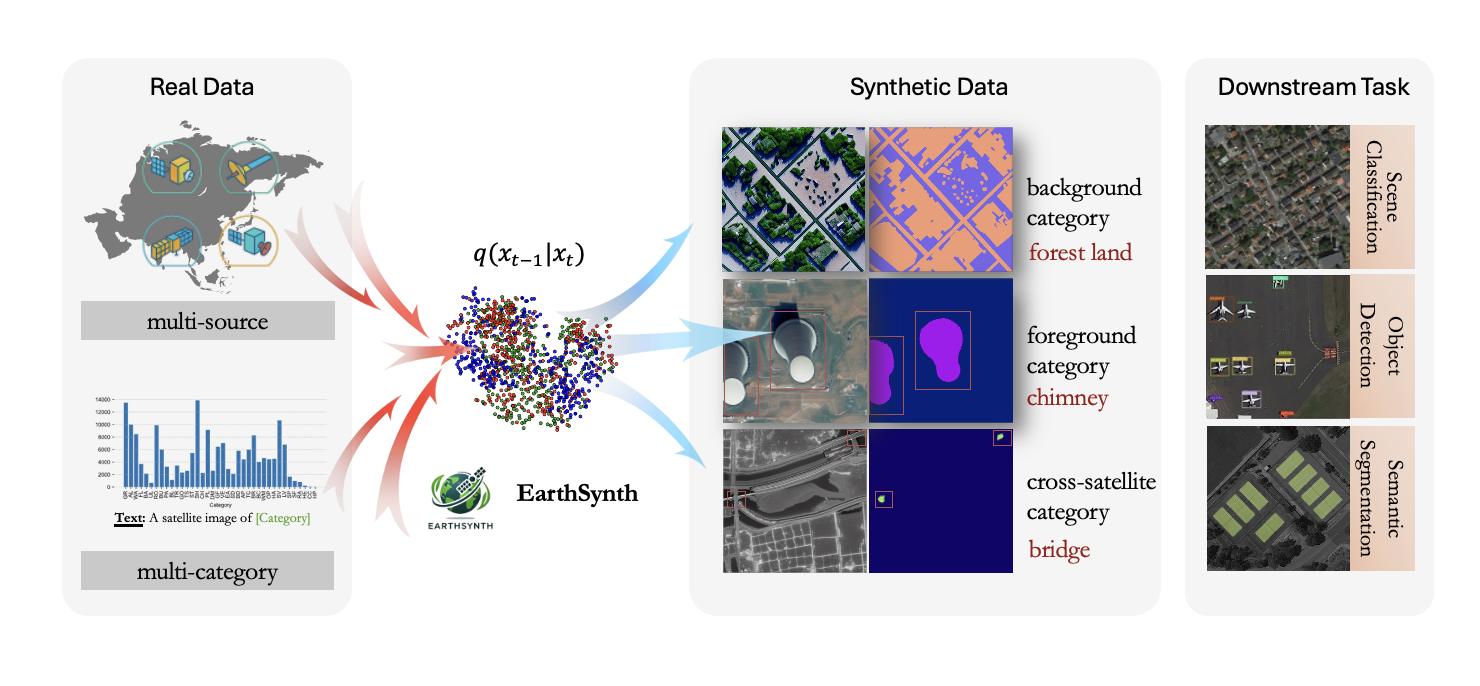

Remote sensing image (RSI) interpretation typically suffers from a scarcity of labeled data, which limits the performance of RSI interpretation tasks. To tackle this challenge, we propose EarthSynth, a diffusion-based generative foundation model that synthesizes multi-category, cross-satellite labeled Earth observation data for downstream RSI interpretation tasks. To the best of our knowledge, EarthSynth is the first to explore multi-task generation for remote sensing, addressing the limited generalization of task-oriented synthesis for RSI interpretation. Trained on the EarthSynth-180K dataset, EarthSynth employs the Counterfactual Composition (CF-Comp) training strategy with a three-dimensional batch-sample selection mechanism to improve training data diversity and enhance category control. Furthermore, we propose R-Filter, a rule-based method that selects more informative synthetic data for downstream tasks. We evaluate EarthSynth on scene classification, object detection, and semantic segmentation in open-world scenarios, where it yields significant improvements on open-vocabulary understanding tasks, offering a practical solution for advancing RSI interpretation.

Dataset

EarthSynth-180K is derived from the OEM, LoveDA, DeepGlobe, SAMRS, and LAE-1M datasets and is further enhanced with mask and text prompt conditions, making it suitable for training a diffusion-based generative foundation model. The dataset is constructed using the Random Cropping and Category Augmentation strategies.
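The Random Cropping step is not described in detail here. As a minimal sketch, assuming each image and its mask are NumPy arrays with the same height and width, a paired random crop cuts the same window from both so the labels stay pixel-aligned. The function name and the `crop_size` parameter are hypothetical and not part of the EarthSynth code.

```python
import numpy as np


def paired_random_crop(image, mask, crop_size, rng=None):
    """Cut the same random square window from an image and its mask.

    Hypothetical helper: `image` is H x W x C, `mask` is H x W, and
    `crop_size` is the side length of the square crop.
    """
    if image.shape[:2] != mask.shape[:2]:
        raise ValueError("image and mask must share height and width")
    h, w = mask.shape[:2]
    if crop_size > h or crop_size > w:
        raise ValueError("crop_size is larger than the input")
    if rng is None:
        rng = np.random.default_rng()
    # Choose the top-left corner so the whole window fits inside the input.
    top = int(rng.integers(0, h - crop_size + 1))
    left = int(rng.integers(0, w - crop_size + 1))
    window = (slice(top, top + crop_size), slice(left, left + crop_size))
    # Indexing both arrays with the same window keeps labels aligned.
    return image[window], mask[window]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(1024, 1024, 3), dtype=np.uint8)
    mask = rng.integers(0, 6, size=(1024, 1024), dtype=np.uint8)
    img_crop, mask_crop = paired_random_crop(image, mask, 512, rng)
    print(img_crop.shape, mask_crop.shape)  # (512, 512, 3) (512, 512)
```

Cropping both arrays with one shared window is the key design choice: cropping them independently would silently misalign masks and pixels.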

Data Preparation

We apply category augmentation to each image to help the model better understand each category and to allow finer control over specific categories during generation. It also improves how samples are combined within a batch in the CF-Comp strategy. This step is not required if you only want to train your own remote sensing foundation generative model. To apply the category-augmentation method:

- Merge the split zip files and extract them

cat train.zip_part_* > train.zip

unzip train.zip

- Store the dataset in the following directory structure:

./data/EarthSynth-180K
└── train
    ├── images
    └── masks

- Run the category augmentation script:

python category-augmentation.py

After running, the directory will look like this:

./data/EarthSynth-180K
└── train
    ├── category_images   # Augmented single-category images
    ├── category_masks    # Augmented single-category masks
    ├── images
    ├── masks
    └── train.jsonl       # JSONL file for training
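If `cat` is not available (for example, on Windows), the merge-and-extract step from the first bullet above can be done in Python instead. This is a minimal sketch, assuming the part names sort correctly in plain lexicographic order, which is the same order the shell glob in `cat train.zip_part_*` relies on. The function name is hypothetical.

```python
import glob
import shutil
import zipfile


def merge_and_extract(pattern="train.zip_part_*", merged="train.zip", dest="."):
    """Concatenate split archive parts in sorted order, then extract the result.

    Hypothetical helper mirroring `cat train.zip_part_* > train.zip` followed
    by `unzip train.zip`. Returns the list of parts that were merged.
    """
    parts = sorted(glob.glob(pattern))  # lexicographic order, like the shell glob
    if not parts:
        raise FileNotFoundError(f"no files match {pattern!r}")
    # Stream each part into the merged archive without loading it all in memory.
    with open(merged, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)
    # Extract every member of the merged archive into `dest`.
    with zipfile.ZipFile(merged) as zf:
        zf.extractall(dest)
    return parts
```

Run it from the directory containing the downloaded parts, then place the extracted `train` folder under ./data/EarthSynth-180K as shown in the layout above.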
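The category-augmentation script itself is not reproduced on this page. As a hypothetical illustration of what a single-category mask could look like, assuming each mask stores one integer class ID per pixel with 0 as background, every category present in a mask can be split out into its own binary mask. This is not the logic of `category-augmentation.py`, whose mask format and output may differ.

```python
import numpy as np


def split_categories(mask, background=0):
    """Return {class_id: binary mask} for every non-background class in `mask`.

    Assumes `mask` is an H x W integer array of class IDs (an assumption;
    the format used by category-augmentation.py may differ).
    """
    result = {}
    for class_id in np.unique(mask):
        if class_id == background:
            continue
        # 1 where the pixel belongs to this class, 0 everywhere else.
        result[int(class_id)] = (mask == class_id).astype(np.uint8)
    return result


if __name__ == "__main__":
    mask = np.array([[0, 1, 1], [2, 0, 1]])
    parts = split_categories(mask)
    print(sorted(parts))  # [1, 2]
```

Isolating one category per mask is what makes it possible to pair each mask with a single-category text prompt, which is the kind of per-category control the augmentation step aims for.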

Model

Environment Setup

The experimental environment is based on diffusers==0.30.3, and the installation follows mmdetection's installation guide. If you encounter problems, refer to the requirements.txt in this repository.

git clone https://github.com/jaychempan/EarthSynth.git

cd EarthSynth

conda create -n earthsy python=3.8 -y

conda activate earthsy

pip install -r requirements.txt

cd diffusers

pip install -e ".[torch]"
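To confirm that the environment matches the stated diffusers version, a small check with Python's standard `importlib.metadata` (available from Python 3.8) can compare installed versions against pins. This is a sketch: the helper name is hypothetical, and the editable install of the bundled diffusers may report a slightly different version string, in which case a reported mismatch is informational rather than an error.

```python
from importlib.metadata import PackageNotFoundError, version


def check_pins(pins):
    """Return {package: (installed, expected)} for every pin that is not met.

    `installed` is None when the package is not installed at all.
    """
    mismatches = {}
    for name, expected in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None
        if installed != expected:
            mismatches[name] = (installed, expected)
    return mismatches


if __name__ == "__main__":
    # diffusers==0.30.3 is the version stated for the experiments.
    problems = check_pins({"diffusers": "0.30.3"})
    for name, (installed, expected) in problems.items():
        print(f"{name}: installed {installed}, expected {expected}")
    if not problems:
        print("all pinned versions match")
```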

EarthSynth with CF-Comp

EarthSynth is trained with the CF-Comp training strategy on a mixed distribution of real and logically unrealistic (counterfactual) data. It learns pixel-level remote sensing properties along multiple dimensions and builds a unified process for conditional diffusion training and synthesis.

Train EarthSynth

This project is built on the ControlNet structure in diffusers, which keeps it easy for the community to use and extend. To train, modify the configuration in train.sh under ./diffusers/train/ and run:

cd diffusers/

bash train/train.sh

Inference

Example inference using 🤗 HuggingFace pipeline:

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel
import torch
from PIL import Image

# Load the mask used as the ControlNet condition
img = Image.open("./demo/control/mask.png")

# Load the EarthSynth ControlNet and attach it to Stable Diffusion v1.5
controlnet = ControlNetModel.from_pretrained("jaychempan/EarthSynth")
pipe = StableDiffusionControlNetPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", controlnet=controlnet)
pipe = pipe.to("cuda:0")

# Generate an image with a fixed seed for reproducibility
generator = torch.manual_seed(10345340)
image = pipe(
    "A satellite image of a storage tank",
    generator=generator,
    image=img,
).images[0]

image.save("generated_storage_tank.png")

Alternatively, you can run inference locally with test.py:

python test.py --base_model path/to/stable-diffusion/ --controlnet_path path/to/earthsynth [--control_image_dir] [--output_dir] [--category_txt_path] [--num_images]

For example:

python test.py --base_model ./weights/stable-diffusion-v1-5/ --controlnet_path ./weights/EarthSynth/controlnet --num_images 5 --control_image_dir ./demo/control/ --category_txt_path ./demo/class.txt --output_dir ./outputs
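The format of the file passed to `--category_txt_path` is not documented here. As a minimal sketch, assuming class.txt lists one category name per line, prompts in the same form as the examples at the top of this page can be built like this (the helper name is hypothetical and not taken from test.py):

```python
from pathlib import Path


def build_prompts(category_txt_path, num_images=1):
    """Read one category per line and return (category, prompt) pairs.

    Blank lines are skipped; each prompt is repeated `num_images` times.
    """
    lines = Path(category_txt_path).read_text(encoding="utf-8").splitlines()
    categories = [line.strip() for line in lines if line.strip()]
    prompts = []
    for category in categories:
        prompt = f"A satellite image of {category}."
        # Repeat the prompt so several samples can be drawn per category.
        prompts.extend([(category, prompt)] * num_images)
    return prompts


if __name__ == "__main__":
    path = Path("./demo/class.txt")
    if path.exists():
        for category, prompt in build_prompts(path, num_images=5):
            print(category, "->", prompt)
```

Each prompt could then be passed to the pipeline together with a control mask and a generator, as in the HuggingFace inference example above.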

Training Data Generation

Acknowledgement

This project references and uses the following open-source models and datasets.

Related Open Source Models

Related Open Source Datasets

Citation

If you find this work useful or use our dataset, please cite our paper:

@misc{pan2025earthsynthgeneratinginformativeearth,

title={EarthSynth: Generating Informative Earth Observation with Diffusion Models},

author={Jiancheng Pan and Shiye Lei and Yuqian Fu and Jiahao Li and Yanxing Liu and Yuze Sun and Xiao He and Long Peng and Xiaomeng Huang and Bo Zhao},

year={2025},

eprint={2505.12108},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2505.12108},

}
