---
base_model:
- deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
license: apache-2.0
pipeline_tag: text-generation
library_name: transformers
---

Spiral-DeepSeek-R1-Distill-Qwen-7B

Links

- 📜 Paper

- 💻 GitHub

- 🤗 Spiral Collection

Introduction

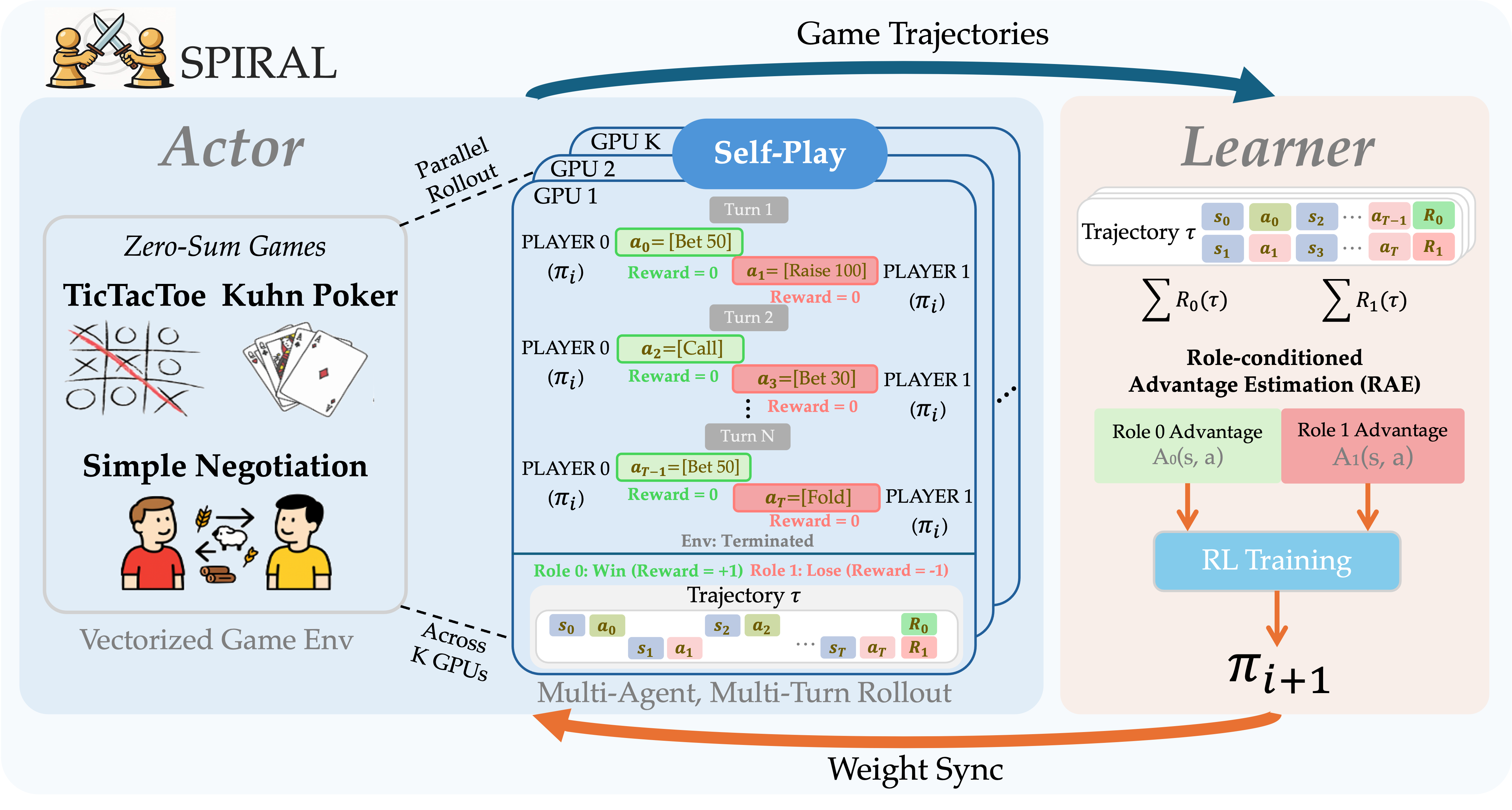

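This model is DeepSeek-R1-Distill-Qwen-7B further trained with the SPIRAL framework, which uses multi-agent, multi-turn reinforcement learning through self-play on three games: TicTacToe, Kuhn Poker, and Simple Negotiation.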
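To make "self-play on zero-sum games" concrete, here is a toy sketch on TicTacToe. One shared policy controls both players, and the terminal rewards always sum to zero. This is an illustration under stated assumptions, not SPIRAL's training code: `random_policy` is a hypothetical stand-in for the language model, and all helper names are made up for this example.

```python
import random
from typing import Callable, Dict, List, Optional

Board = List[str]                     # 9 cells, each "X", "O", or " "
Policy = Callable[[Board, str], int]  # (board, player to move) -> cell index

LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board: Board) -> Optional[str]:
    """Return "X" or "O" if a player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def random_policy(board: Board, player: str) -> int:
    # Hypothetical stand-in for the model: pick any empty cell.
    return random.choice([i for i, cell in enumerate(board) if cell == " "])


def self_play_episode(policy: Policy) -> Dict[str, int]:
    """Play one game in which the SAME policy moves for both X and O.

    Returns zero-sum terminal rewards: +1 for the winner, -1 for the
    loser, and 0 for both players on a draw.
    """
    board: Board = [" "] * 9
    player = "X"
    while winner(board) is None and " " in board:
        board[policy(board, player)] = player
        player = "O" if player == "X" else "X"
    w = winner(board)
    if w is None:
        return {"X": 0, "O": 0}
    return {w: 1, ("O" if w == "X" else "X"): -1}


rewards = self_play_episode(random_policy)
print(rewards)
```

Because both seats are filled by the same policy, every game yields learning signal for both roles, and any gain for one side is exactly a loss for the other.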

Usage

The model can be loaded and run with the Hugging Face `transformers` library:

```python
from transformers import pipeline, AutoTokenizer, AutoModelForCausalLM
import torch

model_id = "spiral-rl/Spiral-DeepSeek-R1-Distill-Qwen-7B"

# Load model and tokenizer (device_map="auto" requires the `accelerate` package)
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    torch_dtype=torch.bfloat16,  # or torch.float16 for GPUs that don't support bfloat16
    device_map="auto",
)

# Create a text generation pipeline.
# As an R1-distilled model it usually writes out its reasoning before the
# answer, so a small budget such as 50 new tokens tends to cut off the reply.
pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    max_new_tokens=1024,
    do_sample=True,
    temperature=0.7,
    top_k=50,
    top_p=0.95,
)

# Define a chat message
messages = [
    {"role": "user", "content": "What is the capital of France?"},
]

# Generate text. With chat input, "generated_text" holds the whole
# conversation, so the last message is the model's reply.
output = pipe(messages)
print(output[0]["generated_text"][-1]["content"])
```
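If you only want the final answer, the reply can be split into its reasoning and answer parts. The helper below is a minimal sketch that assumes the model follows the DeepSeek-R1 convention of closing its chain of thought with a `</think>` tag (depending on the chat template, the opening `<think>` may be part of the prompt rather than the reply, so only the closing tag is relied on). `split_reasoning` is a hypothetical name, not part of `transformers` or SPIRAL.

```python
def split_reasoning(text: str, end_tag: str = "</think>") -> tuple[str, str]:
    """Split a completion into (reasoning, answer) at the LAST end_tag.

    Assumes R1-style output where the reasoning ends with "</think>".
    If the tag is missing (e.g. generation hit max_new_tokens), the whole
    text is treated as reasoning and the answer is returned empty.
    """
    head, sep, tail = text.rpartition(end_tag)
    if not sep:
        return text.strip(), ""
    # Drop an opening <think> tag if the model emitted one itself.
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


reasoning, answer = split_reasoning(
    "<think>\nParis is the capital.\n</think>\n\nThe capital of France is Paris."
)
print(answer)
```

With the pipeline output, call `split_reasoning(output[0]["generated_text"][-1]["content"])`. An empty answer is a hint that `max_new_tokens` was too small for the reasoning trace.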

For more advanced usage, including training and evaluation with the SPIRAL framework, please refer to the GitHub repository.

Citation

```bibtex
@article{liu2025spiral,
  title={SPIRAL: Self-Play on Zero-Sum Games Incentivizes Reasoning via Multi-Agent Multi-Turn Reinforcement Learning},
  author={Liu, Bo and Guertler, Leon and Yu, Simon and Liu, Zichen and Qi, Penghui and Balcells, Daniel and Liu, Mickel and Tan, Cheston and Shi, Weiyan and Lin, Min and Lee, Wee Sun and Jaques, Natasha},
  journal={arXiv preprint arXiv:2506.24119},
  year={2025},
  url={https://arxiv.org/abs/2506.24119}
}
```