Sungur-14B

This is the quantized version of suayptalha/Sungur-14B.

Sungur-14B is a Turkish-specialized large language model derived from Qwen/Qwen3-14B. The model was fine-tuned using suayptalha/Sungur-Dataset, a 41.1k-sample collection of reasoning-focused conversations spanning domains such as mathematics, medicine, and general knowledge. This dataset is entirely in Turkish and was created to enhance native Turkish reasoning ability.

The training process employed 4-bit QLoRA for Supervised Fine-Tuning (SFT), enabling efficient adaptation while preserving the capabilities of the base model.

Sungur-14B is designed for Turkish reasoning and text generation tasks, delivering coherent, context-aware, and logically structured responses. Through its specialized dataset and training pipeline, the model gains strong native reasoning capabilities in Turkish, making it suitable for advanced applications in analytical dialogue, education, and domain-specific problem solving.

For thinking mode, use `temperature=0.6`, `top_p=0.95`, `top_k=20`, `min_p=0`, and `repetition_penalty=1.2`. DO NOT use greedy decoding, as it can lead to performance degradation and endless repetitions. For non-thinking mode, use `temperature=0.7`, `top_p=0.8`, `top_k=20`, and `min_p=0`.
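The recommended settings can be kept as two presets and selected per request. The following is a minimal sketch, not an official API: the names `THINKING`, `NON_THINKING`, and `sampling_kwargs` are illustrative, and the keyword names assume a Hugging Face `transformers`-style `generate` call (other runtimes may name these options differently).

```python
# Sampling presets transcribed from the recommendations above.
THINKING = {
    "temperature": 0.6,
    "top_p": 0.95,
    "top_k": 20,
    "min_p": 0.0,
    "repetition_penalty": 1.2,
}
NON_THINKING = {
    "temperature": 0.7,
    "top_p": 0.8,
    "top_k": 20,
    "min_p": 0.0,
}


def sampling_kwargs(thinking: bool) -> dict:
    """Return generation keyword arguments for the chosen mode (hypothetical helper)."""
    preset = THINKING if thinking else NON_THINKING
    # do_sample=True requests sampling instead of greedy decoding
    # in transformers-style APIs (assumption about the runtime).
    return {"do_sample": True, **preset}


# Hypothetical usage; `model`, `inputs`, and the token budget are assumptions:
# output = model.generate(**inputs, max_new_tokens=1024, **sampling_kwargs(thinking=True))
```

Returning a fresh dictionary on every call keeps the presets themselves unmodified if a caller overrides a value for a single request.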

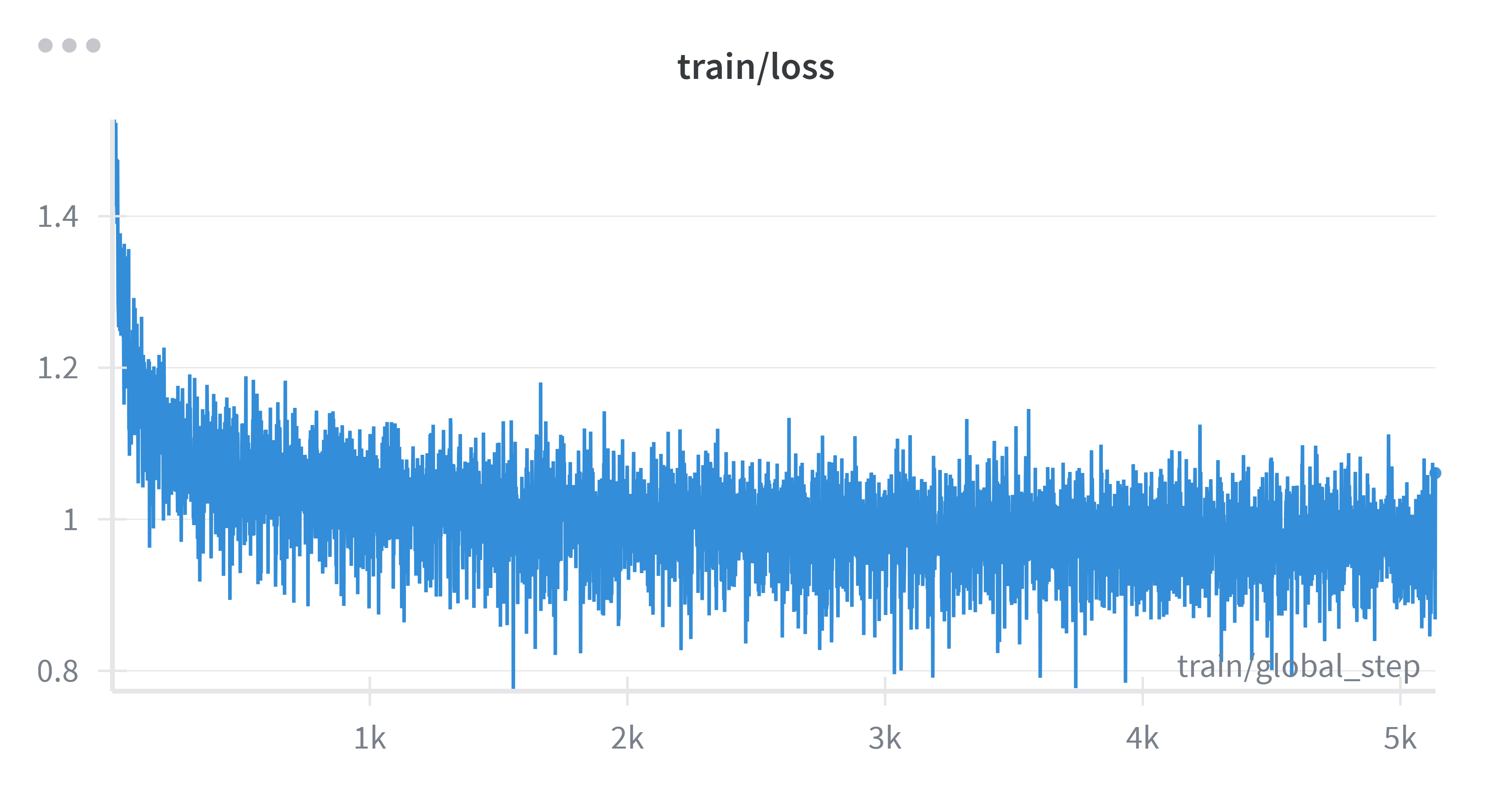

Loss Graph:

This model was trained on a single B200 GPU (1xB200). Training took about 3 hours.

📊 Benchmarks

Comparison with Base Model (via malhajar17/lm-evaluation-harness_turkish)

| Benchmark | Sungur-14B | Qwen3-14B |
|---|---|---|
| ARC (tr, acc) | 0.4727 | 0.4701 |
| ARC (tr, acc_norm) | 0.5213 | 0.5273 |
| GSM8K (tr, flex) | 0.0380 | 0.0418 |
| GSM8K (tr, strict) | 0.7760 | 0.8185 |
| HellaSwag (tr, acc) | 0.4051 | 0.4017 |
| HellaSwag (tr, norm) | 0.5279 | 0.5113 |
| Winogrande (tr) | 0.5893 | 0.5656 |
| TruthfulQA (acc) | 0.5174 | 0.5165 |
| MMLU (tr, avg.) | 0.6640 | 0.6729 |
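To see at a glance where the fine-tuned model gains or loses against the base model, the per-benchmark differences can be computed from the table. This is a small sketch: the numbers are transcribed from the table above, while the `scores` dictionary and the `deltas` helper are illustrative names, not part of any evaluation harness.

```python
# Scores transcribed from the comparison table: (Sungur-14B, Qwen3-14B).
scores = {
    "ARC (tr, acc)": (0.4727, 0.4701),
    "ARC (tr, acc_norm)": (0.5213, 0.5273),
    "GSM8K (tr, flex)": (0.0380, 0.0418),
    "GSM8K (tr, strict)": (0.7760, 0.8185),
    "HellaSwag (tr, acc)": (0.4051, 0.4017),
    "HellaSwag (tr, norm)": (0.5279, 0.5113),
    "Winogrande (tr)": (0.5893, 0.5656),
    "TruthfulQA (acc)": (0.5174, 0.5165),
    "MMLU (tr)": (0.6640, 0.6729),
}


def deltas(table: dict) -> dict:
    """Return {benchmark: Sungur-14B score minus Qwen3-14B score}, rounded to 4 places."""
    return {name: round(ours - base, 4) for name, (ours, base) in table.items()}


diffs = deltas(scores)
# Benchmarks where the fine-tuned model scores higher than the base model.
improved = [name for name, d in diffs.items() if d > 0]

for name, d in diffs.items():
    print(f"{name:<22} {d:+.4f}")
print(f"Higher than base on {len(improved)} of {len(diffs)} benchmarks")
```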

Turkish GSM8K Results

| Model Name | GSM8K (strict) |
|---|---|
| Qwen/Qwen2.5-72B-Instruct | 83.60 |
| Qwen/Qwen3-14B | 81.85 |
| Qwen/Qwen2.5-32B-Instruct | 77.83 |
| suayptalha/Sungur-14B | 77.60 |
| google/gemma-3-27b-it | 77.52 |
| ytu-ce-cosmos/Turkish-Gemma-9b-T1 | 77.41 |
| Qwen/Qwen2.5-14B-it | 76.77 |
| google/gemma-2-27b-it | 76.54 |
| suayptalha/Sungur-9B | 74.49 |
| ytu-ce-cosmos/Turkish-Gemma-9b-v0.1 | 73.42 |
| google/gemma-3-12b-it | 72.06 |
| meta-llama/Llama-3-1-70B-Instruct | 66.13 |
| Qwen/Qwen2.5-7B-Instruct | 64.16 |
| google/gemma-2-9b-it | 63.10 |

Acknowledgments

- Thanks to the @Qwen team for their amazing Qwen/Qwen3-14B model.
- Thanks to unsloth for the repository I used to train this model.
- Thanks to the entire Turkish open-source AI community.

Citation

@misc{sungur_collection_2025,
  title = {Sungur (Hugging Face Collection)},
  author = {Şuayp Talha Kocabay},
  year = {2025},
  howpublished = {\url{https://huggingface.co/collections/suayptalha/sungur-68dcd094da7f8976cdc5898e}},
  note = {Turkish LLM family and dataset collection}
}

license: apache-2.0
